ABSTRACT

In 1999, the Indiana University School of Medicine implemented a competency-based curriculum structured around nine core competencies. The students responded to this new curriculum with minimal enthusiasm. We sought to better understand the student perspective in an effort to improve and refine the competency requirements. Accordingly, early in the 2003-2004 academic year, we established four focus groups (6-10 students per group) to systematically analyze student experiences with the competency requirements across four years of medical school. Each of the groups met once to respond to a series of scripted questions about the competencies. Sessions were transcribed and analyzed by three readers using standard protocols for qualitative analysis. Analysis of 525 student comments revealed two major areas of dissatisfaction, as reflected by the frequency: Inconsistent knowledge and use of the competencies by the faculty (39%) and lack of clarity and uniformity in communication about the competencies (26%). Despite these perceived deficiencies, the students generally embraced the important concepts inherent in the nine competencies and recognized their application to the physician role (33%). Sixteen percent of the comments were specific recommendations for improvement, which included competency-specific training for all teachers, clear and consistent communication throughout all four years of the curriculum, and improved methods of feedback and assessment. Many of these recommendations were incorporated into refinements and additions to the competency-based curriculum, which probably contributed to the notable improvement in student acceptance of the competencies measured by end-of-course evaluations. This study has shown that focus groups can provide a rich source of information about student perceptions and attitudes regarding curricular change, and can reveal problems and shortcomings not otherwise apparent. 

INTRODUCTION

Numerous surveys of the American public have documented a persistent and growing dissatisfaction with the way health care is delivered in this country.1,2 Of particular concern is a sense of alienation between physician and patient. The technical competence of physicians is not in question, but the abilities to communicate effectively with patients, to convey compassion and respect, and to appreciate the uniqueness of individuals, are perceived by patients as uncommon physician attributes.3-5

Partly in response to this public dissatisfaction, medical schools and residency programs around the country have been making a gradual shift to “competency-based” medical education. Although differing in details, all competency-based programs attempt to promote and evaluate those skills, behaviors, and attitudes thought to exemplify well-rounded, compassionate physicians. It is no longer sufficient for a physician-in-training simply to demonstrate an adequate fund of knowledge and acceptable clinical skills; he or she must also demonstrate, for example, the ability to communicate bad news to a patient or to understand the cultural context of patient care.

The Accreditation Council for Graduate Medical Education (ACGME) has defined six general competencies to be incorporated into residency training,6 and many of the nation’s 125 medical schools are in various phases of discussion, review, and implementation of their own competency-based programs. Several reports in the literature have described the challenges educators face in making this shift to competency-based training. Impediments cited include securing faculty and student buy-in, defining benchmarks of competence, developing appropriate assessment tools, and creating interventions for remediation, as well as limited faculty expertise and the substantial investment in time required to develop these resources.7-13

Based on the pioneering work of Brown University,14 Indiana University School of Medicine (IUSM) implemented a competency-based curriculum in 1999, one of the first programs of its kind in the nation.15,16 IUSM defined nine core competencies that are assessed throughout the four-year medical curriculum. Acceptable performance in all nine competencies is required for graduation. IUSM has an enrollment of over 1100 students and nearly 1200 full-time faculty members distributed among nine separate campuses across the state. Instituting curricular change in a school of this size and complexity does not come easily, and we experienced many of the same hurdles described in the literature.

Recognizing that students can have a powerful influence on the success or failure of curricular change,17,18 the school sought input from representatives of the IUSM student body during the development and implementation of the new curriculum and made close monitoring of student perceptions a priority. However, despite repeated attempts to communicate the purpose of the competencies and how their achievement would be documented, the feedback from student course evaluations in the early years of implementation indicated that many students remained unconvinced of the value of the competencies to their education and were dissatisfied with the evaluation process.

To better understand the reasons for this discontent, we used structured focus groups and qualitative analysis to assess the impact of the competency-based curriculum on student satisfaction with their undergraduate educational experience and their perception of how well they were being prepared for the practice of medicine. Our goal was to identify specific areas for improvement that would guide refinement of the competency requirements and enhance the communication regarding curricular goals of the competencies.

METHODS

Focus Groups. Early in the 2003-2004 academic year, four focus groups were established, one for each class year. Each group was composed of 6 to 10 students who were not on academic probation; to assure a common basis for discussion, only students who had attended the main Indianapolis campus were included. A total of 30 students participated. Each group met once during the academic year to respond to a series of carefully scripted questions about the competency-based curriculum. Participants were invited to describe when and how the competencies had been presented to them, their perceptions of the competencies (both initially and after exposure to the competencies in the curriculum), their satisfaction with feedback and assessment regarding the competencies, how they believed the competencies may have enhanced their skills as physicians, and how the school could make the competency-based curriculum more meaningful and satisfying to students. In addition to these open-ended questions, participants were asked to rank on a five-point scale the importance of the competencies to medical education (5 = “essential” to 1 = “non-essential”).

Sessions were transcribed and analyzed by three readers using accepted protocols.19 Two readers used axial coding to sort the data into large themes, while the third used NVivo software (QSR International Pty Ltd) to perform a line-by-line examination and assign comments to thematic categories. Patterns of comments within categories were identified, and categories collapsed to achieve consensus on four over-arching themes. This research study was approved by the Indiana University Purdue University Indianapolis Institutional Review Board.

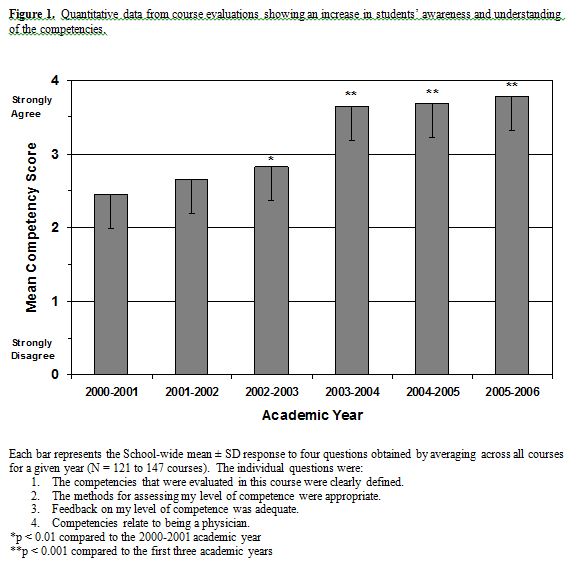

Course Evaluations. Beginning with the 2000-2001 academic year, four competency-related items were added to all end-of-course evaluations. These items were intended to evaluate the student awareness and understanding of the competencies. The individual item statements were:

- “The competencies that were evaluated in this course were clearly defined.”
- “The methods for assessing my level of competence were appropriate.”
- “Feedback on my level of competence was adequate.”
- “Competencies relate to being a physician.”

Responses were recorded using a five-point Likert scale, with 4 denoting “strongly agree” and 0 denoting “strongly disagree.” The mean response to these four items was obtained for each course, and a grand mean was obtained by averaging across all course-specific means for a given academic year (N = 121 to 147 courses).
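The two-stage averaging described above can be sketched in a few lines of Python. This is only an illustration: the course names and item values are hypothetical, and the sketch assumes that each course contributes one mean per item and that courses are weighted equally in the grand mean, which the text implies but does not spell out.

```python
from statistics import mean

def course_mean(item_means):
    """Average the four competency-related item means for one course (0-4 scale)."""
    if len(item_means) != 4:
        raise ValueError("expected one mean per competency-related item (4 items)")
    return mean(item_means)

def grand_mean(courses):
    """Average the course-specific means for one academic year (equal course weights)."""
    return mean(course_mean(items) for items in courses.values())

# Hypothetical item means for three courses (illustrative values, not study data).
example_year = {
    "course_a": [2.0, 2.5, 1.5, 3.0],
    "course_b": [3.0, 3.0, 2.0, 4.0],
    "course_c": [1.0, 2.0, 1.0, 2.0],
}
print(round(grand_mean(example_year), 2))  # 2.25
```

Averaging course means rather than pooling all responses keeps a large course from dominating the yearly score.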

The aggregate mean of these four items from all courses was compared across six years of evaluation (2000-2006). The return rate was approximately 80% per year. Mean rank responses were compared using the nonparametric Kruskal-Wallis test. Differences were considered significant at p < 0.05.
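For readers unfamiliar with the rank-based comparison named above, the following is a minimal pure-Python sketch of the tie-corrected H statistic. It is not the authors' analysis code. The sample values are hypothetical, and instead of an exact p-value the sketch compares H with tabulated chi-square critical values (the usual large-sample approximation, with degrees of freedom equal to the number of groups minus one).

```python
from itertools import chain

# Upper 5% critical values of the chi-square distribution, by degrees of freedom.
CHI2_CRIT_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488, 5: 11.070}

def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def kruskal_wallis_h(groups):
    """Tie-corrected H statistic for a list of samples (one sample per group)."""
    pooled = list(chain.from_iterable(groups))
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n).
    tie_counts = {}
    for v in pooled:
        tie_counts[v] = tie_counts.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in tie_counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical; H is undefined")
    return h / correction

# Hypothetical course means for three academic years (not study data).
years = [[1.0, 1.2, 1.4], [1.6, 1.8, 2.0], [2.6, 2.8, 3.0]]
h = kruskal_wallis_h(years)
significant = h > CHI2_CRIT_05[len(years) - 1]
print(round(h, 2), significant)  # 7.2 True
```

With six academic years, as in this study, the comparison would use five degrees of freedom (critical value 11.070 at the 0.05 level).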

RESULTS

Focus Group Data. The total number of comments made by the students (stratified by class year) was: MS1 = 110 (8), MS2 = 114 (6), MS3 = 175 (10), MS4 = 126 (6). The number of students is shown in parentheses. Careful examination of the student comments revealed four main themes, as reflected by the frequency:

- Faculty Knowledge and Use of Competencies (39%)
- How Competencies Relate to Being a Physician (33%)
- Communication of Competencies to Students (26%)
- Students’ Recommendations for Improvement (16%)

The total percentage exceeds 100% because some individual comments applied to multiple themes. For each of the main themes, we have provided a summary statement that we believe captures the general consensus view, as well as several representative quotations from students.
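The arithmetic behind these frequencies can be illustrated with a short Python sketch. The per-class comment totals are taken from the text above; the coded comments and theme labels are hypothetical and serve only to show why percentages computed this way can sum to more than 100% when a comment is assigned to more than one theme.

```python
from collections import Counter

def theme_percentages(coded_comments):
    """Percentage of comments assigned to each theme; a comment may carry several themes."""
    total = len(coded_comments)
    counts = Counter(theme for themes in coded_comments for theme in set(themes))
    return {theme: 100 * n / total for theme, n in counts.items()}

# Comment totals by class year, as reported in the Results.
comments_by_class = {"MS1": 110, "MS2": 114, "MS3": 175, "MS4": 126}
print(sum(comments_by_class.values()))  # 525

# Hypothetical coding of four comments (not study data).
coded = [
    {"faculty"},
    {"faculty", "recommendations"},
    {"communication"},
    {"physician_role", "recommendations"},
]
shares = theme_percentages(coded)
print(shares["faculty"], sum(shares.values()))  # 50.0 150.0
```

Each percentage uses the total number of comments as its denominator, so a comment that counts toward two themes is counted twice in the sum of percentages.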

Faculty Knowledge and Use of Competencies. Students perceived inconsistent knowledge and use of the competencies by the faculty.

- “You know I pay my [tuition] so if I’m going to be part of the whole competency thing, I would like to know that the people who are assessing me are capable of doing that.” MS3
- “I say 98% of my staff, residents, and interns don’t even know what a competency is.” MS3
- “[Faculty] just check the box ‘good’ . . . I wonder if they are aware of it [the competency] themselves. I kind of think it’s just another paper and grade sheet that they have to check a box on.” MS3
- “Some of them just decide that we will not address competencies because it’s extra work.” MS4
- “ . . . if my preceptor watches me do an H&P or something like that, it’s very good, but I don’t think he’s thinking about the competencies when he’s evaluating these.” MS2

How Competencies Relate to Being a Physician. Students generally embraced the important concepts inherent in the nine competencies and recognized their application to the physician role.

- “I think there are good doctors out there and I think there are bad doctors out there and I think that there are ones just okay, and I think a lot of it comes back to whether or not they have these characteristics.” MS3
- “These [the competencies] are tough to assess and I’m glad the School is trying to take it on. You know, it’s got to be done and I don’t think anybody can really argue [against] these nine things as being important.” MS3
- “If [consumers] know that people are taking the time and effort to identify these things, maybe consumer confidence in medicine will come back.” MS3
- “We think these things are kind of ridiculous, but that’s because we integrate all of them into our daily activities . . . So I guess by that standard, yes, ‘mission accomplished’.” MS4
- “It’s just a good way to keep us in check as we are going along. To remind us there’s more than just the basic sciences . . . a way to keep us steering toward the type of physicians that we want to be.” MS1

Communication of Competencies to Students. Students perceived a lack of clarity and uniformity in communication about the competencies.

- “You’re thinking to yourself, I’m taking biochemistry and anatomy and what the heck does that have to do with moral reasoning and ethical judgment.” MS4
- “The first time that I ever heard about [the competencies] was when a [basic science] professor was going through his introduction and said, ‘I’m required to say that these are the competencies that will be addressed by this class’.” MS2
- “[The competencies] are laid out in some 100-page manual on every rotation and nobody really reads those. I guess you can look it up if you wanted to, but it’s not specifically addressed.” MS3
- “You can point out peoples’ faults and assess them and say you need to improve on this, but where does it go after that? Who helps you improve?” MS3
- “Dr. X got up [in a 3rd-year clerkship] and actually talked about the competencies that were addressed . . . . That was the first time I ever actually understood any of the competencies and I never had any of them specifically explained to me before.” MS3

Student Recommendations for Improvement. For each area of concern, students offered specific recommendations for improving competencies within the curriculum, which included competency-specific training for all teachers, clear and consistent communication throughout all four years of the curriculum, and improved methods of feedback and assessment.

- “I think that if [a course addresses a particular competency], it should be assessed the entire time. It shouldn’t just be one little thing that you do.” MS4
- “Have this [the competencies] be what they grade us on…because you can’t be a medical student and get through without doing these things. You might as well make it part of our grade [because] that’s the way we all are going to take something more importantly if it’s reflected in our grades.” MS4
- “Wouldn’t it be great if there was a course on ANGEL [course management website] about the competencies where I could see the checkmarks I’m collecting, and then when I get my first checkmarks…voila…they show up on ANGEL…look I’m making progress! This is what I’m going to be expected to do next year and have it laid out right there.” MS2
- “I think the group [150 students] is too large . . . to assess these things . . . but I would be ok with it being assessed in smaller groups.” MS2
- “We love attention . . . I want someone paying attention to me, telling me what I’m good at, telling me what I’m really bad at, and how I can get better. I want attention!” MS2

In response to the request, “Please rank, using a five-point scale, how important you think the competencies are to your medical education, with five being essential and one being non-essential,” focus group participants (stratified by class year) ranked the competencies as follows: MS1 = 4.7 ± 0.5 (8), MS2 = 4.9 ± 0.2 (6), MS3 = 4.8 ± 0.4 (10), MS4 = 4.4 ± 0.7 (6). Data are expressed as the mean ± SD (N).
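As an illustration of the "mean ± SD (N)" summary used above, the sketch below formats a set of ratings in the same way. The ratings are hypothetical, and the use of the sample (n - 1) standard deviation is an assumption, since the text does not say which form was used.

```python
from statistics import mean, stdev

def mean_sd(ratings):
    """Format ratings as 'mean ± SD (N)' using the sample standard deviation."""
    return f"{mean(ratings):.1f} ± {stdev(ratings):.1f} ({len(ratings)})"

# Hypothetical ratings from a six-student group (not study data).
print(mean_sd([5, 5, 5, 4, 5, 5]))  # 4.8 ± 0.4 (6)
```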

Course Evaluation Data. Quantitative data from the end-of-course evaluations showed an improvement in student awareness and understanding of the competencies (Figure 1). During the first three years of evaluation, the aggregate mean of the four competency-related items (mean competency score) improved marginally, but still reflected a generally poor student perception of the competencies. Not until the 2003-2004 academic year did the mean competency score increase sufficiently to indicate a generally favorable student perception of the competencies. The score remained elevated during the 2004-2005 and 2005-2006 academic years.

DISCUSSION

“Reforming the curriculum without attention to the learning environment, to me, does not serve the students and the public good.” Kenneth Ludmerer20

In 1999, IUSM adopted a competency-based curriculum consisting of nine competencies: (1) Effective Communication; (2) Basic Clinical Skills; (3) Using Science to Guide Diagnosis, Management, Therapeutics, and Prevention; (4) Lifelong Learning; (5) Self-Awareness, Self-Care, and Personal Growth; (6) The Social and Community Contexts of Health Care; (7) Moral Reasoning and Ethical Judgment; (8) Problem Solving; and (9) Professionalism and Role Recognition.15 Assessment and certification of achievement in these competencies are sequentially integrated into all four years of the curriculum, culminating in a competency transcript upon graduation. For each competency, three levels of mastery are defined according to specific performance criteria. Students must demonstrate a beginning level of mastery (Level 1) in all nine competencies by the end of their second year, and an intermediate level (Level 2) by the end of their third year. During their fourth year, students must demonstrate an advanced level (Level 3) in three competencies of their choosing. The graduating class of 2003 was the first to complete the entire four-year, competency-based curriculum.

Educational research highlights the importance of including student input into curricular revision,21,22 because students can exert a powerful influence on the success or failure of such undertakings.17,18 Although student input was sought throughout the development and implementation of the competency-based curriculum, its initial reception by the IUSM student body was less than enthusiastic. From the 2000-2001 academic year (the first year for which evaluation data were available) until the 2002-2003 academic year, the mean competency score, while gradually improving, nonetheless indicated weak student support. Many of the graduating seniors in this period expressed harsh critiques of the competency-based curriculum on the Association of American Medical Colleges graduation questionnaire. In ways both formal and informal, the students made their dissatisfaction with the competencies known. Corrective action was plainly needed. We believed that an in-depth understanding of the reasons for student dissatisfaction was crucial if the new curriculum was to succeed and thrive. Our use of focus groups provided a way to systematically analyze student experience with the competencies across four years of medical school. Our intent was to provide a clear and accurate summary of the perceptions and experiences of students as they progressed through the competency-based curriculum. The information gleaned from this study helped to identify and correct some of the perceived deficiencies in our new curriculum, and may serve as a cautionary guide for other schools contemplating similar curricular changes.

At the time of this study in early 2003-2004, the competency-based curriculum had entered into its fifth year and virtually all IUSM students had been exposed to the competencies since the start of medical school. This fact alone may have diminished some of the resentment voiced in earlier years by students who felt they were being treated as “guinea pigs” in an untested curriculum. With familiarity comes acceptance, which may partly explain the substantial improvement in mean competency scores observed in 2003-2004 and beyond. Had this study been conducted earlier, we might have heard a different, perhaps less balanced, student perspective. Despite their dissatisfaction with certain aspects of the new curriculum, the students in our study strongly endorsed the concepts embodied in the competencies and their relevance to the practice of medicine. As noted in the Results, all four focus group classes ranked the competencies highly in terms of their importance to medical education. The comments expressed by the focus group participants were not about whether the competencies should be taught but how they should be taught. Nevertheless, we believe it fair to say that the students took a dim view of how the competency-based curriculum had been implemented, and they questioned whether the competencies were being assessed in a rational and consistent manner.

Of the two major areas of concern identified by the students, the most frequently mentioned was inconsistent knowledge and use of the competencies by the faculty. Some professors expressly stated which competencies were being assessed in their course, explained how the students were to be evaluated, and provided meaningful feedback about their performance. But this was not usually the case. All too often students received little or no guidance about the competencies being assessed or the manner of evaluation. Feedback was vague and unhelpful, if it existed at all. Moreover, the way in which a particular competency was evaluated in one course often seemed inconsistent with the way it was evaluated in another course. For example, a physiology professor might assess Effective Communication by examining the legibility of a student’s handwriting, whereas an anatomy professor might assess this same competency by critiquing a student’s oral presentation. Because there were no generally agreed-upon assessment methods, each faculty member simply did what he or she thought expedient to achieve the goal. All of this lent an air of ambiguity and capriciousness to the competency-assessment process, which the students found very disconcerting.

Another area of concern identified by the students was lack of clarity and uniformity in communication about the competencies. Although the school made diligent attempts to educate students about the competencies early in their first year and periodically thereafter, the students continued to express confusion and uncertainty about what was expected of them. That the faculty did not speak with one voice about the competencies probably accounted for much of this confusion. What students heard from one faculty member was often at odds with what they heard from another faculty member. Some professors would highlight the competencies, whereas others would ignore them. From the student perspective, there was no clear and consistent source of information about the competencies, and the faculty was not unanimous in its embrace of the new curriculum.

These insightful observations led naturally to the students’ principal recommendations for improvement: competency-specific training for all teachers, clear and consistent communication throughout all four years of the curriculum, and improved methods of feedback and assessment. Interestingly, these recommendations parallel some of the faculty concerns identified by Broyles et al.13 in their study of curricular change at an osteopathic medical school. Early in the 2004-2005 academic year, we shared the student comments and recommendations with key administrators and faculty members in charge of the competency-based curriculum. At that time, some of the deficiencies identified by the students, such as the lack of standardization in competency assessment, had already come to the attention of the school’s educational administration and were targeted for improvement. To what extent the student voices added to the chorus and motivated specific reforms is uncertain, but we suspect they were a major influence.

Several improvements in the competency-based curriculum have been instituted since we shared our findings. For example, a week-long educational experience was created to expose newly matriculated students to the competencies in a clinical context.15 During their first week of medical school, students systematically examine a single clinical case from different competency perspectives and learn how the competencies work together to improve patient outcomes. This in-depth introduction is intended to impress upon the students the importance of the competencies and illustrate how they are utilized across the continuum of medical care.

Another notable improvement was the establishment of competency teams.15 Each of nine teams is organized to support a particular competency, is chaired by a competency director, and includes basic scientists, clinicians, students, and education specialists. Each team is responsible for developing new competency learning experiences and assessments, integrating these competency activities across the four-year curriculum, and improving the communication of competency requirements to the students and faculty. Although the effectiveness of the first-week experience and competency teams has yet to be fully realized, we believe they have gone a long way toward alleviating many of the students’ criticisms about the competency-based curriculum.

This study has shown that focus groups can provide a rich source of information about student perceptions and attitudes regarding curricular change, and can reveal problems and shortcomings not otherwise apparent. Owing to their unique perspective, the students themselves can often envision the best ways to correct curricular deficiencies. Our school’s experience with curricular change underscores the importance of student feedback at all stages of the process. By soliciting student feedback early in the new curriculum, before student dissatisfaction with the competencies was entrenched, we were able to gather constructive criticisms that led to actionable reforms. The fact that the mean competency score remained elevated for three consecutive academic years suggests that the competency-based curriculum at IUSM survived its growing pains and has now matured to the point where it is well-accepted by the students.

ACKNOWLEDGEMENTS

This work was supported by an Indiana University School of Medicine Educational Research and Development Grant.

REFERENCES

1. Bogart, L.M., Bird, S.T., Walt, L.C., Delahanty, D.L., and Figler, J.L. Association of stereotypes about physicians to health care satisfaction, help-seeking behavior, and adherence to treatment. Social Science and Medicine. 2004; 58(6):1049-1058.
2. Zemencuk, J.K., Hayward, R.A., Skarupski, K.A., and Katz, S.J. Patients’ desires and expectations for medical care: A challenge to improving patient satisfaction. American Journal of Medical Quality. 1999; 14(1):21-27.
3. Williams, S., Weinman, J., and Dale, J. Doctor-patient communication and patient satisfaction: A review. Family Practice. 1998; 15(5):480-492.
4. Roter, D.L., Stewart, M., Putnam, S.M., Lipkin Jr., M., Stiles, W., and Inui, T.S. Communication patterns of primary care physicians. Journal of the American Medical Association. 1997; 277(4):350-356.
5. Brown, J.B., Boles, M., Mullooly, J.P., and Levinson, W. Effect of clinician communication skills training on patient satisfaction: A randomized, controlled trial. Annals of Internal Medicine. 1999; 131(11):822-829.
6. ACGME outcome project: General competencies. Accreditation Council for Graduate Medical Education. http://www.acgme.org/outcome/comp/compHome.asp [Accessed November 24, 2006]
7. Carraccio, C., Englander, R., Wolfsthal, S., Martin, C., and Ferentz, K. Educating the pediatrician of the 21st century: Defining and implementing a competency-based system. Pediatrics. 2004; 113(2):252-258.
8. Carraccio, C., Wolfsthal, S.D., Englander, R., Ferentz, K., and Martin, C. Shifting paradigms: From Flexner to competencies. Academic Medicine. 2002; 77(5):361-367.
9. Folberg, R., Antonioli, D.A., and Alexander, C.B. Competency-based residency training in pathology: Challenges and opportunities. Human Pathology. 2002; 33(1):3-6.
10. Mohr, J.J., Randolph, G.D., Laughon, M.M., and Schaff, E. Integrating improvement competencies into residency education: A pilot project from a pediatric continuity clinic. Ambulatory Pediatrics. 2003; 3(3):131-136.
11. Reed, V.A., Jernstedt, G.C., Ballow, M., Bush, R.K., Gewurz, A.T., and McGeady, S.J. Developing resources to teach and assess the core competencies: A collaborative approach. Academic Medicine. 2004; 79(11):1062-1066.
12. Heard, J.K., Allen, R.M., and Clardy, J. Assessing the needs of residency program directors to meet the ACGME general competencies. Academic Medicine. 2002; 77(7):750.
13. Broyles, I., Savidge, M., Schwalenberg-Leip, E., Thompson, K., Lee, R., and Sprafka, S. Stages of concern during curriculum change. Journal of the International Association of Medical Science Educators. 2007; 17(1):14-26.
14. Smith, S.R., and Fuller, B. An educational blueprint for the Brown University School of Medicine. Brown University. 2006. http://biomed.brown.edu/Medicine_Programs/MD2000/Blueprint_for_the_Web_04.pdf [Accessed August 7, 2007]
15. Litzelman, D.K., and Cottingham, A.H. The new formal competency-based curriculum and informal curriculum at Indiana University School of Medicine: Overview and five-year analysis. Academic Medicine. 2007; 82(4):410-421.
16. Smith, P.S., and Cushing, H.E. Indiana University School of Medicine. Academic Medicine. 2000; 75(9):S118-S121.
17. Bland, C.J., Starnaman, S., Wersal, L., Moorhead-Rosenberg, L., Zonia, S., and Henry, R. Curricular change in medical schools: How to succeed. Academic Medicine. 2000; 75(6):575-594.
18. Bernier Jr., G.M., Adler, S., Kanter, S., and Meyer III, W.J. On changing curricula: Lessons learned at two dissimilar medical schools. Academic Medicine. 2000; 75(6):595-601.
19. Krueger, R.A. Analyzing and reporting focus group results. Volume 6. Thousand Oaks: Sage Publications, 1998.
20. Greene, J. Medical schools: changing times, changing curriculum. amednews.com. December 11, 2000.
21. Atkins, K.M., Roberts, A.E., and Cochran, N. How medical students can bring about curricular change. Academic Medicine. 1998; 73(11):1173-1176.
22. Gerrity, M.S., and Mahaffy, J. Evaluating change in medical school curricula: How did we know where we were going? Academic Medicine. 1998; 73(9):S55-S59.