ABSTRACT

This study determined the efficacy (as measured by bellringer exam scores) of three anatomy instructional tools: 2D images, 3D images, and hands-on models and prosected specimens (HO). Eighteen undergraduate students participated in this prospective, crossover-controlled, single-blind study. The analysis provided no strong pedagogical evidence to favor one instructional tool over another.

The purpose of this study was to determine the efficacy of three anatomy instructional tools: 2D images, 3D images, and hands-on models and prosected specimens. This specific question is couched in the broader question of how best to teach gross anatomy. The significance of this line of research has grown dramatically, because the capacity of computing and communication technologies to deliver high-quality learning experiences to a diverse audience is one of the most promising aspects of computer-assisted learning (CAL).1

This diverse audience may include distance learners around the world. At Lakehead University, for example, distance education (DE) offerings grew from 52 courses (1700 registrants) in 2000 to 139 courses (4800 registrants) in 2007.2 Fuelling this growth is the assumption that high-tech 3D graphics or easily accessible 2D graphics have great educational value in gross anatomy instruction, yet little evidence exists to support that contention, and there is a critical need to test it. Medical educators face important decisions about the use of instructional materials (e.g. cadavers, skeletons, plastic models, computer models, and atlas illustrations), and some of these decisions are being based on financial and accessibility merits. This study brings the pedagogical merits into greater focus. If 2D and 3D images are empirically shown to be as good as or better than traditional tools for increasing student understanding of human anatomy, then medical educators can embrace this trend toward CAL and DE. If 3D images impede learning in some individuals, then this type of learning tool needs cautious application or minimized inclusion in curricula.

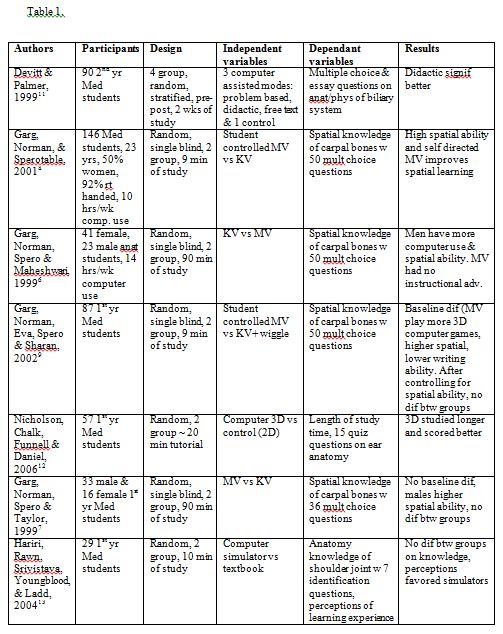

Experimental studies comparing learning modes in anatomy are few. Fourteen such studies were identified in the literature, and these are summarized (along with the current study) in Table 1. Observations of note include:

- There is no clear evidence that CAL is significantly better than traditional methods.

- Although the majority of results favor computer-assisted technology, methodological concerns have compromised the conclusions drawn. The strength of evidence has been hampered by (a) not controlling or monitoring the study time spent with each learning medium,3 (b) allowing students to self-select their treatment group,3,4 and (c) measuring improvement scores when the pre- and post-tests differ substantially.5

- Many of the media-comparative studies were of limited value because the critical elements separating the two forms of instruction were not identified.

- The four studies by Garg and colleagues,6-9 as well as the study by Levinson and colleagues,10 suggest that multiple views of anatomical images may actually impede learning in students with low spatial ability.

- Apart from the current investigation, no study has employed a crossover design. A crossover design allows each participant to serve as their own control for motivation, aptitude, and background knowledge.

- The current study was also unusual in using actual course test scores as the dependent variable. This has the dual advantage of promoting a consistently high level of motivation in the participants and applying the research question to a “real life” setting (i.e. increasing external validity).

After ethics approval, 18 of the 25 students enrolled in an undergraduate-level Human Anatomy course at Lakehead University consented to participate in the study. Students received 6 hours/week of lecture instruction and 4 hours/week of laboratory time. Data were obtained from the laboratory bellringer exams on the musculoskeletal (MSK) system at the end of each of three one-week units (head and neck, upper extremity, and lower extremity). No diagnostic images (e.g. CT or MRI) were used. A bellringer exam is a timed circuit of stations at which students identify, in writing, various tagged anatomical structures.

The 3D images used in this study were high-resolution stereoscopic images viewed with stereoscopic glasses; students could not manipulate them. The images came from the Bassett collection of prosected cadaver specimens and bones, hosted on a Stanford University server.

The 2D images showed the same prosected cadaver specimens and bones from the Bassett collection, but were not viewed stereoscopically.

The prosected limb specimens were dissected so that students could observe superficial and deep structures of the MSK system. Natural human bones from a disarticulated skeleton were used, along with high-quality SOMSO© plastic models, to illustrate the various bones and muscles of the MSK system.

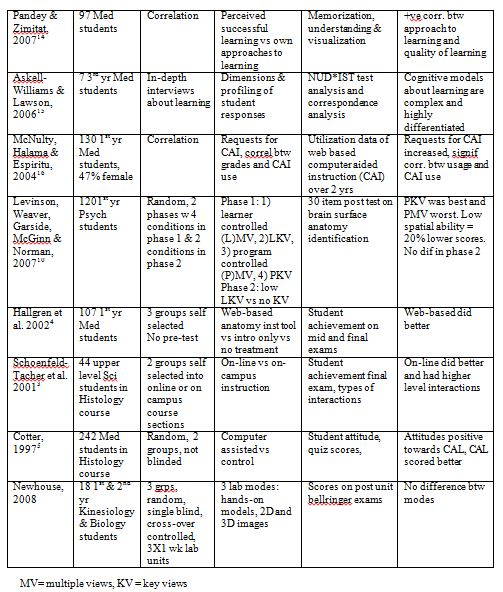

Participants were randomly assigned to one of three groups in Unit 1, with subsequent treatment placements as shown in Table 2.

Placement into groups was blinded: the Principal Investigator (who was also the Professor) was unaware of the group assignments. This blinding was maintained throughout the course because the Professor, while delivering the lecture material, was not present during the laboratory periods. It also allowed the Professor to do all of the marking without potential bias.

All students wrote three separate (HO, 2D, and 3D) bellringer exams at the end of each unit. The material in each exam was identical except for the mode of presentation. Each exam consisted of approximately 11 stations, each with 4 structures to identify and 2 minutes allotted.
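As a rough check on exam length, using only the approximate figures stated above (about 11 stations, 4 structures per station, 2 minutes per station), a single bellringer exam works out to roughly:

$$
11 \times 4 \approx 44\ \text{structures}, \qquad 11 \times 2\ \text{min} \approx 22\ \text{min}, \qquad \frac{120\ \text{s}}{4\ \text{structures}} = 30\ \text{s per structure}.
$$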

Students had access to the learning materials only during their scheduled laboratory times. Supplementary studying, differing motivational levels, and learning preferences were not specifically controlled for; however, because the crossover design makes each student their own control, the influence of these confounding variables is greatly limited.
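To make the crossover idea concrete, the sketch below shows one common way such a rotation can be built: a cyclic 3 × 3 Latin square, in which each group receives every treatment once and each treatment appears once per unit across the three groups. This is an illustrative assumption, not the study's allocation procedure; the actual sequences are those given in Table 2, and the helper names (`latin_square_sequences`, `assign_participants`) are hypothetical.

```python
import random

TREATMENTS = ["HO", "2D", "3D"]
UNITS = ["Head & neck", "Upper extremity", "Lower extremity"]


def latin_square_sequences(treatments):
    """Cyclic Latin square: row g is the treatment order for group g.

    Every treatment appears exactly once in each row (one group's sequence)
    and exactly once in each column (one unit).
    """
    k = len(treatments)
    return [[treatments[(g + u) % k] for u in range(k)] for g in range(k)]


def assign_participants(participant_ids, treatments, seed=None):
    """Randomly split participants evenly across the sequences (hypothetical helper)."""
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    sequences = latin_square_sequences(treatments)
    # After shuffling, deal participants round-robin so each sequence gets len(ids) / k people.
    return {pid: sequences[i % len(sequences)] for i, pid in enumerate(ids)}


# Usage: 18 participants, giving 6 per treatment sequence.
schedule = assign_participants(range(1, 19), TREATMENTS, seed=2007)
for pid in sorted(schedule):
    print(pid, dict(zip(UNITS, schedule[pid])))
```

A simple cyclic square balances treatments across units but not which treatment precedes which; that is one reason the carry-over (treatment by period) check described in the analysis matters.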

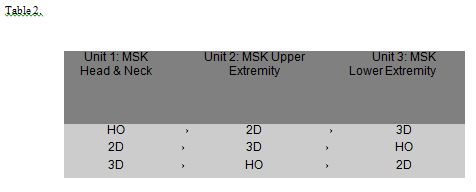

All data analysis was performed using SPSS. In crossover studies such as this one, it is important to test for a carry-over effect, i.e. to see whether a learning effect confounded scoring in subsequent units. Armitage and Hills17 also refer to this effect as the “treatment by period” interaction, where “treatment” is the factor representing the HO, 2D, or 3D treatment groups and “period” is the factor representing each of the three units. Before testing for the carry-over effect, test scores were standardized across units so that the mean scores in Units 1, 2, and 3 were identical. Individual scores were further transformed from absolute to relative scores by expressing each as a change relative to that individual's overall mean. The carry-over effect was assessed with three one-way ANOVAs (HO at T1 vs. HO at T2 vs. HO at T3, where T denotes the unit, then the same comparison for the 2D and 3D treatments). Because none of these ANOVAs showed a significant difference, the carry-over effect was dismissed, justifying pooling of the data across units. The pooled data were analyzed using a 3 × 1 (one-way) ANOVA with n = 18 in each treatment group.
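The analysis steps above can be sketched in plain Python as follows. This is not the authors' SPSS procedure; it is a minimal illustration under stated assumptions: the record layout is hypothetical, "standardized across units" is read as per-unit mean-centring (the text does not say whether unit variances were rescaled), and only the F statistic and degrees of freedom are computed.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical record layout (not from the study): one dict per participant per unit,
# e.g. {"pid": 7, "unit": 2, "treatment": "3D", "score": 81.0}


def standardize_units(records):
    """Shift scores so every unit has the same mean (the grand mean).

    Assumption: per-unit mean-centring; unit variances are left unchanged.
    """
    grand = mean(r["score"] for r in records)
    unit_means = {
        u: mean(r["score"] for r in records if r["unit"] == u)
        for u in {r["unit"] for r in records}
    }
    return [{**r, "score": r["score"] - unit_means[r["unit"]] + grand} for r in records]


def change_scores(records):
    """Re-express each score relative to that participant's own mean over all units."""
    person_means = {
        p: mean(r["score"] for r in records if r["pid"] == p)
        for p in {r["pid"] for r in records}
    }
    return [{**r, "score": r["score"] - person_means[r["pid"]]} for r in records]


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a one-way between-groups ANOVA.

    A p-value needs the F distribution, e.g. scipy.stats.f.sf(F, df_b, df_w) if SciPy is available.
    """
    values = [x for g in groups for x in g]
    k, n = len(groups), len(values)
    grand = mean(values)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_b, df_w = k - 1, n - k
    return (ss_between / df_b) / (ss_within / df_w), df_b, df_w


def carry_over_tests(records, treatments=("HO", "2D", "3D")):
    """One ANOVA per treatment: do scores under that treatment differ across units?"""
    results = {}
    for t in treatments:
        by_unit = defaultdict(list)
        for r in records:
            if r["treatment"] == t:
                by_unit[r["unit"]].append(r["score"])
        results[t] = one_way_anova([by_unit[u] for u in sorted(by_unit)])
    return results


def pooled_test(records, treatments=("HO", "2D", "3D")):
    """Pooled comparison of the three treatments after the carry-over check."""
    groups = [[r["score"] for r in records if r["treatment"] == t] for t in treatments]
    return one_way_anova(groups)
```

With 18 participants and three units, each carry-over ANOVA has 2 and 15 degrees of freedom (6 participants per unit for a given treatment), and the pooled ANOVA has 2 and 51. Note that the pooled comparison treats the three treatment groups as independent, as described in the text.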

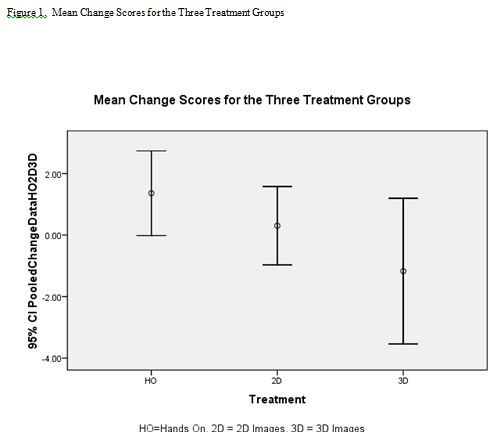

When assessing the carry-over effect, there were no significant differences between units for the HO, 2D, or 3D treatments (p = .69, p = .58, and p = .07, respectively). This permitted pooling of the data, giving 18 participants in each treatment group. A 3 × 1 ANOVA found no significant difference between treatment groups, as shown in Tables 3a and 3b and Figure 1.

At face value, this study demonstrates that HO, 2D, and 3D anatomy laboratory instructional tools produce roughly similar learning outcomes. A more guarded interpretation of the results may, however, be warranted. The participants were undergraduate students in Kinesiology and Biology programs, and similar results may not be seen with learners of different backgrounds or ages; for example, these results may not hold in a more rigorous and comprehensive graduate-level course. In addition, test scores on a purely “identify the structure” bellringer exam are not the only learning outcomes that could or should be measured when weighing the merits of an anatomy laboratory instructional tool. Methodological limitations should also be considered. Although the crossover protocol strengthens the design by making each participant their own control, replicating this study with larger student numbers would be worthwhile.

The variance in the results also suggests that the benefits of an instructional modality may be learner-dependent: certain learners may indeed have a preference and aptitude for certain modalities. The work of Garg and colleagues6-9 and of Levinson and colleagues10 suggests that multiple views of anatomical images may actually impede learning in students with low spatial ability, so spatial ability should be an important consideration in designing future studies.

The p = .07 result from the ANOVA across units for the 3D treatment would also be worth exploring. Although p = .07 is not statistically significant, and thus permitted pooling of participants, this treatment by period interaction hints at a more complex relationship between 3D treatment factors (e.g. the spatial ability of the learners) and unit factors (e.g. the particular anatomy content, or a learning effect from one unit to the next).

With these considerations in mind, researchers appear to be only beginning to assess the merits of HO materials and 2D or 3D images as anatomy laboratory instructional tools. On the basis of this study, though, there is no discernible advantage of any one mode over the others.

REFERENCES

1. Florance, V. Better health in 2010: Information technology in 21st century health care, education and research. Association of American Medical Colleges. 2002.

2. Lakehead University, Office of Institutional Analysis, 2007.

3. Schoenfeld-Tacher, R., McConnell, S., and Graham, M. Do no harm: a comparison of the effects of on-line vs. traditional delivery media on a science course. Journal of Science Education and Technology. 2001; 10(3): 257-265.

4. Hallgren, R.C., Parkhurst, P., Monson, C., and Crewe, N. An Interactive Web-based tool for Learning Anatomic Landmarks. Academic Medicine. 2002; 77(3): 263-265.

5. Cotter, J.R. Computer-assisted Instruction for the Medical Histology Course at SUNY at Buffalo. Academic Medicine. 1997; 72(10).

6. Garg, A., Norman, G., Spero, L., and Taylor, I. Learning anatomy: do new computer models improve spatial understanding? Medical Teacher. 1999; 21(5): 519-522.

7. Garg, A., Norman, G., Spero, L., and Maheshwari, P. Do virtual computer models hinder anatomy learning? Academic Medicine. 1999; 74(10) Suppl: S87-S89.

8. Garg, A., Norman, G., and Spero, L. How medical students learn spatial anatomy. The Lancet. 2001; 357(2): 363-364.

9. Garg, A., Norman, G., Eva, K., Spero, L., and Sharan, S. Is there any real virtue of virtual reality? The minor role of multiple orientations in learning anatomy from computers. Academic Medicine. 2002; 77(10) Suppl: S97-S99.

10. Levinson, A.J., Weaver, B., Garside, S., McGinn, H., and Norman, G.R. Virtual reality and brain anatomy: a randomized trial of e-learning instructional designs. Medical Education. 2007; 41: 495-501.

11. Devitt, P., and Palmer, E. Computer-aided learning: an overvalued educational resource? Medical Education. 1999; 33: 136-139.

12. Nicolson, D.T., Chalk, C., Funnell, W.R.J. and Daniel, S.J. Can virtual reality improve anatomy education? A randomized controlled study of a computer-generated three dimensional anatomical ear model. Medical Education. 2006; 40: 1051-1057.

13. Hariri, S., Rawn, C., Srivastava, S., Youngblood, P., and Ladd, A. Evaluation of a surgical simulator for learning clinical anatomy. Medical Education. 2004; 38: 896-902.

14. Pandey, P., and Zimitat, C. Medical students’ learning of anatomy: memorization, understanding and visualization. Medical Education. 2007; 41: 7-14.

15. Askell-Williams, H. and Lawson, M.J. Multidimensional profiling of medical students’ cognitive models about learning. Medical Education. 2006; 40: 138-145.

16. McNulty, J., Halama, J., Dauzvardis, M., and Espiritu, B. Evaluation of Web-based Computer-aided Instruction in a Basic Science Course. Academic Medicine. 2000; 75: 59-65.

17. Armitage, P., and Hills, M. The two-period crossover trial. The Statistician. 1982; 31(2): 119-131.