ABSTRACT

We assessed the impact of using clinical vignettes in single best option multiple choice questions (MCQs) on recall of factual pharmacology knowledge. Using in-term quizzes administered to second year medical students, we tested the same knowledge of isolated facts with two sets of MCQs. The MCQs given to the intervention cohort were context-rich, with accompanying clinical vignettes; the MCQs given to the control cohort were context-free but required recall of identical knowledge. The intervention cohort students correctly answered fewer of their (context-rich) MCQs than the control cohort students did of theirs (context-free). A similar difference between the two cohorts was found on the common MCQs, which were identical for both. Later, we retested the same knowledge in the midterm and final examinations, using the same context-free MCQs for the entire class. Students in both cohorts performed better on the retested MCQs than on newly created MCQs, but there was no significant difference between the two cohorts.

Responses to a five-item “closed response” questionnaire indicated that the majority of students were favorably inclined toward context-rich MCQs, although some had reservations about this format. We conclude that facilitating recall of knowledge is not a compelling reason for using MCQs with accompanying clinical vignettes.

INTRODUCTION

Among the wide variety of written assessment methods, multiple choice questions (MCQs), especially the single best option variety, remain a staple of examinations administered to undergraduate medical students. Despite the criticism that they promote regurgitation of facts rather than exploring higher order reasoning, MCQs continue to be used extensively, not only by medical schools but also in the initial stages of licensing examinations.1,2 Their logistical advantage, allowing large numbers of students to be tested on a broad sample of topics in a relatively short time, remains unmatched by any other written examination format.

The validity of MCQs is sometimes questioned because they test factual knowledge rather than professional competence, which integrates knowledge, problem solving, communication skills and attitudes. Nevertheless, it has been convincingly shown that knowledge of a domain is the single best determinant of expertise.3 Thus, this form of written test continues to be regarded as a valid method of testing competence.1,4

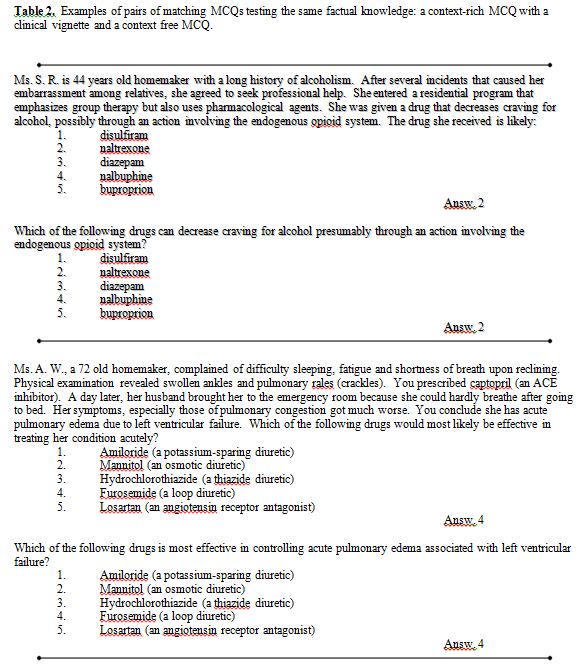

The format and wording of the stem of an MCQ (what the question asks) play an essential role in determining what is being assessed. In its simplest form, an MCQ asks about an isolated fact. Such a “context-free” MCQ tests rote knowledge only. Higher order skills, such as interpretation and problem solving, are best tested using a “context-rich” stem, in which the question is embedded in a meaningful context.5 In biomedical subjects, context enrichment most often takes the form of a clinical vignette.2 The putative advantages of enriching the stem with a clinical vignette are twofold. First, it presumably allows higher order cognitive skills to be assessed. Second, information is thought to be better recalled when the retrieval context is similar to the learning context.6 For this reason, the universal use of clinical vignettes is strongly encouraged, even in MCQs testing factual knowledge.

The goal of the present study was to test the validity of the second conjecture by exploring the differences between context-rich and context-free MCQs. The context-rich MCQs were embedded in clinical vignettes; the context-free MCQs were not. The subjects of this study, second year medical students in an integrated basic medical science course, were tested on the pharmacology and therapeutics component of the course, most of which was taught in a clinical context. Our study addresses the following questions: (1) When testing the same factual knowledge, do students perform differently on context-rich and context-free MCQs? (2) Is later recall of factual information influenced by whether the previous evaluation used context-rich or context-free MCQs?

MATERIALS AND METHODS

We conducted this experimental, prospective, cluster-randomized study during a three-month interdisciplinary course at the beginning of the second year of the four-year undergraduate medical curriculum at McGill University. The course used diverse teaching modalities: didactic lectures, interactive whole-class formats, and small group sessions. The small group sessions in the pharmacology component of the course employed case-based learning, providing opportunities to discuss important pharmacological principles against the background of a clinical case.

Single best option MCQs are the major component of the multidimensional assessment of the students. In addition to the midterm and final examinations, we used MCQs in four in-term quizzes, two held before the midterm examination and two before the final examination. We administered each in-term quiz at the end of a pharmacology small group session; then, as a learning activity, we discussed the questions and provided the correct answers.

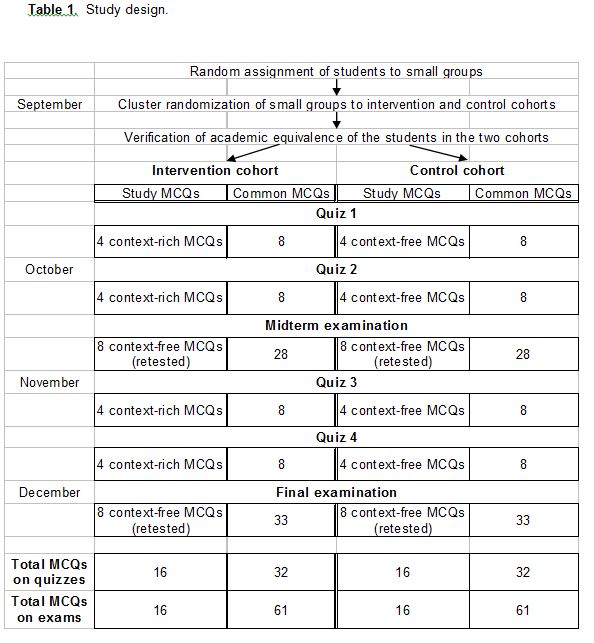

Our study design is outlined in Table 1. The Dean’s office randomly assigned the students to 13 small groups of 15 or 16 students each. For the purpose of this investigation, we divided the class into two cohorts: the intervention cohort comprised the 109 students in small groups 1, 3, 5, 7, 9, 11 and 13, and the control cohort the 93 students in groups 2, 4, 6, 8, 10 and 12. To verify that the two cohorts were academically equivalent, we calculated the average grade and the average Z-score of the students in each cohort across all seven preceding basic science course units of the first year medical curriculum. The average grade and average Z-score were 81.4% and 0.015 for the intervention cohort and 81.1% and -0.018 for the control cohort. These nearly identical values indicated that randomization had produced comparable cohorts.
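The cohort-equivalence check described above can be illustrated with a minimal sketch. It assumes that each student's grade in each course unit is converted to a Z-score against the whole class for that unit (using the population standard deviation, which the study does not specify) and that these Z-scores are then averaged over units and over the students of a cohort. The function names and the toy grades are hypothetical and are not the study's data or code.

```python
from statistics import mean, pstdev


def unit_z_scores(grades):
    """Standardize one course unit's grades against the whole class.

    Assumption: the population SD (pstdev) is used; the study does not
    state whether population or sample SD was applied.
    """
    mu = mean(grades)
    sd = pstdev(grades)
    return [(g - mu) / sd for g in grades]


def cohort_summary(grades_by_unit, cohort):
    """Return (average grade, average Z-score) for one cohort.

    grades_by_unit: one list per course unit, indexed by student.
    cohort: indices of the students belonging to the cohort.
    """
    z_by_unit = [unit_z_scores(unit) for unit in grades_by_unit]
    avg_grade = mean(mean(unit[i] for i in cohort) for unit in grades_by_unit)
    avg_z = mean(mean(z[i] for i in cohort) for z in z_by_unit)
    return avg_grade, avg_z


# Hypothetical toy data: 2 course units, 4 students (not study data).
units = [[80, 90, 70, 80], [75, 85, 85, 75]]
print(cohort_summary(units, [0, 2]))  # toy "intervention" cohort
print(cohort_summary(units, [1, 3]))  # toy "control" cohort
```

Standardizing within each unit removes differences in difficulty between units, so an average Z-score near 0 means a cohort sits at the class average regardless of how hard individual units were.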

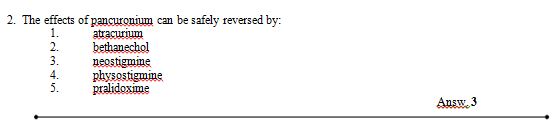

We then prepared two sets of in-term quizzes, each quiz consisting of 12 MCQs. In the set used for the intervention cohort, 4 MCQs per quiz were context-rich, with clinical vignettes; in the set used for the control cohort, these were replaced by 4 context-free MCQs designed to test the same knowledge (Table 2). The remaining 8 MCQs (“common MCQs”) were identical for both cohorts. The authors, two clinicians and two basic scientists, wrote the MCQs with assistance from two experienced pharmacology lecturers. We later reused the context-free MCQs in the midterm and final examinations with slight modifications (usually a change in the sequence of the answer options).

Testing student knowledge recall in quizzes

Students in the intervention cohort completed in-term quizzes containing a total of 16 context-rich MCQs with clinical vignettes and 32 common MCQs. Students in the control cohort completed in-term quizzes containing a total of 16 context-free MCQs and the same 32 common MCQs. We carefully matched the context-rich and context-free MCQs to ensure that they tested the same knowledge.

Testing student knowledge recall in the midterm and final examinations

In the midterm and final examinations, we retested the knowledge tested in the in-term quizzes, using 16 modified versions of the context-free quiz MCQs (8 in the midterm and 8 in the final examination). All MCQs in both examinations were the same for the entire class.

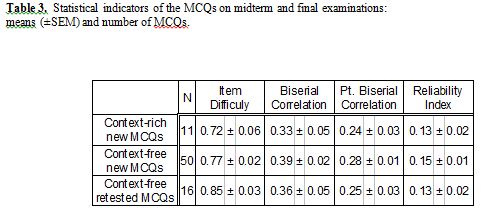

Statistical indicators of MCQs

For each MCQ in the midterm and final examinations, the McGill computing centre routinely provides four statistical indicators:7 (1) MCQ difficulty, the proportion of students answering correctly among all who attempted the MCQ; (2) the biserial correlation coefficient and (3) the point-biserial correlation coefficient, both of which express the ability of the MCQ to discriminate between students with high and low scores on the examination; and (4) MCQ reliability, a coefficient expressing, for each MCQ, the proportion of true variation relative to errors of measurement. We compared these indicators across the categories of MCQs (Table 3).
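Indicators (1) to (3) above can be illustrated with a short sketch using the textbook formulas: difficulty p is the proportion correct; the point-biserial coefficient is r_pb = (M1 - M0) / s × √(pq), where M1 and M0 are the mean total scores of students who answered the MCQ correctly and incorrectly and s is the standard deviation of total scores; and the biserial coefficient is r_b = r_pb × √(pq) / y, where y is the standard normal ordinate at the point dividing the area into p and q. The computing centre's exact implementation is not described, so details such as whether the MCQ itself is included in the total score are assumptions here. Indicator (4) is not reproduced because its formula is not given. The function name and the toy scores are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, pstdev


def item_statistics(item, totals):
    """Difficulty, point-biserial and biserial coefficients for one MCQ.

    item:   1 if the student answered the MCQ correctly, else 0.
    totals: each student's total examination score (assumed here to
            include this MCQ; some programs exclude it).
    """
    n = len(item)
    p = sum(item) / n                  # difficulty: proportion correct
    q = 1 - p
    if p in (0, 1):
        raise ValueError("discrimination is undefined if all or no students are correct")
    m1 = mean(t for t, x in zip(totals, item) if x == 1)  # mean total, correct
    m0 = mean(t for t, x in zip(totals, item) if x == 0)  # mean total, incorrect
    s = pstdev(totals)                 # population SD of total scores
    r_pb = (m1 - m0) / s * sqrt(p * q)
    # Biserial: rescale by the standard normal ordinate at the p/q split.
    y = NormalDist().pdf(NormalDist().inv_cdf(p))
    r_bis = r_pb * sqrt(p * q) / y
    return p, r_pb, r_bis


# Hypothetical toy data: 4 students, one MCQ (not study data).
print(item_statistics([1, 1, 0, 0], [10, 8, 6, 4]))
```

Because the biserial coefficient assumes an underlying continuous ability, it is always larger in magnitude than the point-biserial coefficient for the same MCQ and can exceed 1 in small or skewed samples, as in this toy example.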

Student questionnaire

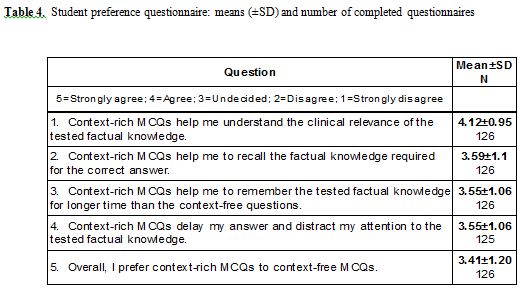

We administered an anonymous five-item questionnaire exploring students’ attitudes toward context-rich and context-free MCQs, using a forced choice 5-point Likert scale anchored by “strongly agree (5)” and “strongly disagree (1)” (Table 4). The questionnaire also requested basic demographic data and invited optional narrative comments.
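As an illustration of how responses on the 5-point scale might be tabulated for each statement, the following minimal sketch reports the mean score, the full distribution, and the proportions agreeing and disagreeing. Pooling 4 and 5 as “agree” and 1 and 2 as “disagree” is an assumed convention, not one stated in the study; the function name and toy responses are hypothetical.

```python
from collections import Counter
from statistics import mean


def summarize_likert(responses):
    """Summarize responses to one statement on a 1-5 Likert scale.

    Assumed convention: "agree" pools 4 and 5, "disagree" pools 1 and 2.
    """
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    n = len(responses)
    counts = Counter(responses)
    return {
        "n": n,
        "mean": mean(responses),
        "agree": (counts[4] + counts[5]) / n,
        "disagree": (counts[1] + counts[2]) / n,
        "distribution": {k: counts[k] for k in range(1, 6)},
    }


# Hypothetical responses to a single statement (not study data).
print(summarize_likert([5, 4, 4, 3, 2]))
```

Reporting the distribution alongside the mean matters for Likert items, since a mean near 3 can hide a polarized split between agreement and disagreement.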

Ethical considerations

Prior to the study, we informed all students that some of the MCQs in the quizzes would be used for research purposes only. The study was approved by the Research Ethics Board of McGill University.

Statistics

We used conventional descriptive statistics, t-tests with Bonferroni correction for multiple comparisons, and the Spearman rank correlation coefficient to compare the measured values.
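The Bonferroni correction named above can be sketched as follows: with m comparisons in a family, each raw p-value is multiplied by m (capped at 1), which is equivalent to testing each raw p-value against alpha / m. The study does not state how many comparisons formed each family, so the p-values below are hypothetical; in practice the raw p-values would come from a t-test routine such as SciPy's `scipy.stats.ttest_ind`. The function name is illustrative only.

```python
def bonferroni(p_values, alpha=0.05):
    """Bonferroni adjustment for a family of m comparisons.

    Each p-value is multiplied by m and capped at 1; equivalently, each
    raw p-value is compared with alpha / m.
    """
    m = len(p_values)
    adjusted = [min(1.0, p * m) for p in p_values]
    significant = [p_adj < alpha for p_adj in adjusted]
    return adjusted, significant


# Hypothetical raw p-values from three t-tests (not values from this study).
adj, sig = bonferroni([0.01, 0.02, 0.04])
print(adj, sig)
```

Here only the first comparison survives correction, even though all three raw p-values are below 0.05; this conservatism is the price of controlling the family-wise error rate.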

RESULTS

Performance on the in-term quizzes

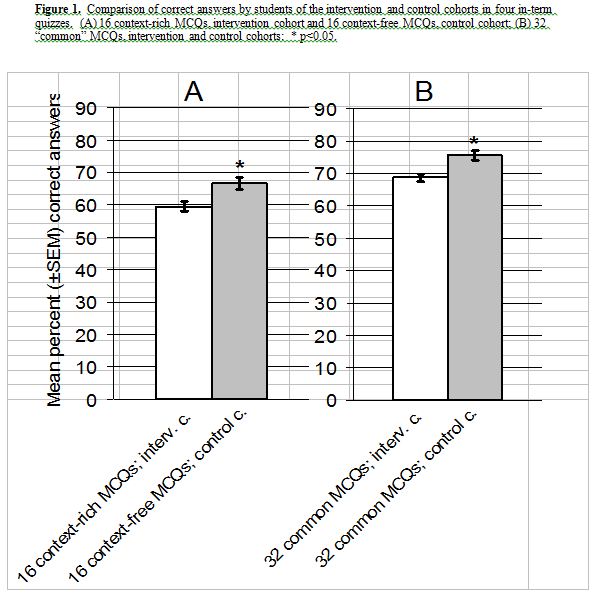

The control cohort students performed better than the intervention cohort students on the 16 in-term MCQs that tested the same knowledge: the average grade was 66.7% for the control cohort on the context-free MCQs and 60.0% for the intervention cohort on the context-rich MCQs (p < 0.05, Fig. 1A). The control cohort students also performed better on the 32 common MCQs, i.e., the context-free MCQs answered by both cohorts (75.6% vs. 68.6%, p < 0.05, Fig. 1B).

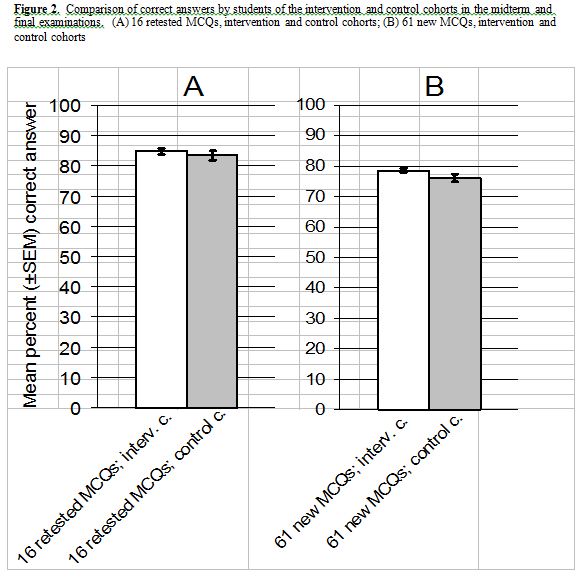

Performance on midterm and final examinations

The intervention and control cohort students performed almost identically on the 16 retested MCQs (84.9% vs. 83.4%, p > 0.05, Fig. 2A) and on the remaining 61 new MCQs (78.7% vs. 76.0%, p > 0.05, Fig. 2B). Both cohorts performed better on the 16 retested MCQs than on the 61 new MCQs (intervention cohort 84.9% vs. 78.7%, p < 0.05; control cohort 83.4% vs. 76.0%, p < 0.05), regardless of whether the material had previously been tested with context-rich or context-free MCQs.

Statistical indicators

The midterm and final examinations contained three categories of MCQs: context-free MCQs tested before (retested), context-free MCQs not tested before (new), and context-rich MCQs not tested before (new). The mean difficulty indicator (proportion correct) of the retested MCQs was higher than that of the other two categories, although the difference was not statistically significant. This is consistent with the students’ better performance on the retested MCQs. The discrimination and reliability indicators of all three categories of MCQs were comparable (Table 3).

Questionnaire responses

Of the 202 students in the class, 126 (62%) completed the questionnaire; their answers are summarized in Table 4. The responses to statements 1, 2, 3 and 5 indicate that the majority of students were favorably inclined toward context-rich MCQs, whereas the responses to statement 4 revealed that a sizeable minority of students had reservations about this format. Mean responses to statements 1, 2, 3 and 5 did not differ among students with different levels of education before admission to medicine. However, responses to statement 4 were negatively correlated with the level of education before admission to medical studies, classified in three categories (premed, undergraduate, and graduate or professional degree) (Spearman rank correlation coefficient = -0.31, p < 0.05, N = 125), indicating that students with less advanced education had a weaker preference for context-rich MCQs.
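Because education level has only three categories and Likert responses only five values, the data behind the correlation reported above contain many ties. A minimal sketch of the tie-corrected Spearman coefficient, computed as the Pearson correlation of average ranks, is shown below; the shortcut formula 1 - 6Σd²/(n(n² - 1)) is exact only without ties. The numeric coding of education levels, the function names and the toy data are hypothetical, and no p-value is computed (SciPy's `scipy.stats.spearmanr` would return both the coefficient and a p-value).

```python
from math import sqrt
from statistics import mean


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the block of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rho as the Pearson correlation of tie-averaged ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (sx * sy)


# Hypothetical toy data (not study data): education coded
# 1 = premed, 2 = undergraduate, 3 = graduate/professional,
# paired with Likert responses (1-5) to a single statement.
education = [1, 1, 2, 2, 3, 3]
statement_4 = [5, 4, 4, 3, 2, 3]
print(spearman(education, statement_4))
```

A negative coefficient in this coding means that agreement with the statement falls as the level of prior education rises.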

Many of the 28 narrative comments received were favorable, such as: “Context-rich is the way to go. Yes, more time consuming but if done appropriately then better as teaching tool. Also USMLE-like”. The most frequent positive comment was that the context-rich format helps students prepare for the licensing examinations, which use that format. Some comments were negative, such as: “At the basic, first time learning stage, the info should be tested (i.e., quizzes) in a more basic, less complicated context-free way”.

DISCUSSION

In recognition of the generally accepted notion that medical education should be integrated and that basic sciences should be taught in a clinical context, clinical relevance has been the leitmotif of pharmacology teaching at McGill University for many years.8 The relevance of basic science to clinical medicine is emphasized in didactic lectures and further reinforced in small group sessions and other interactive teaching modalities, which present pharmacological principles in the context of clinical cases. In view of this, we were surprised that the clinical vignettes used in our study did not give the intervention cohort students an advantage in recalling the factual knowledge required to answer the context-rich MCQs in the in-term quizzes correctly. In fact, the intervention cohort students performed less well than the control cohort students on MCQs testing the same factual knowledge. All students had had some experience with context-rich MCQs from previous courses, so unfamiliarity with this format cannot readily explain this difference. The same difference between the two cohorts emerged on the “common” MCQs, which were the same for the entire class. Thus, the poorer performance of the intervention cohort students may reflect a difference in knowledge of the material tested in the in-term quizzes rather than greater difficulty in answering the context-rich questions. It is also possible that reading the longer stems of the context-rich MCQs distracted the students and impaired their overall performance on the quizzes. Uneven matching of the two cohorts is unlikely to account for this difference, since their performances were equivalent in the seven interdisciplinary basic science courses of the first year medical curriculum (see Methods) and in the subsequent pharmacology component of the midterm and final examinations (Fig. 2).

Although the in-term quizzes contributed minimally to summative assessment, their main purpose was formative: to provide a learning experience, especially in preparation for the midterm and final examinations. As expected, they appear to have accomplished this goal: in the midterm and final examinations, students in both cohorts correctly answered more retested MCQs (context-free MCQs retesting knowledge previously tested in the in-term quizzes) than new MCQs. However, there was no overall difference in performance between the two cohorts, suggesting that previous exposure to either context-rich or context-free MCQs in the in-term quizzes improved the formative outcome in the midterm and final examinations equally.

Our study has limitations. First, because of the scheduling of the small group sessions and the examinations, the test-retest interval (between the quizzes and the examinations) varied from 4 to 32 days. Second, we have no objective verification that the two versions of an MCQ, context-free and context-rich, tested exclusively identical factual knowledge. We relied on the judgment of five experienced medical teachers that the same factual knowledge was sufficient to answer both versions of a given MCQ correctly, and that reading the longer stem of the context-rich MCQ was the only additional task facing the intervention cohort students.

The students’ questionnaire responses indicate an overall positive attitude toward context-rich MCQs. The narrative comments suggest that the main reason for this attitude was purely pragmatic, namely that “practice” with context-rich MCQs is useful preparation for the licensing examinations. Nevertheless, a substantial number of students had reservations about this format; as the correlation suggested, this attitude was more prevalent among students with fewer years of university education before they began medical school. The presumably older and more mature students, with more advanced education before admission to medicine, may have been better able to recognize the value of clinical context for their learning, or may simply have been less affected by the longer stems of the context-rich MCQs.

The basic tenet of learning in context is that recall is facilitated when new knowledge is acquired in an authentic context.9 This notion has been put into practice in problem-based learning, where basic science and clinical knowledge are learned in the context of clinical problems relevant to medical practice. There is well-documented evidence that recall is facilitated when the context in which learning occurs resembles the context in which the knowledge is tested.10 However, Koens et al. failed to confirm this same-context advantage in settings relevant to medical education, the classroom and the bedside, suggesting that physical surroundings do not contribute to a same-context advantage in medical education.11,12 Clearly, the concept of context in education encompasses more than physical surroundings.12

Although it is commonly assumed that clinical context increases students’ commitment to acquiring and retaining basic biomedical science knowledge, our results do not support this notion. Adding clinical vignettes to the stems of the MCQs improved neither the summative outcome (recall in the quizzes) nor the formative outcome (later recall tested in the examinations). The additional clinical context of the quiz MCQs was apparently not substantial or authentic enough to provide a cognitive advantage measurable in the examinations.

CONCLUSION

Our study suggests that facilitation of both learning and recall of factual knowledge is not a compelling reason for using context-rich MCQs.

ACKNOWLEDGMENTS

We are grateful to Drs. Brian Collier and Daya Varma, both from the Department of Pharmacology and Therapeutics, McGill University, for participating in critical editing of the MCQs used in this study.

REFERENCES

- McCoubrie, P. Improving the fairness of multiple-choice questions: a literature review. Med. Teach. 2004; 26: 709-712.

- Case, S.M., and Swanson, D.B. Constructing written test questions for the basic and clinical sciences. 3rd (revised) ed. Philadelphia, PA: National Board of Medical Examiners, 2002.

- Glaser, R. Education and thinking: The role of knowledge. Am. Psychol. 1984; 39: 93-104.

- Downing, S.M. Assessment of knowledge with written test formats. In: Norman, G., van der Vleuten, C., and Newble, D., eds. International Handbook of Research in Medical Education, Part Two. Dordrecht: Kluwer Academic Publishers, 2002: 647-672.

- Schuwirth, L.W., and Van der Vleuten, C.P.M. Different written assessment methods: what can be said about their strengths and weaknesses? Med. Educ. 2004; 38: 974-979.

- Smith, S.M., and Vela, E. Environmental context-dependent memory: a review and meta-analysis. Psychon. Bull. Rev. 2001; 8: 203-220.

- Thorndike, R.L., ed. Educational Measurement. 2nd ed. Washington, DC: American Council on Education, 1971.

- Norman, G.R., and Schmidt, H.G. The psychological basis of problem-based learning: a review of the evidence. Acad. Med. 1992; 67: 557-565.

- Regehr, G., and Norman, G.R. Issues in cognitive psychology: implications for professional education. Acad. Med. 1996; 71: 988-1001.

- Godden, D.R., and Baddeley, A.D. Context-dependent memory in two natural environments: on land and underwater. Br. J. Psychol. 1975; 66: 325-331.

- Koens, F., ten Cate, O.T., and Custers, E.J. Context-dependent memory in a meaningful environment for medical education: in the classroom and at the bedside. Adv. Health Sci. Educ. 2003; 8: 155-165.

- Koens, F., Mann, K.V., Custers, E.J., and ten Cate, O.T. Analysing the concept of context in medical education. Med. Educ. 2005; 39: 1243-1249.