ABSTRACT

Pop quizzes have been used in non-medical academic settings to ensure student preparedness and improve test scores. This study is the first to examine the utility of pop quizzes in an undergraduate medical education (UME) setting. Data from three consecutive classes of sophomore medical students (n = 409) were used to compare performance among students who took pop quizzes, scheduled quizzes, or no additional quizzes. The indicators examined were course test scores, semester exam scores, cumulative course grades and scores on the National Board of Medical Examiners’ (NBME) Introduction to Clinical Diagnosis subject examination. Students who took pop quizzes performed worst on semester exams, the NBME subject examination and final course grades. ANOVA with post hoc tests showed that all three groups differed significantly on NBME scores and semester exam scores, with students who took no quizzes scoring highest, followed by those who took scheduled quizzes and then those who took pop quizzes. These findings suggest that mandatory pop quizzes are of questionable value in a UME clinical skills course.

INTRODUCTION

Educators in almost every academic environment face the common problem of students who come to class unprepared. In medical education, instructors must also present multiple complex subjects that require students to learn a large volume of material in a relatively short time. Pop quizzes have been used by educators in non-medical academic settings to ensure student preparedness1, promote critical thinking skills2 and improve subsequent test scores and grades3. A small number of studies in non-medical educational settings have examined the effectiveness of pop quizzes, reporting that they improve students’ preparation for exams, their subsequent test scores, and their final course grades3. In addition, some studies have found that students may perceive pop quizzes as useful and may even like them3-6. However, no published studies in undergraduate medical education settings have examined how pop quizzes affect medical student performance.

Anderson3 studied how the frequency of quiz administration in a behavioral science course affected medical student study behavior, but did not examine pop quizzes. Using a prospective experimental design within a single course, Anderson had a small sample of students (n = 10) record their study behaviors under different sequences of quiz and no-quiz periods. Students studied more during weeks in which they were quizzed regularly, but “cramming” occurred under both conditions, and quizzing was not related to exam performance. Streips et al7 noted that frequently administering quizzes or exams to medical students may hinder retention by leaving too little time to integrate what has been learned, resulting in a “study and forget” cycle.

Are pop quizzes effective in undergraduate medical education (UME) settings? To address this question, the current study explored whether pop quizzes were associated with medical student performance. The specific objective was to examine whether the quiz format (pop quizzes, scheduled quizzes, or no quizzes) used in an Introduction to Clinical Medicine-II (ICM-II) course for sophomore medical students was associated with differences in a) ICM-II test scores, semester exam scores, and cumulative course grades, and b) scores on the National Board of Medical Examiners’ (NBME) Introduction to Clinical Diagnosis subject examination.

MATERIALS AND METHODS

Participants, Design and Instruments. This study compared course data from three cohorts of sophomore medical students (2002-2004; n = 409) enrolled in an ICM-II course at a medical school in the mid-south United States. ICM-II is a year-long course that runs parallel to the basic science curriculum; it is systems-based and uses clinical vignettes for both teaching and testing. The study was approved by the institutional review board of the medical school where it was conducted.

The three cohorts had fairly similar academic preparation, as indicated by their average MCAT scores (Figure 1).

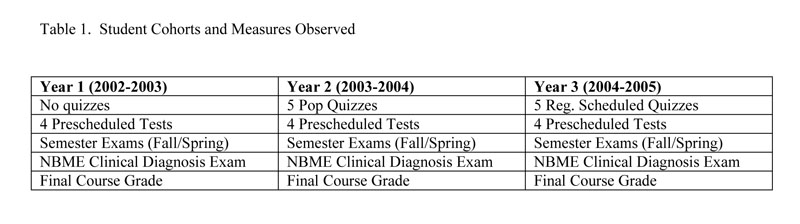

As shown in Table 1, three consecutive years of student data were used to compare scores on ICM-II course tests, semester exams, cumulative grades and the NBME Introduction to Clinical Diagnosis subject examination. The first cohort (n = 139) took no additional pop or scheduled quizzes. The second cohort (n = 132) completed five pop quizzes (10 points each), and the third cohort (n = 138) completed scheduled quizzes (10 points each) instead of pop quizzes. All students completed four scheduled 50-point tests, a fall semester exam, a cumulative spring exam and the NBME subject examination. A total of 700 points was possible at the end of the year for all three cohorts. During this three-year period, the structure of the pop quizzes, scheduled quizzes and exams did not change, nor did the course director, and the course content did not change significantly. The only difference in exam weighting involved the first cohort, whose spring semester exam and NBME subject examination were each worth 175 points rather than the 150 points each for the second and third cohorts. This change was made in response to student feedback that the NBME carried too much weight in their final grades; it reduced the weight of the NBME from 25% to 21% of the final grade.
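The point totals above can be checked with a short calculation. The following is a minimal Python sketch, not part of the original study materials. Two values are assumptions: the fall semester exam is taken to be worth 150 points in every cohort (the value implied by the 700-point total but not stated in the text), and the scheduled-quiz cohort is assumed to have taken five 10-point quizzes, as the pop-quiz cohort did.

```python
# Point allocation in the ICM-II course, per cohort.
# ASSUMPTIONS (not stated in the source): the fall semester exam is worth
# 150 points in every cohort, and the scheduled-quiz cohort took five
# 10-point quizzes, like the pop-quiz cohort.

TOTAL_POINTS = 700

COHORTS = {
    "cohort 1 (no quizzes)": {
        "tests": 4 * 50, "quizzes": 0,
        "fall_exam": 150, "spring_exam": 175, "nbme": 175,
    },
    "cohort 2 (pop quizzes)": {
        "tests": 4 * 50, "quizzes": 5 * 10,
        "fall_exam": 150, "spring_exam": 150, "nbme": 150,
    },
    "cohort 3 (scheduled quizzes)": {
        "tests": 4 * 50, "quizzes": 5 * 10,
        "fall_exam": 150, "spring_exam": 150, "nbme": 150,
    },
}


def nbme_weight(points: dict[str, int]) -> float:
    """Return the NBME share of the final grade, in percent."""
    total = sum(points.values())
    if total != TOTAL_POINTS:
        raise ValueError(f"expected {TOTAL_POINTS} points, got {total}")
    return 100 * points["nbme"] / total


if __name__ == "__main__":
    for name, points in COHORTS.items():
        print(f"{name}: total = {sum(points.values())}, "
              f"NBME weight = {nbme_weight(points):.1f}%")
```

With these values every cohort totals 700 points, and the NBME weight works out to 175/700 = 25.0% for the first cohort and 150/700 ≈ 21.4% for the later cohorts, matching the 25% and 21% figures reported above.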

The pop quiz and scheduled quiz questions were identical in the two years in which quizzes were given, and the question format was the same. The questions were balanced between recall and application of information. Most quiz questions were presented in clinical vignette format, similar to the NBME style of question writing, specifically to prepare students as fully as possible for this type of question. Below is an example question taken from the pop quizzes:

A 45-year-old woman presents to your clinic with a chief complaint of left-sided chest pain and shortness of breath that began one day prior. She states that the pain is constant and worse with deep breaths. She returned from a trip to Singapore 2 days ago. She denies fever, chills, nausea, vomiting, or cough. She is a smoker (2 ppd for 25 years) and has had hypertension for 10 years. The pain is not worse with exertion and does not radiate. There is no associated nausea or dizziness. On physical exam, her vital signs are HR 110, RR 20, and BP 154/99 in both arms. She is afebrile. You note clear lungs and a 2/6 holosystolic murmur at the left midclavicular line that radiates to the axilla, which the patient states is old. There is no S3, JVD, or peripheral edema.

1) What is the most likely cause of her chest pain?

a. Coronary artery disease

b. Pulmonary embolism (correct answer)

c. Aortic stenosis

d. Congestive heart failure

Analyses. Descriptive statistics, including means and standard deviations, were computed for ICM-II quiz totals (pop and scheduled), test score totals, and semester exam totals. Semester exam raw scores were converted to z-scores (ZExam) because the total points possible varied slightly over the study period. Likewise, because the NBME changed the way it reported scores during the study period, NBME scores were transformed to standard z-scores (ZNBME) for comparative analyses following methods previously described8. A one-way analysis of variance (ANOVA) with Tukey post hoc tests was used to compare mean test scores, overall course grades, ZExam scores, and ZNBME scores across the three cohorts. Quiz totals were compared between the two cohorts that took quizzes (pop vs. scheduled) using a one-way ANOVA without post hoc tests, which are unnecessary when only two groups are compared.
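The standardization and ANOVA steps described above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not the authors' analysis code: it standardizes scores as z = (x − μ)/σ against a reference mean and SD, and computes the one-way ANOVA statistic F = MS_between / MS_within with k − 1 and N − k degrees of freedom. The reference mean and SD and the cohort scores are hypothetical placeholders; the source does not say which reference distribution was used. The Tukey post hoc step is omitted here; SciPy's `scipy.stats.tukey_hsd` is one existing implementation that could supply it.

```python
from statistics import mean, pstdev


def z_scores(scores, ref_mean, ref_sd):
    """Standardize raw scores against a reference mean and SD."""
    return [(x - ref_mean) / ref_sd for x in scores]


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    if len(groups) < 2:
        raise ValueError("need at least two groups")
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g)
                    for g, m in zip(groups, group_means))
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Hypothetical raw exam scores for three small illustrative cohorts.
    cohorts = {
        "no quizzes": [118.0, 125.0, 131.0, 122.0],
        "pop quizzes": [110.0, 115.0, 120.0, 113.0],
        "scheduled quizzes": [116.0, 121.0, 127.0, 119.0],
    }
    # Placeholder reference: the pooled mean and SD of all hypothetical scores.
    pooled = [x for g in cohorts.values() for x in g]
    ref_mean, ref_sd = mean(pooled), pstdev(pooled)
    z_groups = [z_scores(g, ref_mean, ref_sd) for g in cohorts.values()]
    f_stat, df_b, df_w = one_way_anova(*z_groups)
    print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

Because every score in this sketch is standardized with the same reference mean and SD, the F statistic equals the one computed on the raw scores; standardization matters only when cohorts are measured on different scales, as with the changed point totals and NBME score reporting described above.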

RESULTS

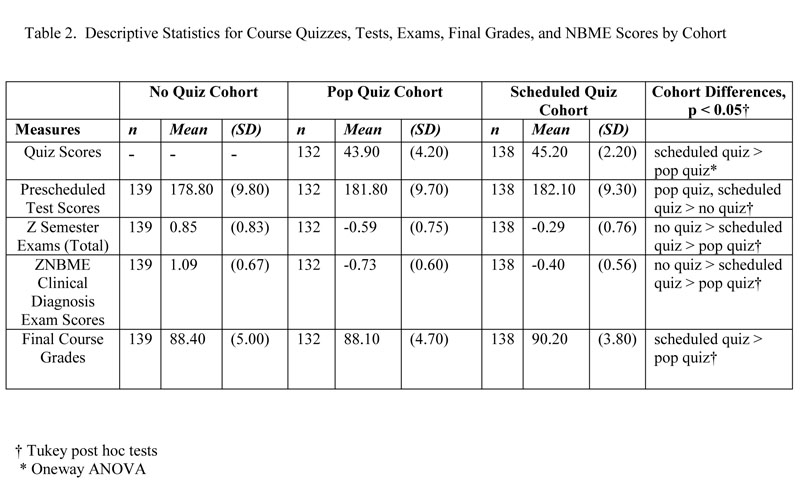

Descriptive statistics for quiz, exam and test score totals across the three cohorts are presented in Table 2. One-way ANOVA F-tests indicated significant differences in quiz totals (F(1, 269) = 14.87, p …

DISCUSSION

This study examined whether pop quizzes in a UME clinical skills course were associated with improved performance. We found no evidence that pop quizzes were useful for medical students in the ICM-II course. Of the three cohorts studied, students who took pop quizzes performed consistently worse in every area examined except ICM-II course tests. ICM-II test scores were highest for the cohort that took scheduled quizzes. Cumulative course grades were also highest for the scheduled-quiz cohort, whereas NBME scores were highest for the cohort that took no quizzes.

To our knowledge, this is the only published study that has examined the utility of pop quizzes in an undergraduate medical student population. In reviewing the empirical research on pop quizzes, we found some studies demonstrating that pop quizzes improve subsequent test scores9. In the current study, however, pop quizzes were not associated with significantly improved final course grades. Graham found that students in higher education settings favored pop quizzes because the quizzes motivated them to study9. However, anecdotal observations by the professor who taught the ICM-II course indicated that students experienced increased stress and frustration on days when pop quizzes were administered. This appeared to hinder interactive class discussion, and it may also have shifted the perceived locus of control for learning away from these goal-oriented learners. Medical students have traditionally been viewed as highly self-motivated learners, and the implementation of pop quizzes may have altered their normal study habits and learning styles.

Previous research has shown that pop quizzes are useful when they are offered for extra credit2 or in a short-answer format9. Our study used a traditional multiple-choice format, and in our setting this format was not associated with improved learning outcomes. Ruscio10 has shown that pop quizzes using short-answer or mini-essay questions produce more student involvement and deeper discussion, perhaps because such formats are more likely than multiple-choice quizzes to promote critical analysis and reflection. Unfortunately, time and class-size constraints may preclude short-answer or essay quizzes in the UME environment.

Strengths of this study include its large sample size and its comparison of quiz formats (pop vs. scheduled vs. none) across several cohorts, but there are limitations. This was a retrospective study and is thus limited to describing observations; we do not infer explanatory or causal relationships between the measured variables. The study was conducted at a single institution in a single course, which limits the generalizability of the findings. Although we did not measure or control for differences in the academic performance of individual students, average baseline MCAT scores suggest that the cohorts did not differ fundamentally in test-taking ability. The three classes examined were drawn from essentially the same demographic pool and admitted under the same standards each year.

Promoting a supportive learning environment with effective teaching and assessment methods is vital in undergraduate medical education, where classes are large, learning is fast-paced, and a great deal of material must be covered. In this study, overall course grades were highest for the cohort that took scheduled quizzes and lowest for the cohort that took pop quizzes, which suggests that scheduled quizzes, rather than pop quizzes, may help students keep up with their coursework and earn better final grades. NBME scores were highest for the cohort that took no quizzes and lowest for the cohort that took pop quizzes. For medical educators, this study highlights how seemingly intuitive classroom interventions (e.g., pop quizzes) can produce counterintuitive outcomes. Educators who continually assess their educational interventions are well positioned to provide evidence for strategies that promote optimal learning. Further critical analysis of traditional evaluation methods and their application in UME will provide data to help educators make informed course design decisions and, ultimately, better prepare medical students.

Acknowledgment: The authors would like to thank Karen Szauter, MD, for providing the inspiration for this study, Jennifer VanEcko, ICM-II course coordinator, for her administrative expertise, and Elizabeth Hicks for research support.

REFERENCES

- Kauffman, J. Pop quizzes: Theme and variations. The Teaching Professor. 1999;13:4.

- Carter, C., Gentry, J.A. Use of pop quizzes as an innovative strategy to promote critical thinking in nursing students. Nurse Educator. 2000;25:155.

- Anderson, J.E. Frequency of quizzes in a behavioral science course: An attempt to increase medical student study behavior. Teaching of Psychology. 1984;11:34.

- Kouyoumdjian, H. Influence of unannounced quizzes and cumulative exam on attendance and study behavior. Teaching of Psychology. 2004;31:110-111.

- Lay, W.E. Pop tests: Valuable instructional tools. NISOD Innovation Abstracts. 2002;24:1.

- Thorne, B.M. Extra credit exercise: A painless pop quiz. Teaching of Psychology. 2000;27:204-205.

- Streips, U.N., Virella, G., Greenberg, R.B., Blue, A., Marvin, F.M., Coleman, M.T., Carter, M.B. Analysis on the effects of block testing in the medical preclinical curriculum. Journal of the International Association of Medical Science Educators. 2006;16(1):10-18.

- Durning, S.J., Pangaro, L.N., Sweet, J., Wong, R.Y., Sealey, M.L., Nardino, R., Alper, E., Hogan, K., Hemmer, P.A. Clerkship sharing on inpatient internal medicine rotations: an emerging clerkship model. Teaching and Learning in Medicine. 2005;17(1):49-55.

- Graham, R.B. Unannounced quizzes raise test scores selectively for mid-range students. Teaching of Psychology. 1999;26:271-273.

- Ruscio, R. Administering quizzes at random to increase students’ reading. Teaching of Psychology. 2001;28:204-206.