ABSTRACT

Students find it difficult to see the relevance of some basic science course material, and have trouble integrating it, when it is taught in isolation from the clinical courses. Integrating disparate material is perceived to be a challenge, and the problem is compounded by assessments that test a single discipline at a time, mostly in a multiple-choice format. Therefore, the theory assessments in Years I and II were changed to a case-based, integrated format with extended-matching and short-answer questions. This article describes the development of case-based theory exams involving simultaneous testing of all material taught in a module. Specific steps involving the process of building common cases across disciplines, question construction, faculty coordination and quality assurance are described.

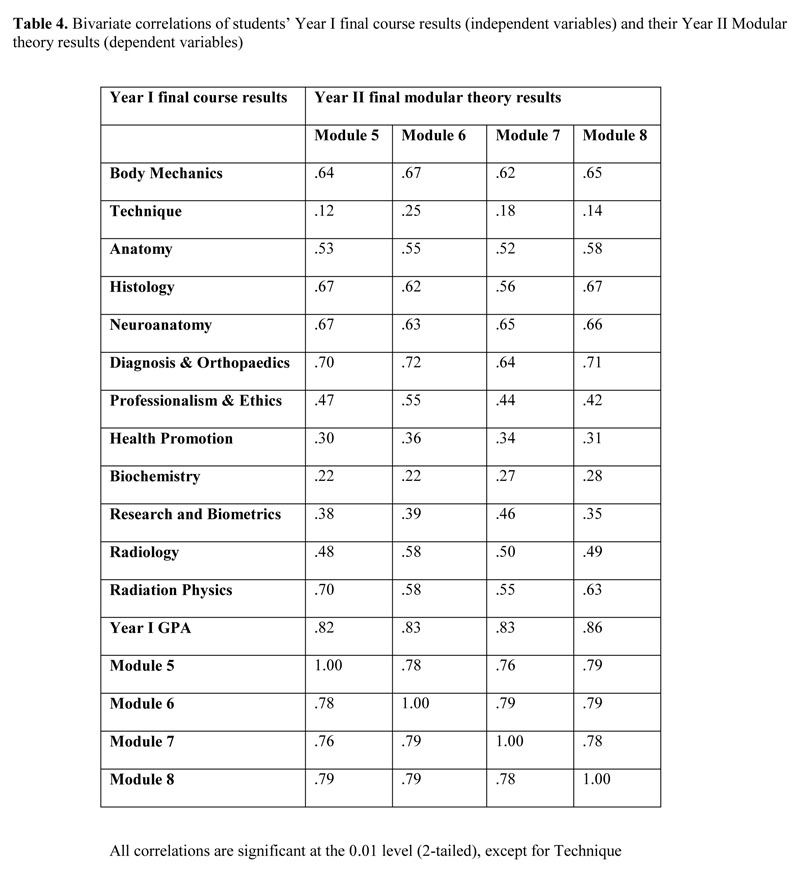

This paper also addresses the quality of these integrated exams over the first academic year of implementation. Internal consistency across the different exams was acceptable, with Cronbach’s alpha between 0.75 and 0.89 and split-half values between 0.69 and 0.93. Correlations between modular results, and between Year I discipline-based results and Year II modular results, indicated some measure of construct validity, a finding supported by backward stepwise multiple linear regression analyses.

The new assessment format is resource intensive but addresses issues of clinical relevance and integration. The fairly sound psychometric properties of the exams support the high-stakes decisions that we have to make on the basis of our examination results.

INTRODUCTION

Traditional undergraduate health education exemplifies non-integration: quite often, every hour on the hour, a different lecturer walks into the classroom and teaches his or her own subject as though it were the most important subject in the program. These lecturers cover widely different content and have different teaching styles and varying expectations of the learners. How does a student take all this information, bring it together, and combine the knowledge and skills, particularly from the basic sciences, into the reality of professional practice?1-4 This challenge becomes even more serious when single courses are assessed separately, mostly in a multiple-choice question (MCQ) format of varying question-writing depth and style,5-7 with assessments scattered throughout the year and often competing with each other,7,8 although at some schools block testing has obviated this particular problem.7

A new curriculum at the Canadian Memorial Chiropractic College (CMCC) introduced in 1999 was specifically designed to improve horizontal and vertical integration by arranging the first three years of a four-year health professional curriculum according to anatomical region and by the early introduction of clinical sciences. However, formal9,10 as well as informal student feedback indicated that the taught curriculum was not as well integrated as anticipated. In order to get an idea of how well our curriculum was integrated, we evaluated it against the integration ladder developed by Harden (2000)3. This integration ladder, which is a tool that can be used for curriculum planning and evaluation, has 11 possible steps from the lowest level of integration, called Isolation, through Awareness, Harmonization, Nesting, Temporal Coordination, Sharing, Correlation, Complementary, Multidisciplinary, Interdisciplinary, to Transdisciplinary, the highest level of integration. This review indicated that we were still mostly operating at Step 1 or “Isolation” – a situation where individual lecturers organize their own courses, determining the content as well as the depth to which the course will be covered and developing their assessments in isolation without knowing what others are teaching.

The students also indicated that they had difficulty seeing the relevance of much of the taught material, and they expressed dissatisfaction with assessment methods that tested mostly recall and short-term memory through the commonly used MCQ format. Assessment-related stress caused by a large number of separate course examinations was also a concern.11

In order to improve the process of integration, instruction in Year III was changed at the beginning of the 2002-2003 academic year into a modular system with nine modules each culminating in a week of theory and practical assessments. This modular system has now been in operation for four academic years. At the beginning of the 2005-2006 academic year, a modular system was also introduced in Years I and II with each year being divided into four modules. This model is similar to that described by Streips et al7 in that courses are taught as before during the module, but, instead of discipline-based assessments scattered throughout most of the year, each module is followed by two weeks of both theory and practical assessments.

Assessment strongly affects student learning, and clinical cases can help students associate course material with “real” patient situations and thus improve relevance and retention.2 Therefore we decided to change the modular theory assessments into a case-based format using extended-matching (EMQs) and short-answer (S-A) questions instead of MCQs.12,13 It was anticipated that these assessments would also lead to better sequencing and integration of course material when faculty collaborate on the development of cases and trans-disciplinary questions. This article describes the development and implementation of integrated modular theory exams for Years I and II of the program.

MATERIALS AND METHODS

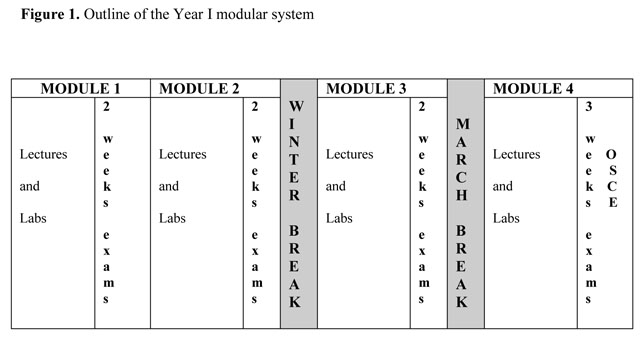

The modular system for Years I and II included two weeks of assessment at the end of each of four modules (Figure 1), except for the last module where the assessment period was three weeks long to accommodate an Objective Structured Clinical Examination (OSCE). In order to give the students the opportunity to have a complete break from their studies over the winter and March break periods, the first two modules were shorter than the last two modules of the year (Figure 1). Although the assessment period at the end of each module also included practical examinations as well as Objective Structured Practical Examinations (OSPEs), this paper describes only the three theory examinations written at the end of each module.

Each theory exam included questions from all courses. If a module, for example, was nine weeks long, Exam 1 covered predominantly the work taught during weeks one to three, Exam 2 predominantly the work taught during weeks four to six, and Exam 3 predominantly the work taught during weeks seven to nine. The number of exam questions per course was prorated to the instruction time, usually two to three questions per lecture hour. The questions could be either in S-A or EMQ format. Each exam had a total score of at least 100. The results of the three exams were averaged for a final theory percentage per module.
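The prorating rule above (question count proportional to instruction time, with each exam worth at least 100 marks) can be sketched as follows. This is a minimal illustration, not the procedure actually used: the course names, lecture hours, the rate of 2.5 questions per hour (a midpoint of the stated two to three), and the one-mark-per-question default are all hypothetical.

```python
from __future__ import annotations


def prorate_questions(lecture_hours: dict[str, float], per_hour: float = 2.5) -> dict[str, int]:
    """Allocate questions to each course in proportion to its lecture hours.

    per_hour is a hypothetical midpoint of the "two to three questions per
    lecture hour" described in the text.
    """
    return {course: round(hours * per_hour) for course, hours in lecture_hours.items()}


def meets_minimum(counts: dict[str, int], marks_per_question: int = 1, minimum: int = 100) -> bool:
    """Check that the exam reaches the stated minimum total score of 100.

    marks_per_question is an assumption; real items may carry different weights.
    """
    return sum(counts.values()) * marks_per_question >= minimum


if __name__ == "__main__":
    # Hypothetical lecture hours taught during one third of a module (Exam 1).
    hours = {"Anatomy": 20, "Histology": 6, "Radiology": 10, "Nutrition": 4}
    counts = prorate_questions(hours)
    print(counts, meets_minimum(counts))
```

Rounding per course keeps each discipline's share close to its teaching time; in practice an exam committee would still adjust counts by hand so that questions spread sensibly across the four cases.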

Development of the theory exams

The group responsible for developing the exam questions consisted of all faculty teaching in a particular module. Shortly after the start of each module, a meeting was held with all relevant teaching faculty and/or course coordinators. The learning outcomes of all courses taught during the module were made available to the attendees and at the start of the meeting, each attendee briefly described the content to be covered in his/her course during the module. This helped the individual faculty members better understand what was being taught overall. At the conclusion of this part of the meeting, the clinical conditions to be covered in the four cases for each exam (twelve in total) were decided. All the cases had to be relevant to practice.

Completed cases were circulated to all examiners to edit, review and modify so that they could link their own questions to them. For example, if nutrition questions on carbohydrates were to be asked, the faculty member might add to the case that the patient was a single parent who often bought fast food, or was diabetic. Each faculty member had to spread his/her questions across all four cases. They could also add extensions to a case, such as additional physical exam findings, a return visit some time in the future, or a question asked by the patient. Clinical relevance had to be emphasized, and some of the basic science faculty sought assistance from clinicians in this process. This process led to the development of a modified form of EMQs in which the four cases per exam served as the themes, with varying numbers of questions linked to them (see Appendix 1). To minimize the opportunity for guessing the correct answer, every option list had to contain between seven and twenty-six options.15
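Why longer option lists reduce guessing can be made concrete with a simple binomial model. The sketch below assumes every item has exactly one correct option, all items in the exam share the same number of options, and a student guesses blindly and independently on each item; real EMQs can have several correct options, so this is an illustration only. The 60% pass mark is taken from the absolute standard described later in the text.

```python
from math import comb


def chance_correct(n_options: int) -> float:
    """Probability that a blind guess on a single-best-answer item is correct."""
    if n_options < 2:
        raise ValueError("an item needs at least two options")
    return 1.0 / n_options


def prob_pass_by_guessing(n_items: int, n_options: int, pass_percent: int = 60) -> float:
    """Binomial probability of reaching the pass mark by blind guessing alone.

    Assumes independent, uniform guesses and one correct option per item.
    """
    p = chance_correct(n_options)
    # Integer ceiling of pass_percent% of n_items avoids floating-point rounding.
    k_min = -(-(pass_percent * n_items) // 100)
    return sum(
        comb(n_items, k) * p**k * (1 - p) ** (n_items - k)
        for k in range(k_min, n_items + 1)
    )


if __name__ == "__main__":
    # Compare a five-option MCQ with the shortest and longest permitted EMQ lists.
    for options in (5, 7, 26):
        print(options, round(chance_correct(options), 3), prob_pass_by_guessing(20, options))
```

Moving from five to seven options lowers the per-item chance from 0.20 to about 0.14, and the probability of reaching the pass mark by guessing alone falls much faster than the per-item chance because it sits in the tail of the distribution.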

Once all the questions had been submitted, they were checked for accuracy and then strategically sequenced after each of the four cases to mirror a “real life” patient encounter. Questions on history and the basic sciences were placed before the physical examination questions, followed by diagnosis, treatment and/or other management questions. At this stage, potential clinical inconsistencies were addressed (for example, ensuring that a pregnant patient was not sent for X-rays). The final step in this process was peer review by at least three faculty members.

Writing the tests

The “traditional” examination procedures were not changed. Students were randomly assigned to seats and three hours were allowed to complete each exam.

Quality assurance of results

Item difficulty and point-biserial correlations were analysed for all the extended-matching questions in each exam. Poorly discriminating questions were flagged and referred to the relevant faculty members for review and comment. After the faculty members had responded, corrections were made, either by adding an extra correct option worth a full or part mark, or by deleting a few poorly discriminating questions. On the final set of questions in each exam, difficulty levels, Spearman-Brown, Kuder-Richardson 20 and 21, standard errors, and Cronbach’s alpha were calculated by means of the Performance Evaluation Technology (PET Version 4.1399, TDA Inc., Burlington, ON, Canada). All other statistical analyses were performed with the Statistical Package for the Social Sciences (SPSS) Version 14.
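The item analysis described above can be sketched in plain Python for dichotomously scored (0/1) items. This is not how PET computes its statistics: the uncorrected point-biserial (item included in the total score) and the flagging threshold of 0.15 are assumptions chosen for illustration, and `flag_items` is a hypothetical helper name.

```python
from math import sqrt
from statistics import mean, pstdev


def item_difficulty(scores, item):
    """Proportion of students answering the item correctly (the item p-value).

    scores is a list of rows, one per student, each a list of 0/1 item scores.
    """
    return mean(row[item] for row in scores)


def point_biserial(scores, item):
    """Uncorrected point-biserial correlation between one 0/1 item and total score."""
    totals = [sum(row) for row in scores]
    p = item_difficulty(scores, item)
    s = pstdev(totals)
    if p in (0, 1) or s == 0:
        return 0.0  # an item everyone got right (or wrong) cannot discriminate
    m1 = mean(t for t, row in zip(totals, scores) if row[item] == 1)
    m0 = mean(t for t, row in zip(totals, scores) if row[item] == 0)
    return (m1 - m0) / s * sqrt(p * (1 - p))


def flag_items(scores, min_rpb=0.15):
    """Return indices of items whose point-biserial falls below a hypothetical cut-off."""
    return [j for j in range(len(scores[0])) if point_biserial(scores, j) < min_rpb]


if __name__ == "__main__":
    # Tiny made-up response matrix: five students by four items.
    data = [[1, 1, 1, 0], [1, 1, 0, 0], [1, 0, 0, 1], [0, 0, 0, 1], [1, 1, 1, 1]]
    print([round(item_difficulty(data, j), 2) for j in range(4)])
    print(flag_items(data))
```

In the toy data, the last item is answered correctly mainly by weaker students, so its point-biserial is negative and it is flagged, which is the kind of item that would be referred back to its author for review.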

Overall pass/fail performance for each exam was determined. After averaging the three exams to obtain one final theory percentage, descriptive statistics of the results were calculated. An absolute standard was applied in that all students who scored below 60% failed. The percentages obtained for each individual discipline in each exam were also calculated and published in separate category reports, both to assist the students in their self-directed learning and to flag poorly performing students in each discipline for the relevant lecturers. Although not used for promotion purposes, the individual scores per discipline were also tracked throughout the year.
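The scoring rules in this paragraph (average the three exams, fail below 60%, report per-discipline percentages) are simple enough to express directly. The sketch below is a hypothetical illustration: the input shape of `category_report`, a list of (discipline, marks awarded, marks possible) tuples for one student, is an assumption and not the format of the actual category reports.

```python
from statistics import mean

PASS_MARK = 60.0  # absolute standard from the text: below 60% fails


def final_theory_percentage(exam_percentages):
    """Average of the three modular theory exam percentages."""
    if len(exam_percentages) != 3:
        raise ValueError("expected exactly three exam results")
    return mean(exam_percentages)


def passed(percentage):
    """True if the final theory percentage meets the absolute standard."""
    return percentage >= PASS_MARK


def category_report(item_scores):
    """Per-discipline percentage for one student.

    item_scores: hypothetical list of (discipline, marks_awarded, marks_possible).
    """
    totals = {}
    for discipline, awarded, possible in item_scores:
        got, out_of = totals.get(discipline, (0.0, 0.0))
        totals[discipline] = (got + awarded, out_of + possible)
    return {d: 100.0 * got / out_of for d, (got, out_of) in totals.items()}


if __name__ == "__main__":
    final = final_theory_percentage([58.0, 61.0, 62.0])
    print(round(final, 1), passed(final))
    print(category_report([("Anatomy", 3, 4), ("Anatomy", 1, 2), ("Histology", 2, 2)]))
```

Because only the averaged percentage drives the pass/fail decision, a weak result in one discipline on one exam is reported to the student and lecturer but does not on its own determine promotion.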

Pearson product-moment correlation coefficients (r) were calculated to describe the magnitude of the relations between the modular theory results within each year of study. For the Year II students, the results of their Year I individual course assessments were also available, and correlation coefficients between their Year I final course results (independent variables) and their Year II modular theory results (dependent variables) were calculated. From these same results, a backward stepwise multiple linear regression was performed for each of the four outcome (dependent) variables: the average theory results obtained in Year II Modules 5, 6, 7, and 8. The eight input (independent) variables included in the final analysis were the final Year I results in Anatomy, Body Mechanics, Diagnosis/Orthopaedics, Histology, Neuroanatomy, Radiology and Radiation Physics, as well as the Year I GPA.
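For readers who want to reproduce the correlation step outside SPSS, the Pearson coefficient and the t statistic used to test it against zero can be written in a few lines. This sketch computes only the test statistic; judging significance (for example at the 0.01 level used in the Results) still requires comparing t with a critical value of the t distribution on n − 2 degrees of freedom, which is not computed here.

```python
from __future__ import annotations

from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two lists of student scores."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length lists with at least three students")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_for_r(r: float, n: int) -> float:
    """t statistic (df = n - 2) for testing whether r differs from zero."""
    if abs(r) >= 1.0:
        raise ValueError("t is undefined for |r| = 1")
    return r * sqrt((n - 2) / (1 - r * r))
```

With roughly 180 students per class, as mentioned in the Limitations section, even modest coefficients yield large t values, which is why the size of r, and not only its significance, carries the interpretive weight.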

RESULTS

Integration

Apart from promoting cooperation among faculty members,4 the assessment development process had many other positive outcomes, such as helping us move upwards on Harden’s Integration Ladder.3 The format of the exam planning meetings not only helped the individual faculty members better understand the overall educational outcomes, but also allowed each person to review whether his/her course material was optimally placed in a specific module. Incorrectly placed material could then be moved before the start of the next academic year, and unnecessary duplication of material removed.4 This portion of the planning meeting moved us from Step 1, or “Isolation”, to Step 2, or “Awareness”, and on to Step 3, or “Harmonization”, on the integration ladder.3

During the previous academic year, the exam planning meetings also stimulated lecturers to talk about prerequisites for their courses. It became apparent that “Nesting” (Step 4) was required and lecturers started working together to ensure an enriched learning experience for the students by talking about skills relating to other courses. For example, although the inflammatory response was normally only taught in Year II, a basic lecture on this topic, taught by a basic scientist, is now included as part of the Functional Recovery and Active Therapeutics I course. For the second academic year of using this system, some Histology lectures and a lab were moved from Anatomy in Year I to Immunology in Year II even though they are still taught by the Histology lecturer. Changes such as these are the beginning of “Sharing” or Step 6 on the integration ladder.3

Relevance

Another positive outcome of basing the adapted EMQs on four common clinical cases per exam was that the twelve cases per module, together with red and yellow flags, covered many of the major clinical disorders likely to be encountered in the anatomical regions covered by a module of five to nine weeks. This exam format also showed the students how the preclinical courses, in particular the basic sciences, fit into professional practice.2,4,6

Feasibility

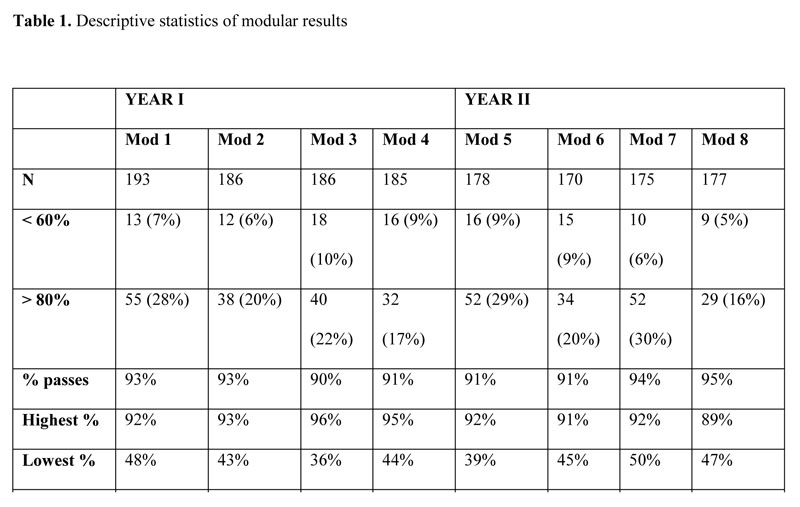

The theory examinations proceeded smoothly and the students soon became familiar with the EMQ and S-A question formats. They indicated that the three hours allocated per exam were more than enough. The mean for every module in the two years was over 70% and the pass percentage was consistently over 90% (Table 1).

Reliability

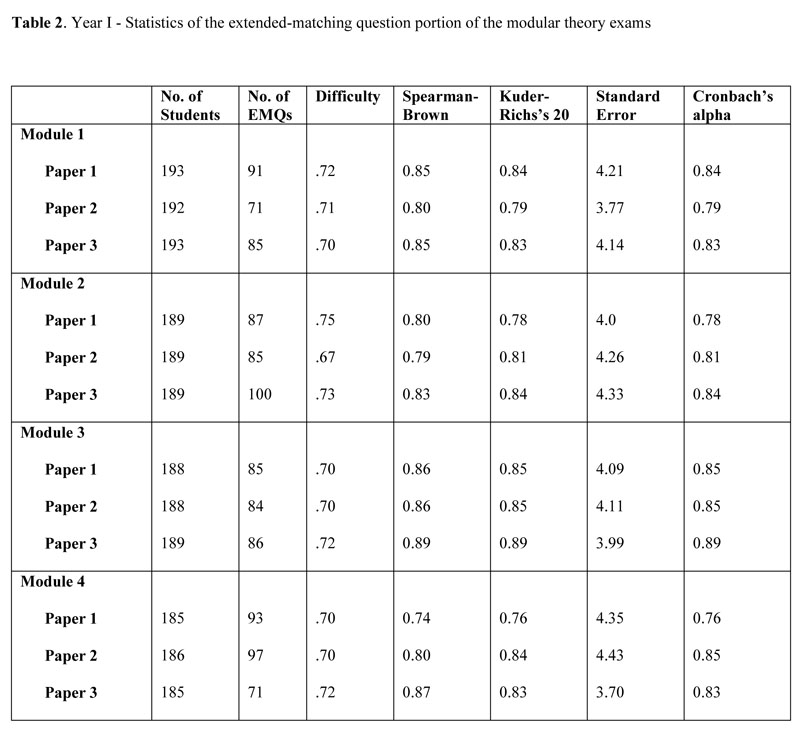

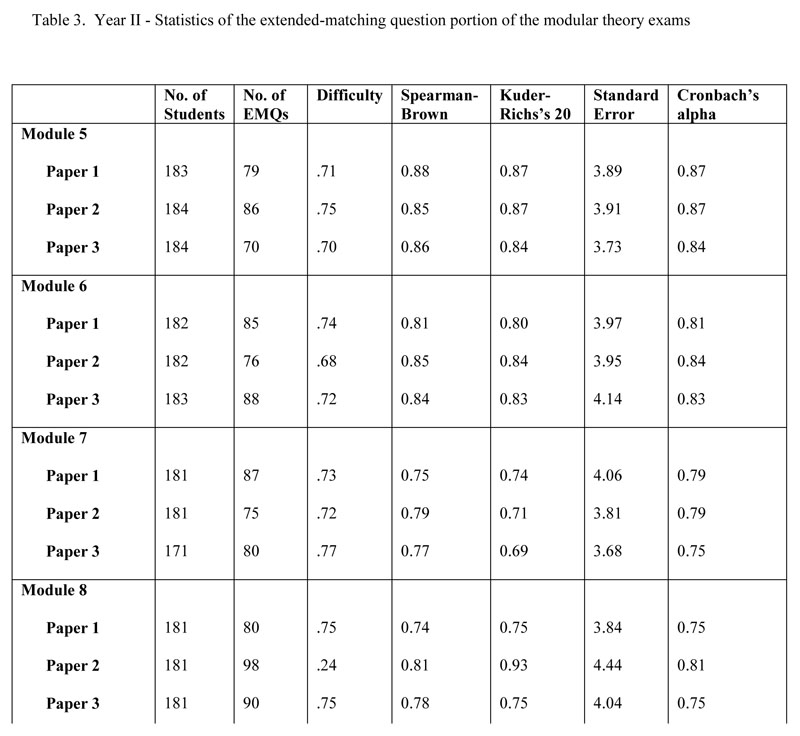

Depending on the number of short-answer questions included in each exam, and after elimination of poorly discriminating questions, the number of EMQs per exam varied between 70 and 100 (Tables 2 and 3). Internal consistency (Cronbach’s alpha) was between 0.75 and 0.89, and both split-half (Spearman-Brown) and Kuder-Richardson 20 values were between 0.69 and 0.93 for the exams written (Tables 2 and 3).
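The reliability coefficients reported above can be computed from a student-by-item score matrix. The sketch below is a textbook implementation under stated assumptions, not a reproduction of PET's calculations: it uses population variances throughout, splits the test into odd and even items for the split-half estimate, and treats KR-20 and KR-21 as applying only to 0/1 items. For dichotomous items Cronbach’s alpha and KR-20 coincide, and KR-21 (which assumes equal item difficulty) is never larger than KR-20.

```python
from math import sqrt
from statistics import mean, pvariance


def _pearson(x, y):
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def _check(scores):
    """Return item count and total scores; rows are students, columns are items."""
    k = len(scores[0])
    totals = [sum(row) for row in scores]
    if k < 2 or pvariance(totals) == 0:
        raise ValueError("need at least two items and non-zero total-score variance")
    return k, totals


def cronbach_alpha(scores):
    k, totals = _check(scores)
    item_var = sum(pvariance([row[j] for row in scores]) for j in range(k))
    return k / (k - 1) * (1 - item_var / pvariance(totals))


def kr20(scores):
    """KR-20 for 0/1 items: item variance is p * (1 - p)."""
    k, totals = _check(scores)
    pq = 0.0
    for j in range(k):
        p = mean(row[j] for row in scores)
        pq += p * (1 - p)
    return k / (k - 1) * (1 - pq / pvariance(totals))


def kr21(scores):
    """KR-21: needs only the mean and variance of total scores."""
    k, totals = _check(scores)
    m, v = mean(totals), pvariance(totals)
    return k / (k - 1) * (1 - m * (k - m) / (k * v))


def split_half_spearman_brown(scores):
    """Odd-even split-half correlation stepped up to full length."""
    odd = [sum(row[0::2]) for row in scores]
    even = [sum(row[1::2]) for row in scores]
    r = _pearson(odd, even)
    return 2 * r / (1 + r)
```

An odd-even split is only one of many possible splits, which is one reason split-half values can differ from alpha, since alpha corresponds to the average over all possible splits.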

Validity

To investigate the construct validity of the modular theory examinations in Year I, the final module results were correlated with each other. The Pearson correlations between the results of Modules 1, 2, 3 and 4 were all significant, ranging from 0.75 to 0.87. Correlations with other Year I assessments were also calculated and were statistically significant: between 0.63 and 0.78 with the final Biological Sciences OSPE, between 0.40 and 0.52 with the final Radiology OSPE, and between 0.44 and 0.50 with the end-of-year OSCE.

Similar results were obtained for Year II (Table 4), with Pearson correlations between the results of Modules 5, 6, 7, and 8 of between 0.76 and 0.79. For the Year II students, their Year I results were also available, and these correlated significantly with their Year II modular results (Table 4). The Year I GPA correlated between 0.82 and 0.86 with the different modules, the correlation increasing over the year from 0.82 for Module 5 to 0.86 for Module 8. Correlations between the Year II modular theory results and the Year I Technique practical assessment results were only between 0.12 and 0.25 and were not significant at the 0.01 level.

The backward stepwise multiple linear regressions all reached convergence in seven iterations or fewer. After six iterations, 69% of the variance in Module 5 (R2 = 0.687) was explained by the independent variables Year I GPA, Radiation Physics and Radiology (in order of importance). After six iterations, 69% of the variance in Module 6 (R2 = 0.691) was explained by Year I GPA, Diagnosis and Orthopaedics, and Body Mechanics. After four iterations, 71% of the variance in Module 7 (R2 = 0.711) was explained by Year I GPA, Radiation Physics, Radiology, and Anatomy. After seven iterations, 74% of the variance in Module 8 (R2 = 0.737) was explained by Year I GPA and Radiology.
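The backward elimination procedure behind these results can be sketched without a statistics package. Each iteration fits the model, computes the F-to-remove for every remaining predictor, (R²full − R²without) / ((1 − R²full) / (n − p − 1)), and drops the weakest predictor until every remaining F exceeds a threshold. This is a simplified stand-in for SPSS, not its implementation: the fixed threshold of 2.71 (roughly p = 0.10 in large samples) replaces a probability-based removal criterion, and the predictor names in the usage example are hypothetical.

```python
from __future__ import annotations


def _solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        if abs(m[col][col]) < 1e-12:
            raise ValueError("predictors are collinear")
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def r_squared(columns: list[list[float]], y: list[float]) -> float:
    """R^2 of an ordinary least-squares fit of y on the columns plus an intercept."""
    n = len(y)
    design = [[1.0] * n] + columns
    xtx = [[sum(a * b for a, b in zip(ci, cj)) for cj in design] for ci in design]
    xty = [sum(a * b for a, b in zip(ci, y)) for ci in design]
    beta = _solve(xtx, xty)
    fitted = [sum(b * col[i] for b, col in zip(beta, design)) for i in range(n)]
    mean_y = sum(y) / n
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    sst = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - sse / sst


def backward_eliminate(predictors: dict[str, list[float]], y: list[float], f_out: float = 2.71):
    """Drop the predictor with the smallest F-to-remove until all exceed f_out."""
    kept = dict(predictors)
    n = len(y)
    while kept:
        r2_full = r_squared(list(kept.values()), y)
        df_resid = n - len(kept) - 1
        if df_resid <= 0 or r2_full >= 1.0 - 1e-12:
            break
        resid_share = (1.0 - r2_full) / df_resid
        f_to_remove = {
            name: (r2_full - r_squared([v for k, v in kept.items() if k != name], y)) / resid_share
            for name in kept
        }
        weakest = min(f_to_remove, key=f_to_remove.get)
        if f_to_remove[weakest] >= f_out:
            break
        del kept[weakest]
    final_r2 = r_squared(list(kept.values()), y) if kept else 0.0
    return list(kept), final_r2
```

For example, `backward_eliminate({"gpa": gpa, "radiology": rad}, module5)` (hypothetical lists of equal length) returns the surviving predictor names and the final R², the quantity reported above as the proportion of variance explained.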

DISCUSSION

This report describes the development and introduction of integrated modular theory examinations into an undergraduate health professional curriculum. Similar to the block testing introduced by two medical schools in the USA and described in a recent paper,7 our modular system was designed to move away from frequent individual discipline assessments by grouping assessments into a dedicated period at the end of each module. This was intended to give the students an uninterrupted period of five to nine weeks to concentrate on their learning, “digest” the content, and move towards an understanding of the relevance of, and integration between, the different courses taught during the module.

The assessment development process

The process followed to develop these integrated theory exams was found to be resource intensive from both faculty and support perspectives. The value of changing to case-based questions is well supported by the literature,2,4,6,8 and evidence exists that EMQs have many advantages over MCQs. EMQs are viewed as a more “fair” format, with better item discrimination,12 and with the ability to measure degrees of expertise.13 However, it was anticipated that faculty reaction to this change might not be positive.4,6,7,11,14

Modular assessments are not new to our institution, as a modular system had been in place for Year III since the beginning of the 2002-2003 academic year. In the Year III modular theory exams, each examiner developed his/her own cases and questions in the EMQ or S-A format, and assessment integration was mostly of the “stapled” variety.2 The implementation of the Year III modular system went smoothly, with faculty members quickly adapting to the new assessment format, even though some concerns regarding increased workload were expressed to the Dean.

The recent assessment changes in Years I and II, however, affected many more faculty members, particularly from the basic sciences. Some of the faculty members were initially not comfortable with the new format and most expressed concern at the increased workload caused by having to develop new questions.4,6,7,11,14 As the academic year progressed, some faculty members appeared to become more positive which supports the experience at other institutions implementing change.7 The majority of the faculty adapted and wrote creative questions, perhaps realizing that they were starting to develop a new bank of questions which would decrease their workload over the next few years. This result is similar to that reported in the literature11 indicating that exam setting time was reduced in subsequent years.

Exposure to the case-based questions very soon led to a request from the students that all courses should now be taught in a case-based format, and for some faculty members this request added significantly to their stress levels. To assist faculty members in this task, faculty development workshops on case-based teaching have since been offered.

As Harden (2000)3 describes, moving up the integration ladder requires more central organization and resources and more “communication and joint planning between teachers from different subjects.” In this regard, we found our exam planning meetings very useful. Over the year, as faculty members came to understand the process better, the meetings became shorter and more work was done by e-mail. However, one meeting at the beginning of each module remained an absolute necessity. It is important that these meetings be driven by someone from the Dean’s Office, in our case the relevant Education Coordinator; in our experience, ownership of the process is crucial to success. Adequate administrative assistance was also essential, as putting together these integrated exams is time-consuming and requires a good understanding of the process. Skilled administrative assistance saved the reviewers many hours of work.

The quality of the integrated assessments

Reliability

In order to allow wide sampling of content across the discipline material taught in a module, and to reduce the error caused by task variability to a minimum8 we had decided on three theory exams of at least 100 marks each to be written at the end of every module, thus a total of 300 marks or more. We also decided to use only the average of the three exams for promotion purposes, rather than the total of the individual discipline marks tracked from assessment to assessment over the year.4,7 This was in an attempt to average out unreliability problems that might occur in one or more areas of the assessments and which might be compounded when tracking individual discipline results over the year. When a final discipline result is made up from small numbers of questions at a time, unreliability could become a serious problem.8 The fairly good measures of internal consistency found in our individual modular exam papers (Tables 2 and 3) supported us in this decision. Adding together the results of three exam papers (235 – 272 EMQs plus S-A questions), with a testing time of nine hours8 and using the average of the three papers, should also improve reliability and support the making of high-stakes decisions on the basis of these results.
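The expected gain from pooling three papers can be illustrated with the Spearman-Brown prophecy formula, ρ* = nρ / (1 + (n − 1)ρ), where n is the factor by which test length is increased. The sketch below is a theoretical projection only: it assumes the three papers behave as parallel forms measuring the same construct, an assumption not tested in this study, so the projected values are illustrative and not reported results.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Projected reliability when a test is lengthened by length_factor.

    Assumes the added material is parallel to the original (same construct,
    same item quality); this is an idealization, not a measured result.
    """
    if not 0.0 <= reliability < 1.0:
        raise ValueError("reliability must be in [0, 1)")
    return length_factor * reliability / (1 + (length_factor - 1) * reliability)


if __name__ == "__main__":
    # Project the lowest and highest single-paper alphas reported in the Results.
    for alpha in (0.75, 0.89):
        print(alpha, round(spearman_brown(alpha, 3), 3))
```

Under these assumptions, a single paper with an alpha of 0.75 projects to 0.90 at triple length, which is consistent with the reasoning above that combining three papers should support high-stakes decisions.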

Validity

Validity is not easy to demonstrate. However, the process followed in developing the questions should support a measure of content validity, as it ensured that the questions used in a module came from all courses taught in that module.15 A process to check the submitted questions against the learning outcomes16 to ensure a good spread is underway but has not yet been completed. However, the facts that assessments took place at five- to nine-week intervals and that at least 300 questions had to be developed at the end of each module suggest that most of the work taught during the module would be assessed.

Bridge et al16 described four primary principles on which the development of a content-valid test should be based. These include review by experts in the field, as well as the writing of high quality test items. Content validity of our exams would therefore also have been positively affected by the fact that we implemented peer review of the exams, as well as by workshops designed to help faculty develop their question writing skills.

The correlations found between the results that both Year I and Year II students achieved in the four modules per year, as well as between Year II modular results and the final marks that the same students obtained in their Year I exams the year before, are similar to others reported in the literature, and indicated some measure of construct validity.15

Of interest was the fact that the correlations between the Year I Technique practical scores and the Year II modular theory results of the same students were much weaker (0.12 to 0.25, not significant at the 0.01 level) than those between Year I theory results and Year II modular theory results. As different traits were measured in the practical and theory assessments, this result could also be an indication of validity in the new theory assessment method.8

Many published results are limited by relying only on univariate or bivariate statistical procedures. So, in order to further investigate the separate effects of the Year I results directly upon the criterion variables in terms of their regression coefficients, a multiple regression equation was developed for the average modular theory result in each of the four modules in Year II. The results obtained from these regression equations also indicated some measure of construct validity in the new format of assessment.

Limitations

Our new assessment format has limitations. Although most faculty members involved had previously attended workshops on EMQ writing, those workshops were held some time before test preparation began. To help faculty members improve their question-writing skills, two workshops were offered at the start of the new academic year. Faculty members in courses such as Professionalism, Ethics, and Health Promotion decided not to mark S-A questions for more than 180 students and therefore tried to write EMQs on material that could not easily be linked to patients, and they found it difficult to develop seven or more options. This difficulty is similar to that reported by other authors.15 We will be working with the faculty members involved to develop more creative ways to assess their courses.

CONCLUSIONS

According to van der Vleuten (1996),8 “perfect assessment is an illusion”. However, we are cautiously optimistic that our new assessment format is starting to address the perennial issues of relevance and integration, particularly of the basic sciences. For the first time this year, feedback from Year I and II students has shown an improvement in understanding the relevance of material taught in the basic sciences. The fairly sound psychometric properties of the exams described in this paper support the high-stakes decisions that we have to make on the basis of our examination results. The improvements in integration that came about through the assessment planning meetings were an added positive outcome.

REFERENCES

- Anderson, M.B., Swanson, A.G. Educating medical students – the ACME-TRI report with supplements. Academic Medicine. 1993; 68(Suppl.):S1-46.

- Schmidt, H. Integrating the teaching of basic sciences, clinical sciences, and biopsychosocial issues. Academic Medicine. 1998; 73(9):S24-S31.

- Harden, R.M. The integration ladder: a tool for curriculum planning and evaluation. Medical Education. 2000; 34:551-557.

- Ber, R. The CIP (comprehensive integrative puzzle) assessment method. Medical Teacher. 2003; 25(2):171-176.

- Hudson, J.N., Vernon-Roberts, J.M. Assessment – putting it all together. Medical Education. 2000; 34:947-958.

- Hudson, J.N., Tonkin, A.L. Evaluating the impact of moving from discipline-based to integrated assessment. Medical Education. 2004; 38:832-843.

- Streips, U.N., Virella, G., Greenberg, R.B., Blue, A., Marvin, F.M., Coleman, M.T., Carter, M.B. Analysis on the effects of block testing in the medical preclinical curriculum. Journal of the International Association of Medical Science Educators. 2006; 16:10-18.

- Van der Vleuten, C.P.M. The assessment of professional competence: developments, research and practical implications. Advances in Health Sciences Education. 1996; 1:41-67.

- Till, H. Identifying the perceived weaknesses of a new curriculum by means of the Dundee Ready Education Environment Measure (DREEM) Inventory. Medical Teacher. 2004; 26(1): 39-45.

- Till, H. Climate Studies: Can students’ perceptions of the ideal educational environment be of use for institutional planning and resource utilization? Medical Teacher. 2005; 27(4):332-337.

- Tonkin, A.L., Hudson, J.N. Integration of written assessment is possible in a hybrid curriculum. Medical Education. 2001; 35:1072-1073.

- Case, S.M., Swanson, D.B., Ripkey, D.R. Multiple-choice question strategies: Comparison of items in five-option and extended-matching formats for assessment of diagnostic skills. Academic Medicine. 1994; 69(10):S1-S3.

- Beullens, J., Struyf, E., Van Damme, B. Do extended-matching multiple-choice questions measure clinical reasoning? Medical Education. 2005; 39:410-417.

- Van der Vleuten, C.P.M., Schuwirth, L.W.T., Muijtjens, A.M.M., Thoben, A.J.N.M., Cohen-Schotanus, J., Van Boven, C.P.A. Cross institutional collaboration in assessment: a case on progress testing. Medical Teacher. 2004; 26(8):719-725.

- Beullens, J., Van Damme, B., Jaspaert, H., Janssen, P. Are extended-matching multiple-choice items appropriate for a final test in medical education? Medical Teacher. 2002; 24(4):390-395.

- Bridge, P.D., Musial, J., Frank, R., Roe, T., Sawilowsky, S. Measurement practices: methods for developing content-valid student examinations. Medical Teacher. 2003; 25(4):414-421.

Appendix 1

Case and sample questions

Jennifer, a 45-year-old woman, complained of “pins and needles” and a dull ache in her right shoulder, radiating down the posterolateral surfaces of the arm, forearm and hand. The pain had started 2 months earlier and had progressively worsened, affecting her ability to work full time and often waking her during the night. She also indicated that she had lost about 12 pounds since the pain started. Her family history revealed that her grandmother had suffered from breast cancer.

Examination revealed that the patient’s shoulder had lost its rounded contour and the entire upper limb was adducted. The forearm was more pronated and flexed than usual. The entire upper limb assumed a configuration analogous to the “waiter’s tip” position. You then listened to her chest and examined her thyroid gland. On examination of both breasts, a large mass was found in the upper lateral quadrant of the right breast, fixed to the surrounding connective and muscle tissues. The right nipple was higher than the left and inverted. A small dimple of skin was noted over the lump. Palpation of the axilla revealed enlarged, firm lymph nodes. The left breast was unremarkable. A lateral radiograph of the cervical spine showed metastases in the bodies of the 5th, 6th and 7th cervical vertebrae. A blood analysis revealed moderate anaemia. Biopsy of the lump tissue was recommended.

A diagnosis of carcinoma of the right breast with metastases into the cervical spine was made.

- You evaluate Jennifer’s right shoulder and on X-ray note that the humeral head is positioned more superiorly than expected. This could cause impaction of the head of the humerus onto which ligament? (List 2)

- Which muscle is the first to be invaded by the patient’s tumorous mass? (List 5)

- Which nerve is compromised if the patient’s shoulder lost its rounded contour? (List 6)

- Invasion of the basement membrane is the first step in the metastasis of this cancer from the breast to the cervical spine. Cancer cells must express a specific protein receptor in order to begin this process. Which protein receptor must the cancer cells express in order to penetrate the basement membrane? (List 10)

- What is the key characteristic of cancer cells that differentiates their growth pattern from that of normal cells? (List 9)

- Jennifer wants to know: Which of the currently used cancer immunotherapies is the safest and most successful? (List 16)

- What allows tumour cells to escape the attack of specific cytotoxic T lymphocytes? (List 16).

(List # refers to the relevant Option List)