ABSTRACT

Significant forces are converging to reshape the basic science medical curriculum, including advances in educational theory, advances in computer technology, and increased understanding of human disease processes. The explosion of information in the medical field and financial pressure on academic medical centers are stimulating significant change in the way that the basic science curriculum is delivered. This study was undertaken to incorporate these advances into the medical microscopic anatomy curriculum by transitioning from paper and pencil to computerized assessments of student learning. The goals included: modernization of the mode of student assessment on high stakes examinations, maintenance of high academic standards, maintenance of student performance, acquisition of student experience in computerized testing, and reduction in student and faculty time required for assessment. Design, implementation and evaluation of the transition were documented and analyzed. Freshmen medical students were given either written or computer assessments. Student performance was compared on identical items. There was no performance difference in the overall course or for most of the experimental items. The written format provided an advantage on 3% of the items, which were likely cued by proximal items. Student evaluation of the transition was positive. Students felt better prepared for future computerized examinations. Student satisfaction with instant scoring of the examination was rated quite high. There was recovery of significant student and faculty time in the assessment process. There was student suspicion of the accuracy of item scoring by the computer. The concern was investigated and student satisfaction was obtained with addition of a minor modification to the programming. Overall, the transition to computerized assessments was successful and productive for both students and faculty.

INTRODUCTION

A dramatic increase in the depth and scope of biomedical knowledge has led to significant changes in the medical curriculum in the basic sciences1-3. These changes provide educators a timely opportunity to incorporate advances in computer technology4,5, educational theory6, and understanding of human disease7 in a new era of excellence in medical education.

We applied these advances in a focused manner to successfully transition the high stakes examinations in a freshman basic science course in the medical curriculum from a paper and pencil (i.e., written) format to a computerized format. Computerized assessment of student learning was designed, implemented and evaluated in the context of the medical microscopic anatomy course at the University of Arkansas for Medical Sciences. The course content encompassed histology, cell biology and embryology. The purpose of this study was to determine whether students would perform equivalently on identical items presented in either written or computerized format in the course examinations, to document student and faculty attitudes about the change to computerization of the major examinations, and to identify key elements of a successful transition in this educational context.

Assessment of student learning is essential to the educational process to determine if learning goals are being achieved. Student assessment is also central to curricular development and evaluation of curricular changes. Assessment can be performed by several modalities, but in the basic biomedical sciences it has been traditionally accomplished by evaluation of student performance on paper and pencil written examinations. However, students are required to complete the U.S. Medical Licensing Examination (USMLE) on the computer and, thus, students require educational preparation for the computerized assessment modality8,9. Further, computerized assessment can overcome several of the disadvantages of written examinations including: errors in answer transcription by students, criteria for item scoring, and the time and delay inherent to grading of answer sheets; limited incorporation of high resolution, realistic images; and, in particular, the commitment of time and effort on the part of faculty, and the constraints imposed on student and faculty schedules10. As a result, computerized assessment holds promise for improvements in student learning, and also student-focused curricular development, as well as productive investment of student and faculty time, and student preparation for the USMLE8,9.

Although it is generally recognized that equivalent paper and computer examinations can be prepared8,11,12, it remains essential for the change from paper to computer to be evaluated scientifically for positive or negative effects, in specific contexts, with specific test materials and specific student populations13. However, to our knowledge, there is no report with statistical comparison of a successful transition of high stakes assessments from traditional written examinations to computerized examinations in basic science courses in the medical curriculum. Because significant faculty and institutional investment is required to implement the change to computerized assessments in medical education14, the results reported here may serve as a resource for others pursuing a similar path.

MATERIALS AND METHODS

Design. This study compared student performance on assessments in the medical microscopic anatomy course accomplished by written examinations in Year 1 (Y1) to computerized examinations in Year 2 (Y2). This study was reviewed and classified as exempt by the Institutional Review Board. Participants were the 154 freshmen students taking medical microscopic anatomy (composed of histology, cell biology, and embryology) in Y1 and the 149 freshmen students taking the class in Y2. The students had no prior or concurrent exposure to computerized examinations in the medical curriculum. The students were registered concurrently for two additional courses, gross anatomy and introduction to clinical medicine.

Intervention. The intervention in this study was the administration of 101 items, first, in paper and pencil format (Y1) and, then, in a computerized format (Y2). The content of each item was identical each year and the items were safeguarded in a secure database. The items were one-best-answer, multiple choice questions containing 1 correct and 4 incorrect responses in each item. The items did not contain visual images because of the inherent difficulty and expense to incorporate images into a paper and pencil written examination format. The 101 items were distributed throughout all of the major course examinations, 7 in Y1 and 5 in Y2. The examinations contained a total of 678 items in Y1 and 248 items in Y2.

The items were pre-tested in the year prior to this study to determine individual and group item statistics. The pre-test was performed using 151 comparable medical students in the same educational assessment context used in the intervention. Item inclusion in this study was based on difficulty at the medium level (average 75% of the students passing the item) for the group, item reliability of at least 0.85 for the group, and individual item discrimination index ≥ 0.25.
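The item statistics used for inclusion can be illustrated with a minimal sketch. It assumes that item difficulty is the proportion of students answering correctly and that the discrimination index is the upper-lower group difference computed on the top and bottom 27% of students ranked by total score. The source does not state which discrimination formula was used, so the 27% split, the function names, and the ten-student data set are illustrative assumptions only.

```python
from typing import Sequence


def item_difficulty(item_scores: Sequence[int]) -> float:
    """Proportion of students answering the item correctly (1 = correct, 0 = incorrect)."""
    return sum(item_scores) / len(item_scores)


def discrimination_index(item_scores: Sequence[int],
                         total_scores: Sequence[float],
                         fraction: float = 0.27) -> float:
    """Upper-lower discrimination index (assumed method, not confirmed by the source).

    Students are ranked by total examination score; the index is the proportion
    correct in the top group minus the proportion correct in the bottom group.
    """
    n = len(item_scores)
    k = max(1, round(n * fraction))  # size of each extreme group
    ranked = sorted(range(n), key=lambda i: total_scores[i])
    lower, upper = ranked[:k], ranked[-k:]
    p_upper = sum(item_scores[i] for i in upper) / k
    p_lower = sum(item_scores[i] for i in lower) / k
    return p_upper - p_lower


# Hypothetical responses to one item and hypothetical total scores for ten students.
item = [1, 1, 1, 1, 0, 1, 0, 1, 0, 0]
totals = [95, 90, 88, 85, 80, 78, 70, 65, 60, 50]

difficulty = item_difficulty(item)
discrimination = discrimination_index(item, totals)
# Threshold of 0.25 taken from the inclusion criterion stated in the text.
passes_discrimination = discrimination >= 0.25
print(f"difficulty={difficulty:.2f}, discrimination={discrimination:.2f}, "
      f"included={passes_discrimination}")
```

The group-level criteria (average difficulty near 75% and reliability of at least 0.85) would be evaluated over the full item set rather than per item, and are not shown here.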

Implementation. For paper and pencil examinations in Y1, the students were given a printed examination booklet and a scannable answer sheet on which answers were marked by filling in printed circles with a pencil. For written examinations, all 154 students sat for each assessment as a single group in a teaching laboratory. The students were allowed 2 hours to complete the examination, all examination booklets were collected, answer sheets were scanned by computer, and scores were posted within 72 hours. After the scores were posted, students were given the opportunity to briefly review the items in a proctored environment and submit a formal written appeal regarding the scoring of an item. Reviews were conducted in the teaching laboratory under the proctoring of the course faculty. The appeal process revealed that no scoring change was necessary for the items included in this study.

Prior to the first scored computerized examination in Y2, students were given the opportunity to participate in a technical training session, which included a simple, very short practice examination, to acquaint them with the computerized assessment design. Approximately 96% of the students participated in the computer training and completed the practice examination.

For computerized examinations, the students were divided into two groups of 72 students each because of a limited number of available computers. The two groups sat for the examination starting at either 8 am or 10 am, based on alphabetical order of last name, and the group start time was alternated for each subsequent assessment. The students sat at individual personal computers in a computerized testing laboratory and logged into the examination with individual coded passwords which changed for each examination session. Examinations were constructed using Authorware® testing software (MacroMedia). As a security feature, items were presented to each student in a random order to prevent simultaneous presentation of items on multiple computer monitors. Students could move back and forth in the examination to review and change the selected response to any item. The students were allowed 2 hours to complete the examination and, after submitting their responses for grading, they could elect to receive their score immediately on-screen.
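The per-student random ordering of items can be sketched as follows. This is not the Authorware implementation, which the source does not describe. It is a hypothetical illustration in which each student receives a reproducible shuffle seeded by the session and student identifiers, so that the order can be regenerated later during item review or an appeal. All names and identifiers are assumptions.

```python
import random
from typing import List


def presentation_order(item_ids: List[str], student_id: str, session_id: str) -> List[str]:
    """Return a per-student random ordering of the examination items.

    Seeding with the session and student identifiers makes the order
    reproducible without storing it (a design assumption for this sketch).
    """
    rng = random.Random(f"{session_id}:{student_id}")
    order = list(item_ids)  # copy so the master item list is left untouched
    rng.shuffle(order)
    return order


# Hypothetical item and student identifiers.
items = [f"item-{n:03d}" for n in range(1, 11)]
order_a = presentation_order(items, "student-A", "exam-1")
order_b = presentation_order(items, "student-B", "exam-1")
print(order_a)
print(order_b)
```

Because every student answers the same item set, scoring is unaffected by the order; only the on-screen sequence differs between neighboring monitors.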

A software program was developed to capture student keystrokes on each computer. This was necessary to document student response selection in order to alleviate student concern that the computer did not accurately record their selected responses. In addition, the software was re-programmed to slow down the rate at which a student could scroll through the items and review their responses. This was necessary to allow time for the software to display an item and record the unchanged or changed response before displaying the next item. After the assessment session, students were given the opportunity to briefly review the items and submit a formal written appeal regarding the scoring of an item. The review was conducted on computers in a proctored environment. Faculty review of the student appeals determined there was no change necessary for the scoring of any item included in the study.

Analysis. The class mean Medical College Admission Test (MCAT) score and mean undergraduate college grade point average (GPA) were compared for each class to determine the equivalence of the student groups. Also, the class mean percentage score and GPA in the medical microscopic anatomy course were compared for each class to evaluate overall course performance. The average difficulty and average discrimination of the items were compared between the two formats. These three statistical comparisons were done using independent sample t-tests. The level of significance was set at p=0.05 for each test.
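For readers unfamiliar with the independent sample t-test, a minimal sketch of the test statistic follows. It assumes the pooled-variance (equal variance) form; the source does not state whether a pooled or unequal-variance test was used, and the scores below are hypothetical.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def independent_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Pooled-variance independent samples t statistic and degrees of freedom."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pooled estimate of the common variance (assumes equal population variances).
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / standard_error, df


# Hypothetical scores for two small groups.
group_y1 = [2.0, 4.0, 6.0, 8.0]
group_y2 = [1.0, 3.0, 5.0, 7.0]
t_stat, dof = independent_t(group_y1, group_y2)
print(f"t = {t_stat:.3f} on {dof} degrees of freedom")
```

The p value is then read from the t distribution with the stated degrees of freedom and compared with the 0.05 threshold. A statistics package such as SciPy (`scipy.stats.ttest_ind`) would normally perform both steps.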

The proportion of students selecting the correct response for each item was analyzed to compare student performance on the two formats and to determine if one format was more advantageous for any specific item. The proportion of students answering correctly on each format was compared using a test for two independent proportions which is distributed as a z statistic. To correct for inflated Type I error, the significance was set at p=0.01 for each comparison.
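The test for two independent proportions can be sketched as below. The sketch assumes the usual pooled standard error and a two-sided p value from the standard normal distribution. The class sizes (154 and 149) come from the text, whereas the counts of correct responses are hypothetical and chosen only for illustration.

```python
import math
from typing import Tuple


def two_proportion_z(correct_1: int, n_1: int, correct_2: int, n_2: int) -> Tuple[float, float]:
    """z statistic and two-sided p value for the difference of two independent proportions."""
    p_1, p_2 = correct_1 / n_1, correct_2 / n_2
    pooled = (correct_1 + correct_2) / (n_1 + n_2)  # proportion under the null hypothesis
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_1 + 1 / n_2))
    z = (p_1 - p_2) / standard_error
    # Two-sided tail area of the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical item: 131 of 154 correct on paper (Y1), 104 of 149 correct on computer (Y2).
z_stat, p_val = two_proportion_z(131, 154, 104, 149)
differs = p_val < 0.01  # per-item significance level stated in the text
print(f"z = {z_stat:.2f}, p = {p_val:.4f}, significant at 0.01: {differs}")
```

Applying the function to each of the 101 items, with the stricter 0.01 threshold, reproduces the structure of the item-level comparison described above.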

Student evaluation was obtained at the end of the course by voluntary student completion of a standardized course evaluation which incorporated a Likert scale as well as ad lib comments. Questions regarding the computerized examinations were added to the course evaluation to document the student experience. Descriptive statistics of student ratings were calculated.

RESULTS

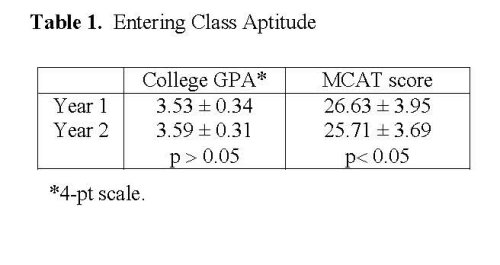

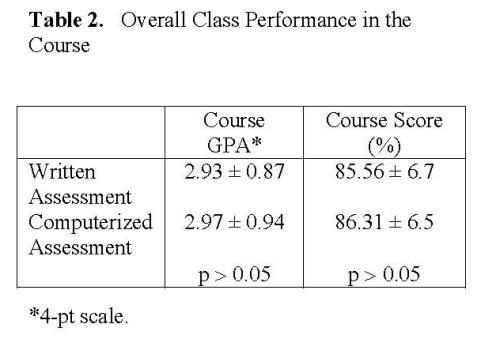

The aptitude of the two student populations was evaluated (Table 1). There was no statistical difference in the mean undergraduate college GPA. Students in the Y2 group had significantly lower MCAT scores. This was of initial concern because this group would be the first freshman class to encounter the computerized assessment format. Although the two groups differed statistically in MCAT performance, the numerical difference between them was less than one point and the groups were 0.24 standard deviation units apart. The practical meaning of this relatively small but statistically significant difference is not obvious. Further, the difference in entering MCAT score did not correspond to subsequent differences in performance in the course. There was no significant difference in student performance in the medical microscopic anatomy course with respect to the mean percent score or the mean course GPA (Table 2).
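A difference expressed in standard deviation units can be computed as a standardized mean difference. The sketch below assumes a pooled standard deviation (Cohen's d); the source does not state which standard deviation was used. The means and standard deviations are hypothetical placeholders, not the values in Table 1, and only the class sizes are taken from the text.

```python
import math


def standardized_difference(mean_1: float, sd_1: float, n_1: int,
                            mean_2: float, sd_2: float, n_2: int) -> float:
    """Difference between two group means in pooled standard deviation units."""
    pooled_sd = math.sqrt(((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2)
                          / (n_1 + n_2 - 2))
    return (mean_1 - mean_2) / pooled_sd


# Hypothetical summary statistics; class sizes 154 (Y1) and 149 (Y2) are from the text.
d = standardized_difference(10.0, 2.0, 154, 9.5, 2.0, 149)
print(f"standardized difference = {d:.2f}")
```

With classes of roughly 150 students each, a difference of this size can reach statistical significance while remaining small in practical terms.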

The average difficulty of the items as a group was similar (p>0.05) between the two years, 0.85 in Y1 and 0.82 in Y2. The average discrimination of the items as a group was also similar (p>0.05), 0.29 in Y1 and 0.28 in Y2.

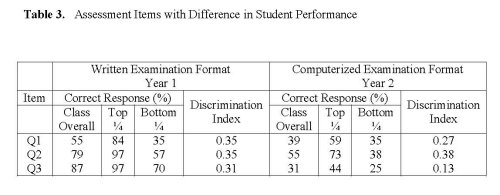

Student success in selection of the correct response was compared for the same set of 101 items. Students performed similarly on 97% of the items. However, three test items showed a statistically significant difference in class performance in the computerized format compared to the written format (Table 3). In these three cases, overall class performance was lower when the item was presented in the computerized format. Review of each of the three items suggested that the change in student performance may have been due to context cueing by conceptually related, near proximity items when the item was presented in the written format. Students were allowed full access to all questions and answers in the computerized examinations until they designated their examination as complete and received their score. This allowed the possibility of cueing as students reviewed the items. However, with random presentation of items in the computerized assessment, items were not presented in conceptually related clusters and as a result cueing was less likely.

As detailed in Table 3, the first item (Q1) with differential performance assessed a difficult concept that was cued for students in the top ¼ of the class by 10 proximal items on related concepts. Students in the bottom ¼ of the class did not benefit from the cued items; 35% selected the correct response in both formats.

Q2 and Q3 (Table 3) were two items on a related concept that were presented in immediate sequence on the written examination in Y1. They were neighbored by three additional items on related concepts. The cue was readily discernible by most students, including those in the top ¼ and bottom ¼ of the class.

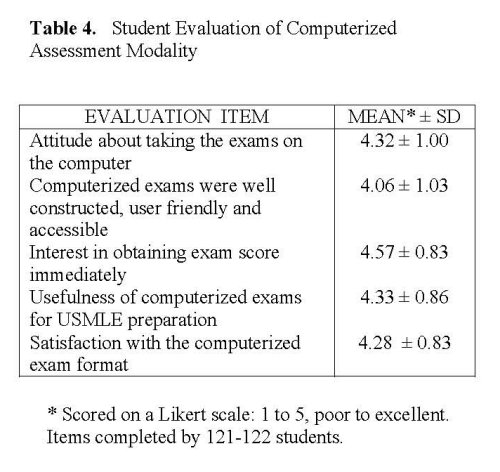

To capture feedback from the students about their experience with the computerized examinations, five evaluation items were added to the standard student evaluation of the course in Y2. Course evaluations were completed voluntarily by 122 students, representing 82% participation in the evaluation process. All items related to the computerized examinations were rated highly by the students, with a mean of 4.31/5.0 (Table 4). The overall course evaluation, which included 15 additional items not specific to the computerized examinations, was 4.10/5.0.

Comments about the computerized assessment experience were reported by students on the course evaluation. Descriptive comments about the course were provided by 58 students with 13 students providing specific comments about the computerized assessments. Two of the student comments contained apprehensive statements about the assessments and both related to distrust of the technology. These students wrote, “I don’t think the computer exams were always fair. There were times when I went back and could swear I never put that answer, but there was nothing I could really do about it.” and “I enjoyed taking the computerized exams, but I still feel that some of my answers might have been changed by the computer.” However, overall the students felt confident about the technology, “The computerized exams were a big topic this semester. I think that most of the issues with them were handled appropriately.”

The positive student evaluation comments focused on ease of reading the questions, preparation for the computerized format of the USMLE, and the ability to obtain instant scoring. “I loved the computerized exams. It was very easy to use and was actually a more relaxing way to take an exam.” “The computer tests were fantastic.” “I loved the computer exams, please keep them.” “I thought the computerized tests were a great idea. I loved knowing my grade immediately after submitting my final answers.” “I loved the computer exams and the instant grading.” “I think the computerized test is the way to go.” “I like having the exams on the computer.” “As for computer testing, I thought it was great and hope that more of our classes use the same approach.” “The computer exams are well designed and thought out.” “The computerized exams were good in that they are preparing us for the USMLE. I am kind of old fashioned and like the written tests, but since the USMLE is on a computer I want to be prepared and so I want to take more computer tests.”

DISCUSSION

Overall, this controlled study documented statistically that computerized assessment did not provide either an advantage or a disadvantage to a population of freshmen medical students taking microscopic anatomy. Student performance was evaluated in a paper and pencil written format versus a computerized format. Items were selected for inclusion in the study based on individual item statistics and group item statistics following pre-testing in a comparable population in the same academic and situational context. While the group performing the computerized tests could be argued to have had a slightly lower aptitude, as measured by MCAT score, that difference did not affect student performance on the assessment items or in the course overall. The two experimental groups were statistically comparable based on college GPA, course GPA, and performance on assessment items.

Student performance was similar on 97% of the identical items regardless of which format was used. However, it is noteworthy that student performance differed on 3 of the 101 items. On these three items, the written format may have conveyed an advantage because of potential cueing of correct responses by neighboring items with related subject content. This aspect of assessment design is often overlooked. The potential for cueing was identified from our review of the written examinations in Y1. Cueing is present to some extent in all examinations regardless of whether the format is paper and pencil or computer. Students were allowed full access to all questions and answers in the computerized examinations until they designated their examination as complete and received their score. This allowed the possibility of cueing as students reviewed the items. However, cueing may have been reduced in the computerized format because items were presented in a random order.

In both years, students were permitted 2 hr to complete each examination. There were 678 questions in 7 written examinations in Y1. There were 248 questions in 5 computerized examinations in Y2. Thus, students had more time per question in the computerized format. This design was employed intentionally in the first year of the computerized examinations to offset student tension about the computer interface. We observed that students did not require the additional time, with most students completing each session in one hour. The difference in time may have provided an advantage to the students in Y2, but no difference in performance was detected.
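The difference in available time per question follows directly from the figures above. A short calculation, assuming that the full 2 hr was available for every examination in both years, is:

```python
def minutes_per_item(num_exams: int, hours_per_exam: float, total_items: int) -> float:
    """Average minutes available per item across all examinations in a year."""
    return num_exams * hours_per_exam * 60 / total_items


# Figures taken from the text: 7 examinations with 678 items (Y1), 5 with 248 items (Y2).
y1_minutes = minutes_per_item(7, 2, 678)
y2_minutes = minutes_per_item(5, 2, 248)
print(f"Y1: {y1_minutes:.2f} min/item, Y2: {y2_minutes:.2f} min/item, "
      f"ratio: {y2_minutes / y1_minutes:.2f}")
```

Under this assumption, students had roughly 1.2 min per item in Y1 and 2.4 min per item in Y2, or about twice as much time per question in the computerized format.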

Student evaluation of the course provided insightful feedback on the transition. By the end of the course, students were almost unanimously pleased with the computerized examinations. Strong positive features from the student perspective included the use of computer technology for examinations, better preparation of test taking skills for the computerized testing format of the USMLE, and instant examination scoring.

The computer literacy of freshman medical students is very high, both at our institution and elsewhere15,16. In the year prior to this study, ≥ 85% of the entering medical class at our institution reported their level of computer expertise as ‘comfortable’ or ‘expert’, with no one reporting ‘no experience’17,18. Further, 82% of these students owned a computer, 78% of those who did not planned to purchase one, and 96% intended to use the Internet from home to access their medical coursework17,18.

Nonetheless, some students were clearly more comfortable with computer technology than others. While some students reported the computerized testing environment to be relaxing, others reported anxiety regarding the technological aspect of the experience. Our current finding that students strongly preferred computerized examinations over written examinations is supported by previous studies. Although other investigators13 observe that differences in student prior experience with computers do not affect performance on computerized assessments, the present study incorporated an opportunity for no-risk practice in the computerized assessment system, including completion of a practice examination.

Students indicated that the transition to the computerized format was useful to prepare them for other computerized examinations including the USMLE. Proficiency in computerized testing reduced anxiety about the assessment format of future examinations.

Student comments were generally favorable, and students were particularly pleased with the ability to receive a score immediately at the end of their session.

Previous reports suggested that students were displeased by computerized assessments that did not permit them to review and change their selected responses prior to submission for scoring13. Students indicated that the ability to review and change their responses was essential prior to scoring. This was true even though the ability to review and change responses did not appear to alter student performance13. Instant scoring is one of the potential benefits of computerized examinations compared to written examinations, and is easily incorporated into computerized assessment design. This feature was incorporated into the present study and was rated highly in the course evaluation.

During the first computerized assessment session, one student voiced concern that the selected responses were not accurately recorded by the scoring program. This led to similar concern on the part of other students. Validation of the concern was not obtained. Extensive assessment modeling and software testing by faculty and staff revealed that it might be possible to force the program to malfunction by very rapidly paging through screen views. The software was reprogrammed with a split-second delay between on-screen display of items. The programmed temporal delay was not perceptible during the assessment process. To confirm that the modifications were successful and that each student response was accurately recorded, a supplemental computer software program was written to capture keystrokes. No hardware or software error was documented based on keystroke analysis. The analytical problem-solving response to the student concerns was well received by students as indicated in the course evaluation. However, a couple of students continued to express distrust of the technology. This concern represented the only negative feedback from the students regarding this major transition in high stakes assessments.

A major goal of the transition to computerized assessment was happily realized in the form of recovered time for both students and faculty. Increased faculty and staff investment was required to establish the item database, evaluate item statistics, design the assessment protocol, implement the computerized format for the first time, and respond to student and faculty feedback. However, in subsequent years, the faculty recovered significant time and effort previously necessary for preparation, administration, proctoring, and scoring of assessments. Faculty reported satisfaction that high performance standards were maintained.

Students also recovered time and effort with the computerized assessments. Previously, lecture and laboratory content were assessed independently with the use of paper and pencil written examinations of lecture content (2 hr period) and separate microscope-based examinations of laboratory content (2 hr period). Integration of the content reduced the time needed for assessment of both lecture and laboratory content from a total of 4 to 2 hr. In particular, this reduced the burden on students who were scheduled for multiple course examinations in a single day. This represented a real change in strategy that likely allowed students to perform better on the multiplicity of high stakes assessments.

Another major goal of the transition was the opportunity for students to complete self-scheduled assessments. This was realized in the subsequent year when students began to perform self-scheduled assessments in the Learning Resource Center during a one week period. This change was positively received by students. Ultimately, successful computerized assessment with maintenance of high standards for student learning may allow extension of this medical curriculum into a distance education format. Online distance-delivery of the basic science medical curriculum will provide access for a larger, more diverse student population and, perhaps, positively influence diversity among practicing physicians.

The transition from paper and pencil to computerized assessment was successful for both students and faculty. The assessment modality was modified with minimal impact on student performance on individual items or in the course overall. Importantly, computerized assessment provided student practice in preparation for the subsequent USMLE. In addition, both faculty and students recovered significant time and effort. The modality has been further extended since the completion of this study to allow students to perform the assessments by computer on a self-scheduled basis. As a result of this study, the computerized assessment modality was successfully extended to two additional freshman medical courses the following year.

ACKNOWLEDGMENT

The authors thank Jean Chaunsumlit for entry of the item database and Elizabeth Hicks for topical literature search. CJMK acknowledges the UAMS Teaching Scholars program and Dr. Gwen V. Childs for provision of support, structure, guidance, and encouragement for this research.

REFERENCES

- Regehr, G. Trends in medical education research. Academic Medicine, 2004; 79: 939-947.

- Heidger, P.M., Jr., Dee, F., Consoer, D., Leaven, T., Duncan, J., and Kreiter, C. Integrated approach to teaching and testing in histology with real and virtual imaging. The Anatomical Record, Part B: The New Anatomist, 2002; 269: 107-112.

- Blake, C.A., Lavoie, H.A., and Millette, C.F. Teaching medical histology at the University of South Carolina School of Medicine: Transition to virtual slides and virtual microscopes. The Anatomical Record, Part B: The New Anatomist, 2003; 275: 196-206.

- Hastings, N.B., and Tracey, M.W. Does media affect learning: where are we now? TechTrends, 2005; 49: 28-30.

- Vozenilek, J., Huff, J.S., Reznek, M. and Gordon, J.A. See one, do one, teach one: advanced technology in medical education. Academic Emergency Medicine, 2004; 11: 1149-54.

- Sutherland, R., Armstrong, V., Barnes, S., Brawn, R., Breeze, N., Gall, M., Matthewman, S., Olivero, F., Taylor, A., Triggs, P., Wishart, J., and John, P. Transforming teaching and learning: embedding ICT into everyday classroom practices. Journal of Computer Assisted Learning, 2004; 20: 413-425.

- Sokol, A.J. and Molzen, C.J. The changing standard of care in medicine. E-health, medical errors, and technology add new obstacles. Journal of Legal Medicine, 2002; 23: 449-90.

- Ogilvie, R.W., Trusk, T.C., and Blue, A.V. Students’ attitudes towards computer testing in a basic science course. Medical Education, 1999; 33: 828-31.

- Guagnano, M.T., Merlitti, D., Manigrasso, M.R., Pace-Palitti, V., and Sensi, S. New medical licensing examination using computer-based case simulations and standardized patients. Academic Medicine, 2002; 77: 87-90.

- Papp, K.K. and Aron, D.C. Reflections on academic duties of medical school faculty. Medical Teacher, 2000; 22: 406-411.

- Lieberman, S.A., Frye, A.W., Litwins, S.D., Rasmusson, K.A. and Boulet, J.R. Introduction of patient video clips into computer-based testing: effects on item statistics and reliability estimates. Academic Medicine, 2003; 78: S48-51.

- Preckel, F. and Thiemann, H. Online- versus paper-pencil-version of a high potential intelligence test. Swiss Journal of Psychology, 2003; 62: 131-138.

- Luecht, R.M., Hadadi, A., Swanson, D.B., and Case, S.M. A comparative study of a comprehensive basic sciences test using paper-and-pencil and computerized formats. Academic Medicine, 1998; 73: S51-3.

- Cantillon, P., Irish, B., and Sales, D. Using computers for assessment in medicine. British Medical Journal, 2004; 329: 606-609.

- Seago, B.L., Schlesinger, J.B., and Hampton, C.L. Using a decade of data on medical student computer literacy for strategic planning. Journal of the Medical Library Association, 2002; 90: 202-209.

- Jastrow, H. and Hollinderbaumer, A. On the use and value of new media and how medical students assess their effectiveness in learning anatomy. The Anatomical Record, Part B: The New Anatomist, 2004; 280B: 20-29.

- Heard, J., Allen, R., and Schellhase, D. A comparison of computer skills of freshmen medical students and resident physicians. In Eighth International Conference on Medical Education and Assessment. 1998. Philadelphia.

- Hart, J. and Allen, R. Results of the LRC freshmen computer survey 1995-1999. 1999.