ABSTRACT

This manuscript describes assessment processes that can be used during the normal conduct of problem-based learning (PBL). The recent focus on assessment has led educators to consider different types of assessment methods designed to improve students’ performance. Scoring rubrics and Likert scale assessment forms can be used in both formative and summative assessments. Formative assessment can be an ongoing and integral part of the learning and improvement cycle. Summative assessment can be based upon patterns of performance toward the end of the course. Students’ written reports on their research into learning issues, and the integrative in-class discussions that follow this research, offer especially rich opportunities to assess students on many types of learning. Repeated observations of individuals working together throughout the in-class steps of the PBL process provide further opportunities for assessment. Appropriate assessors include faculty members, student peers, and the students themselves.

INTRODUCTION

Problem-based learning (PBL) has been used in medical education for over forty years. With the recent focus on assessment, educators have become more attuned to how students in PBL should be assessed. Assessment should be designed to improve students’ and instructors’ performance, leading to further student improvement.1 Feedback is an essential component of assessment. (Assessment can be contrasted with evaluation, which is often done to make pass-fail judgments about students.1) This paper discusses different ways students can be assessed on their learning while engaged in the PBL process.

Current trends in assessment emphasize using different types of assessments, including embedded and authentic assessments.1 In embedded assessment, the assessment of student progress and performance is integrated into the regular teaching and learning activities, whereas non-embedded assessments occur outside the usual learning process.2 Embedded assessments of actual performance take little additional class time and inherently have content validity.2 In PBL, embedded assessments capture student progress and performance during the regular session. An embedded assessment tool documents what took place during the learning process for the purposes of assessment; these tools often record what the assessors observed while the students were learning or performing a task. Non-embedded assessments often take the form of tests. Authentic assessments mimic what is actually done in practice by asking students to engage in real tasks.1 For students these tasks may be simplified, but the assessment should involve real performance. In authentic assessments, students apply what they learned to real situations, which for medical students means doing something similar to what physicians actually do. Crossing these two dimensions (embedded versus non-embedded, authentic versus non-authentic) yields a 2 × 2 matrix: assessments can be 1) embedded and authentic (the most desirable), 2) embedded and non-authentic, 3) non-embedded and authentic, or 4) non-embedded and non-authentic (e.g., a multiple-choice test is generally both non-embedded and non-authentic).

PBL is an instructional method involving different types of active learning. Because students are actively engaged in learning in the classroom and demonstrate their progress as they master the content or problem-solving skills, this method provides numerous opportunities for authentic, embedded assessment that do not take time away from instruction. Since PBL employs many different types of learning, the outcomes on which students can be assessed vary. The purposes of this manuscript are 1) to describe some specific assessment tools that can be used in PBL, and 2) to describe ways to assess students during the PBL process using embedded and authentic assessments.

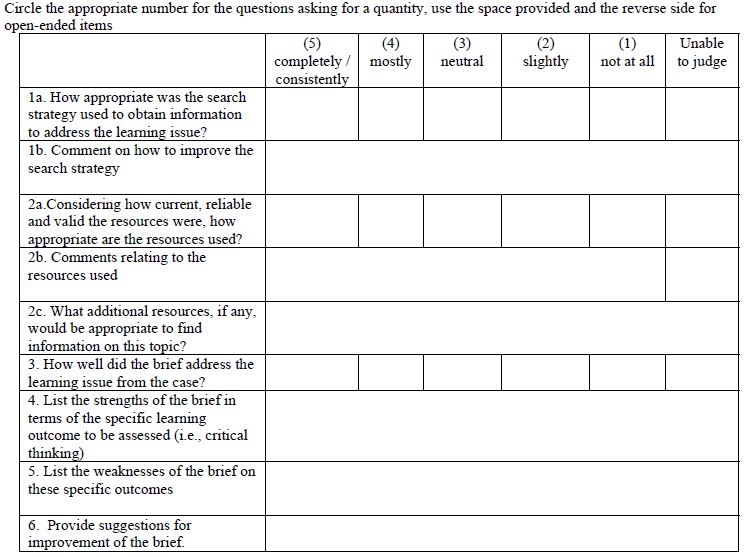

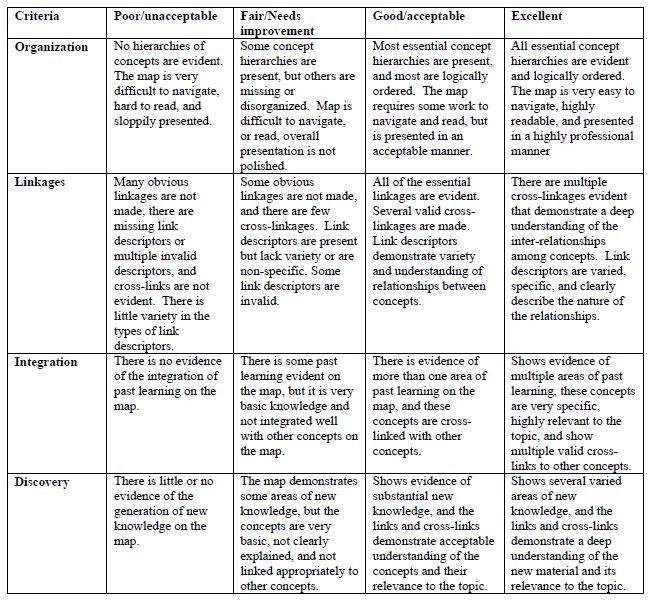

Most of the assessments relating to specific steps in the PBL process discussed later in this paper use the same two types of embedded assessment tools, scoring rubrics3 and Likert scale assessment forms, because they are very time efficient and yield equitable grading.4 Likert scales usually have 5 points, ranging from 1 = not at all to 5 = consistently demonstrates this trait (or very much); the middle category is neutral. Questions 1, 2a, and 3 in Table 1 are examples of Likert assessment scales. A rubric is a written summary of the criteria and standards that will be applied to assess the student’s work. Rubrics transform informed professional judgment into numerical ratings, which can be communicated rapidly and generally yield more consistent scoring.3 A rubric is usually constructed as a matrix with the criteria along the vertical axis and brief descriptions of the different levels of performance along the horizontal axis. Table 2 contains an example of a scoring rubric. Faculty members, peers, and learners themselves can use rubrics and Likert scales to give students formative and summative assessments. Both tools are useful for assessing in-class activities because they make the criteria clear and explicit in writing.3 Although this paper focuses on assessing students in PBL, it reflects current assessment trends in the use of rubrics, Likert scales, and embedded and authentic assessments. These tools and methods are used in primary, secondary, and higher education with many different types of instruction.1-3
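To make the matrix structure of a rubric concrete, the Python sketch below represents a rubric as criteria (rows) crossed with performance levels (columns) and converts the level an assessor chooses for each criterion into a numeric rating. The four level names, the `Rubric` class, and the two criteria for a brief are hypothetical illustrations, not the rubric shown in Table 2.

```python
from dataclasses import dataclass

# Performance levels along the horizontal axis, scored 1-4 from left to right.
# The number and names of the levels are an illustrative assumption.
LEVELS = ("beginning", "developing", "proficient", "exemplary")


@dataclass
class Rubric:
    # Criteria along the vertical axis: each maps to one short descriptor per level.
    criteria: dict[str, tuple[str, ...]]

    def __post_init__(self) -> None:
        for name, descriptors in self.criteria.items():
            if len(descriptors) != len(LEVELS):
                raise ValueError(f"{name!r} needs one descriptor per level")

    def score(self, ratings: dict[str, str]) -> dict[str, int]:
        """Turn the level an assessor chose for each criterion into a numeric rating."""
        missing = set(self.criteria) - set(ratings)
        if missing:
            raise ValueError(f"unrated criteria: {sorted(missing)}")
        return {name: LEVELS.index(ratings[name]) + 1 for name in self.criteria}


# Hypothetical two-criterion rubric for a brief.
brief_rubric = Rubric(criteria={
    "addresses the learning issue": ("not addressed", "partly", "mostly", "fully"),
    "sources": ("none listed", "lay sources only", "mixed", "appropriate scholarly sources"),
})
scores = brief_rubric.score({"addresses the learning issue": "proficient", "sources": "developing"})
print(scores, sum(scores.values()))
```

Because every assessor works from the same written descriptors, the numbers produced for different students are directly comparable, which is the source of the consistency the text attributes to rubrics.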

While scoring rubrics and Likert scale assessment forms can be used with many types of assessment, the specific criteria differ depending upon what is being assessed. Rubrics or assessment forms modeled on the examples given later can be constructed to assess most desired learning outcomes. Narrative comments based upon repeated observations of student performance can further support the rubric or Likert scale scores.

Students and instructors can use critical-incident observations5 to give specific examples of behaviors demonstrated during the PBL discussions, recording examples of whatever is being assessed. A critical incident documents only those events that are critical, influential, or decisive in the student’s developing learning abilities. Usually only a few critical incidents are noted for each student over a course, so recording them is not onerous. If, while feedback is being given, someone notes an especially excellent or poor performance, the instructor should briefly record the incident so that it can be used in the narrative comments on the student’s performance written at the end of the course. A few sentences are enough to jog the faculty member’s memory.

Assessment considerations within the PBL process

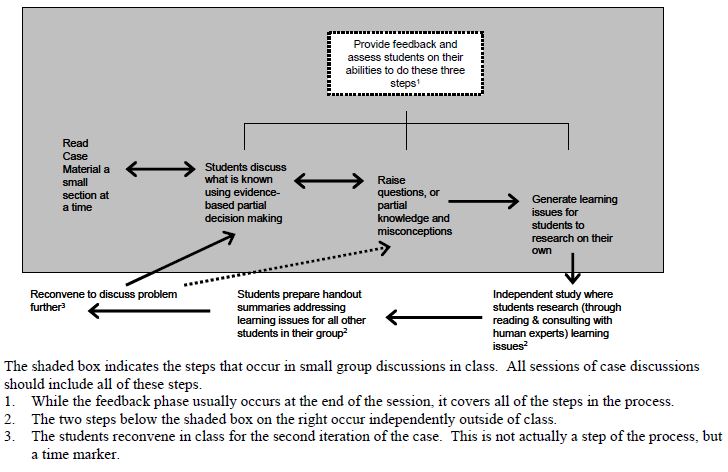

The classical version of PBL is predicated upon the principle that discussion of a problem or case stimulates learning. All material is discussed twice: once without prior preparation, and again after the students have researched the questions raised (called learning issues). This iterative process is shown in Figure 1. Discussion of what is known and what is unknown, and the raising of questions, can occur simultaneously rather than in the sequence shown in the figure. Because most readers are assumed to be somewhat familiar with the PBL process, I will comment only on specific ways of implementing it that can foster assessment of student learning. Many different types of assessment can occur through observation of the various steps of the PBL process. During any single step, or across all of the steps together, instructors and students can assess more than one type of learning simultaneously.

Many possible assessments are embedded within regular PBL activities, because these assessments flow from repeated observations of the students’ regular performance in the groups. Faculty members, peers, and the students themselves are all valid assessors. Peers and instructors complete assessment forms asking for evidence, or absence of evidence, of specific outcomes in their fellow students. The key is to sample enough observations without overwhelming everyone with the assessment process; students and faculty members can rotate in and out of the observer-assessor role.

Assessments during specific steps within the PBL process

Generation of learning issues. Throughout the discussion, students naturally raise questions they would like clarified. Toward the end of the session, the group refers to its written list of questions to generate and refine learning issues: topics the students need to research on their own outside of class to deepen their understanding. The students refine the questions, then group and classify them to make searching for answers more manageable. The questions can either request additional information on the specific problem or take the form of more general knowledge questions, which are preferred for promoting student learning. Questions about the specific problem can be transformed into general knowledge questions. For example, a specific question relating to the patient can be turned into the researchable question, “What type of laboratory data would be indicated for patients like this, and why?” Such a question can also require students to consider how strong the evidence is to support these laboratory tests in terms of efficacy and cost-effectiveness.

The Association of College and Research Libraries has defined five information literacy standards for higher education: determining information needs, acquiring information effectively and efficiently, critically evaluating information and its sources, incorporating selected information into one’s knowledge base, and using information legally and ethically.6 The generation of learning issues is an ideal step for evaluating students’ ability to define an information need.

Figure 1. Problem-based learning is an iterative process

Evidence-based decision-making has developed criteria to define a searchable question.7 These criteria should guide the generation of learning issues. First, the problem needs to be clearly identified. The problem should then be structured as a specific question, with its words chosen to facilitate the search for information. A suggested format for defining a searchable question in medicine is the PICO question, where PICO stands for P = problem or population to study, I = intervention, C = comparison, and O = outcome.8 An example of a good PICO question would be: For persons with dementia, will the use of environmental modifications decrease disruptive behaviors? The students’ ability to define an information need can be assessed directly by asking each student individually to write his or her questions for further study, formulated as PICO questions, and collecting them. A scoring rubric makes assessing these PICO questions efficient and also offers a way to give constructive feedback. Each letter of the PICO acronym can become a separate criterion in the rubric. The faculty members then develop 3-4 levels, or standards, for each criterion, stating explicit differences between levels. The levels should reflect how easily the question leads to useful and appropriate information.
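The PICO rubric described above can be sketched in Python as one criterion per letter, each with its own ordered levels, so that a single pass produces both a score and feedback pointing to the next standard. The three levels per letter (within the 3-4 the text suggests), the descriptors, the `assess_pico` function, and the levels an assessor might assign to the dementia question are all hypothetical.

```python
# Hypothetical PICO rubric: one criterion per PICO letter, three levels each.
# Descriptors are illustrative, not taken from the source.
PICO_RUBRIC: dict[str, tuple[str, ...]] = {
    "P": ("population or problem missing", "population named but vague", "population specific"),
    "I": ("intervention missing", "intervention vague", "intervention specific"),
    "C": ("no comparison", "comparison implied", "comparison explicit"),
    "O": ("outcome missing", "outcome vague", "outcome observable or measurable"),
}


def assess_pico(levels: dict[str, int]) -> tuple[int, list[str]]:
    """Score one PICO question and generate constructive feedback.

    `levels` maps each PICO letter to the level (1 = lowest) chosen by the assessor.
    """
    total = 0
    feedback = []
    for letter, descriptors in PICO_RUBRIC.items():
        level = levels[letter]
        if not 1 <= level <= len(descriptors):
            raise ValueError(f"{letter}: level must be 1-{len(descriptors)}")
        total += level
        if level < len(descriptors):
            # Point to the next-higher standard as the improvement target.
            feedback.append(f"{letter}: '{descriptors[level - 1]}'; next level: '{descriptors[level]}'")
    return total, feedback


# An assessor might judge that the dementia example leaves the comparison
# implicit (no environmental modification) and rate C one level lower.
total, notes = assess_pico({"P": 3, "I": 3, "C": 2, "O": 3})
print(total)  # 11
print(notes)
```

Keeping each letter as a separate criterion means the feedback names exactly which part of the question to sharpen, rather than returning only an overall grade.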

Independent study, development of briefs. Between PBL group sessions, the students research their learning issues or questions (see the bottom, unshaded parts of Figure 1). Students then prepare a short summary of the information they acquired to answer the learning-issue question, listing their information sources; these summaries are called briefs.9 A brief is at most one to two pages, including graphs or figures, and can be written in bullet points or outline form. Briefs are not required in most PBL groups, but in my experience they greatly raise the level of discussion when the groups reconvene. Without such summaries, students come to class with large piles of photocopied or printed material that they refer to repeatedly, spending a great deal of class time searching for information. Requiring students to abstract the essential ideas helps them synthesize their knowledge, fosters reflection on their learning, and checks whether they did in fact address the learning issues raised. Ideally, the briefs should be sent electronically to the other students in the group and to the instructor in charge of the course before the next class session, and students should read their peers’ briefs before coming to class. When students compile all of the briefs on the learning issues, they develop a resource, rich in information and appropriate sources, for further use.

Table 1. Peer and Instructor Assessment of Briefs

Attached to each brief should be a tracking sheet, as shown in Appendix A, which can be used for documentation and for self-assessment of the acquisition of information effectively and efficiently. It is a good idea for faculty members to develop a standard form and distribute an electronic copy that students can download and always attach to their briefs. The student preparing the brief should rate how useful his or her search strategy was for addressing the learning issue. For example, a student might note that the search strategy was too broad and returned several thousand possible citations to consult. This self-assessment can encourage students to seek help in defining more effective searches. Librarians are very useful in helping students formulate search strategies and identify appropriate places to find information. A universal objective of PBL education is that, in addition to learning the content itself, students become aware of the most appropriate resources for answering different types of questions. This awareness should help them learn other material in the future, and tracking sheets help students identify appropriate resources for different types of questions.

Assessments from briefs. Instructors and peers can assess the briefs quickly using a standard, generic form such as the one in Table 1. The review should be quick and aimed at determining whether each brief is satisfactory overall. Obtaining some feedback on each brief provides opportunities for immediate and continued improvement on the skills assessed. Students need not complete a form for every other student in their group every time, but each student should receive some feedback on most of the briefs he or she submits. Faculty members need to monitor the briefs more closely at the beginning of a PBL program or with a new class; as students progress, this formative assessment can be done less frequently. Toward the end of a course, faculty members and peers can use the same forms that provided formative feedback for summative assessment of specific learning outcomes, by reviewing the last few briefs and tracking sheets each student develops.
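The idea of reusing the same brief ratings first formatively and then summatively can be sketched as follows. This Python sketch assumes each brief receives one or more 1-5 Likert ratings on a single criterion from peers and instructors; it keeps the per-brief averages as a formative trend and averages the last few as a summative figure. Averaging, the default of three final briefs, and the `summarize_briefs` name are assumptions for illustration, not the author's procedure.

```python
from statistics import mean


def summarize_briefs(ratings_by_brief: list[list[int]], last_n: int = 3) -> dict:
    """Summarize 1-5 Likert ratings that peers and instructors gave a student's briefs.

    ratings_by_brief is in chronological order; each inner list holds all the
    ratings one brief received on a single criterion. Unrated briefs are skipped.
    """
    for ratings in ratings_by_brief:
        if any(not 1 <= r <= 5 for r in ratings):
            raise ValueError("Likert ratings must be between 1 and 5")
    per_brief = [mean(r) for r in ratings_by_brief if r]
    if not per_brief:
        raise ValueError("no rated briefs")
    return {
        # Formative view: average rating of each rated brief, oldest first.
        "trend": per_brief,
        # Summative view: pattern of performance over the last few briefs.
        "summative": round(mean(per_brief[-last_n:]), 2),
    }


history = [[2, 3], [3], [], [3, 4, 4], [4, 5]]  # five briefs; the third went unrated
print(summarize_briefs(history)["summative"])  # 3.72
```

Skipping unrated briefs reflects the point above that not every brief needs feedback from every group member; basing the summative figure on the final briefs rewards improvement rather than penalizing early attempts.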

Table 2. Concept Map Assessment Rubric11

Reviewing the briefs that summarize students’ research on learning issues, together with their tracking sheets, is an excellent way to assess students on several information literacy standards,6 including acquiring information effectively and efficiently, critically evaluating information and its sources, and using information legally and ethically. When faculty members or librarians provide formative feedback on the types of searches the students performed, they can encourage students to use more than general-purpose Internet search engines.

Some topics lend themselves better than others to assessing specific learning outcomes. For example, a question that requires reviewing different types of literature can be assessed for the integration of different ideas or perspectives, whereas identifying the incidence or prevalence of a disease might assess only information literacy skills. Because information obtained from print or electronic sources is not directly related to the patient, students can usually be assessed on their ability to apply theoretical knowledge to the specific patient in the problem. Before reading a brief, instructors should use their judgment to decide which specific learning outcomes its topic can be assessed on. Instructors or students might keep a record of the learning outcomes on which individual students have been assessed and encourage students to take on different kinds of learning-issue research throughout the course. Faculty members can also check that individual students are not always selecting the more factual issues (which are easy to research in textbooks), and encourage them to select more integrative learning issues that require more extensive research or reading of different perspectives.
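The record of which learning outcomes each student has been assessed on can be kept very simply. The Python sketch below counts assessments per student and outcome and lists the outcomes a student has not yet been assessed on, which could guide the choice of that student's next learning issue. The `OutcomeLog` class and the four outcome labels are hypothetical; a course would substitute its own outcomes.

```python
from collections import defaultdict

# Hypothetical outcome labels drawn from the kinds of learning discussed above.
OUTCOMES = (
    "information literacy",
    "integration of perspectives",
    "application to the patient",
    "written communication",
)


class OutcomeLog:
    """Record which learning outcomes each student's briefs have been assessed on."""

    def __init__(self, outcomes: tuple[str, ...] = OUTCOMES) -> None:
        self.outcomes = outcomes
        # Every new student starts with a zero count for each outcome.
        self._counts: dict[str, dict[str, int]] = defaultdict(
            lambda: dict.fromkeys(self.outcomes, 0)
        )

    def record(self, student: str, outcome: str) -> None:
        if outcome not in self.outcomes:
            raise ValueError(f"unknown outcome: {outcome!r}")
        self._counts[student][outcome] += 1

    def not_yet_assessed(self, student: str) -> list[str]:
        """Outcomes to steer this student toward on the next learning issue."""
        counts = self._counts.get(student, dict.fromkeys(self.outcomes, 0))
        return [o for o in self.outcomes if counts[o] == 0]


log = OutcomeLog()
log.record("Student A", "information literacy")  # e.g., a prevalence-rate brief
log.record("Student A", "information literacy")  # another factual brief
print(log.not_yet_assessed("Student A"))
```

A student whose log shows only "information literacy", as in this usage example, is the case the text describes: someone repeatedly choosing the more factual issues who might be encouraged toward a more integrative one.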

In my opinion, the briefs and the patterns observed across overall group discussions are the most important assessment tools in PBL. Because briefs are written, they can become a portfolio of each student’s work throughout a semester, which makes it easy to show students their progress over time. If faculty members rotate among groups without spending much time with each one, assessing the briefs is an excellent way to determine what individual students are learning and how well each group is adhering to the intended objectives. Briefs are also one of the few individual components of what is normally a group, collaborative experience.

Second discussion of the case. During the second iteration on the material, students reconvene to discuss the case after researching their learning issues. During this discussion they should critically assess what they have learned and integrate multi-disciplinary content knowledge while addressing its application to the problem. On this second pass they follow a similar process of discussing the case, but now they are armed with much more information (see the shaded box in Figure 1). The explanation of new knowledge gained from learning-issue research should emerge naturally from the case discussion. If more questions arise, as often happens, they become learning issues for further study; thus, the process is iterative. Students often prefer simply to report on what they researched in short sequential monologues rather than engage in an integrated dialogue on the problem; this practice should be avoided to allow a more meaningful discussion. One mechanism that fosters a rich, multi-disciplinary discussion is to ask the students collectively to construct a concept map10 summarizing what they know about the problem or case during this second pass. Concept maps graphically illustrate the integration of everything the students know about a problem, showing relationships and hierarchies.10 A concept map built during the second iteration integrates the group’s collective knowledge and its skills in inquiry, analysis, and integration, all of which instructors and students can assess using a rubric such as the one in Table 2.11 Instructors can use concept maps to assess how knowledge is organized into hierarchies and how separate details are associated and integrated. Generally, the group receives a group grade for its concept map. Even if instructors are not present for the discussion, they can assess concept maps that are handed in.
Students can use computer programs such as “Inspiration”12 to produce concept maps easily and neatly. Inspiration is a software package for constructing graphic organizers; students can use it to develop ideas and organize their thinking, and to brainstorm, plan, organize, outline, diagram, and write. Because the software uses standard conventions, or symbols, to show relationships, hierarchies, and consequences, it is very useful for constructing concept maps. Concept maps made with Inspiration12 are easier to follow, and therefore easier to assess, than those drawn by hand or without the software’s standard notation. If concept maps are constructed on a whiteboard linked to a computer, such as a Smart Board or Smart Sympodium, they can be saved electronically to a computer file.

Feedback phase. Feedback should occur at the end of each session, and groups need to reserve time for this formative assessment to occur before the group recesses for the day. Instructors often need to model how to give constructive feedback in a supportive way. Students might be asked to comment orally or use a written form to provide this feedback. A suggested format might be to give several statements or questions reflecting performance and ask the students to use a Likert scale to rate their assessments and write comments supporting the numbers they assigned.

Feedback on the first iteration of the problem may concentrate on the quality of the discussion, the students’ use of evidence-based decision-making, their ability to formulate learning issues, and group functioning. Appropriate questions for formative feedback on the second iteration might be: 1) How much did you learn from this PBL discussion? 2) How well did the PBL case discussion address the learning issues from the case? 3) How well will you be able to apply what you learned from the case discussion to patient care? and 4) an open-ended prompt such as, “Please provide suggestions to improve the PBL discussions.” The first three questions can use Likert scales and ask for supporting comments. Such feedback assesses students’ ability to become self-directed learners. Different people can serve as assessors, but peer assessments and self-assessments are essential to obtaining a fuller picture.
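Once a group has answered the Likert questions, the numbers can be summarized question by question before the session ends. The Python sketch below reports the median rating (often preferred over the mean for ordinal Likert data) and counts low ratings that might prompt discussion. The `summarize_feedback` function, the threshold of 2, and the sample responses are assumptions for illustration; the written comments remain the main source of formative feedback.

```python
from statistics import median


def summarize_feedback(responses: dict[str, list[int]], low: int = 2) -> dict[str, dict]:
    """Summarize one session's Likert responses (1-5) question by question.

    Counting ratings at or below `low` flags questions the group may want to
    discuss before it recesses; questions with no responses are skipped.
    """
    summary = {}
    for question, ratings in responses.items():
        if not ratings:
            continue
        summary[question] = {
            "median": median(ratings),
            "n_low": sum(1 for r in ratings if r <= low),
        }
    return summary


# Hypothetical ratings from four group members on questions 1-3.
session = {
    "Q1 learned": [4, 5, 3, 4],
    "Q2 addressed learning issues": [2, 3, 4, 2],
    "Q3 apply to patient care": [5, 4, 4, 5],
}
for question, stats in summarize_feedback(session).items():
    print(question, stats)
```

In this example the second question stands out, with a median of 2.5 and two low ratings, suggesting the group revisit how well its discussion covered the learning issues.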

DISCUSSION

On a daily or weekly basis, observations of student performance in PBL activities and reviews of briefs should offer formative feedback to help students improve. These assessments offer insight into how well students are learning and are consistent with current accreditation standards, such as those of the LCME.13 The same assessment forms, taken together, can be used to examine repeated observations or reviews for trends and patterns, which can become the basis for summative evaluations. A summary of the narrative comments made throughout the semester should also be included in the summative evaluations. Standard ED-32 of the LCME13 states that “Narrative descriptions of student performance and of non-cognitive achievement should be included as part of evaluations in all required courses and clerkships where teacher-student interaction permits this form of assessment.”

Faculty members who are accustomed to giving objective tests may be concerned about the reliability and validity of the kinds of measures discussed in this manuscript. The assessment literature indicates that scoring rubrics and Likert scales yield reliable and valid measurements if the criteria are appropriate and the descriptions of the levels of performance are sufficiently grounded in real samples of work of differing quality.1-3 Observations from a single class are usually not indicative of true abilities, whereas repeated observations reveal patterns of performance and are therefore more reliable. Rubrics and Likert scales can also be used to chart student progress over time.4 They provide equitable measures because the same criteria are applied to all students, and this perception of equitable grading is especially important to minority students.4 To further improve reliability, trained assessors can observe a sample of PBL sessions. Training assessors, including peer assessors, involves practice, with specific feedback, in what to observe and how to complete the rubrics or checklists consistently. Since many of the assessments are both embedded and authentic, training students to be reliable assessors also improves their own learning and performance: the training shows them what an excellent level of performance looks like, and they come to understand what is expected of them before they are assessed. Triangulating data from different sources collected over time yields valid measures of the student’s learning. In assessment, triangulation “refers to the attempt to get a fix on a phenomenon or (an interpretation) by approaching it via several independent routes. In short, you avoid dependence on the validity of any one source by the process of triangulation.”14 Triangulation supports a finding by showing that independent measures agree.5,14 It can draw on different data sources, different times, different methods, and different types of data.5 In addition, because many of the assessments discussed here are authentic and mimic professional practice, they should have good predictive validity for future clinical work. These embedded and authentic measurements also have high face validity because they are based upon actual performance.
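One simple way to check whether independent sources agree is sketched below in Python: given each source's mean Likert rating on the same criterion, it reports the spread between the highest and lowest and whether that spread falls within a tolerance. The `triangulate` function and the one-point tolerance are assumptions, and this covers only agreement across data sources, not the triangulation across times, methods, or types of data that the text also mentions.

```python
def triangulate(ratings_by_source: dict[str, float], tolerance: float = 1.0) -> tuple[bool, float]:
    """Check whether independent assessors' mean ratings on one criterion agree.

    ratings_by_source maps a source (e.g. "faculty", "peers", "self") to that
    source's mean Likert rating. Agreement within `tolerance` points supports the
    finding; a larger spread signals that more observations are needed.
    """
    if len(ratings_by_source) < 2:
        raise ValueError("triangulation needs at least two independent sources")
    values = list(ratings_by_source.values())
    spread = max(values) - min(values)
    return spread <= tolerance, spread


print(triangulate({"faculty": 4.0, "peers": 3.5, "self": 4.5}))  # (True, 1.0)
print(triangulate({"faculty": 2.0, "self": 4.5}))                # (False, 2.5)
```

A disagreement, as in the second call, does not mean either source is wrong; it indicates that no single source should be relied on until further observations resolve the difference.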

CONCLUSIONS

This manuscript describes assessment processes for medical students that can be used during the normal conduct of PBL, so that assessment becomes part of the learning process rather than being seen as taking time away from instruction. Formative assessment can be an ongoing and integral part of the learning and improvement cycle. Summative assessment can be based upon patterns of performance toward the end of the course. Because students continually demonstrate their mastery in PBL small groups, many opportunities for embedded, authentic assessment of student learning readily exist. Specifically, this manuscript describes the use of scoring rubrics, Likert scale assessment forms, and reflective comments in both formative and summative assessments. Appropriate assessors include faculty members, student peers, and the students themselves. The summaries of independent research that students prepare to address their learning issues, called briefs,9 are very useful for assessing students on a) their developing information literacy skills, b) the synthesis of their knowledge, c) the application of knowledge to clinical problems, and d) their written communication skills. The briefs can become a portfolio of the students’ work that is very useful for documenting learning outcomes for the LCME13 or other accreditation agencies. When students develop concept maps10 on the second iteration of the problem, they collaboratively integrate all that they know about the problem. These concept maps can be used to assess a) how accurately the students have mastered the knowledge, b) how appropriately they have synthesized their older and newly acquired knowledge, and c) the organization and integration of their knowledge, showing linkages among concepts, causes, effects, and implications.
Repeated observations of individuals working together throughout the in-class steps of the PBL process provide opportunities for assessment of a) knowledge; b) the integration of various theories, explanations, and multi-disciplinary perspectives; c) the individual’s ability to work on teams or groups; and d) professional behaviors. Thus, PBL offers many rich and varied authentic and embedded assessment opportunities.

REFERENCES

- Wiggins G. Educative assessment. San Francisco: Jossey-Bass; 1998.

- Wilson M, Sloane K. From principles to practice: An embedded assessment system. Applied Measurement in Education. 2000;13(2):181-208.

- Walvoord BE. Assessment clear and simple. San Francisco: Jossey-Bass; 2004.

- Stevens DD, Levi A. Leveling the field: Using rubrics to achieve greater equity in teaching and grading. Teaching Excellence. 2005;17(1):1-3.

- Miles MB, Huberman AM. Qualitative data analysis. 2nd ed. Thousand Oaks, CA: Sage Publications; 1994.

- Association of College and Research Libraries. Information literacy competency standards for higher education. Available at: http://www.ala.org/ala/acrl/acrlstandards/informationliteracycompetency.htm. Accessed October 5, 2004.

- Sackett D, Richardson W, Rosenberg W, Haynes R. Evidence-based medicine: How to practice and teach evidence-based medicine. New York: Churchill Livingstone; 1997.

- Forrest J, Miller RW. Teaching evidence-based decision making. Presented at: Evidence-Based Decision Making for Health Professional Conference; 2001.

- Love MB, Clayson ZC, Blumberg P. Real stories: Teaching cases in community health. San Francisco: Department of Health Education, San Francisco State University; 2002.

- Novak JD. Learning, creating, and using knowledge. Mahwah, NJ: Lawrence Erlbaum Associates; 1998.

- Miller P. Concept map assessment rubric. Presented at: The Teaching Professor Conference; 2004.

- Inspiration software. Available at: www.inspiration.com. Accessed October 3, 2005.

- Liaison Committee on Medical Education (LCME). Accreditation standards. Available at: http://www.lcme.org/standard.htm. Accessed October 12, 2004.

- Scriven M. Evaluation thesaurus. Newbury Park, CA: Sage Publications; 1991.

NOTE: Please refer to the complete PDF file for the referenced Tables and Figures