ABSTRACT

Medical educators are identifying how learning opportunities help further student professionalism, which requires explicit methods of assessment. This paper reports how student comments about peer professionalism may contribute to our understanding and assessment of medical student professionalism. Participants were 111 first-year medical students in a required problem-based learning course. An inductive qualitative analysis identified themes across student comments. An independent-samples t test was then calculated, with comment characteristic (positive versus negative) as the independent variable and the mean score of the nine scale questions of the professionalism assessment as the dependent variable. A total of 12 comment themes were distinguished. Of the comments about male (n = 292) and female (n = 212) students, 82% and 88% were positive, respectively. There was a significant difference in the mean professionalism score between the students who did and did not receive negative comments, t = 2.93, df = 109, p = .002. Overall, this study provides an initial framework from which future studies may draw. This investigation will assist educators in interpreting the rich and complicated data of student comments, furthering how we incorporate qualitative information into the overall evaluation of student professionalism.

INTRODUCTION

Medical educators are charged with furthering medical student professionalism skills. Competency-based curriculum literature has provided direction for teaching and assessing student professionalism. For example, Herbert Swick1 developed a comprehensive definition of professionalism. Frameworks, such as CanMEDS2, the University of Dundee Three-Circle Model3, the ACGME Competencies4, and the AAMC Medical School Objectives Project5, have also described the knowledge, skills and attitudes that characterize professionalism. Advances in competency-based curricula have recast the ill-defined construct of professionalism as a collection of measurable variables, which has informed the development of assessments.

Several medical schools are initiating learning opportunities to promote medical student professionalism. Swick et al.6 surveyed medical schools to determine whether they are developing and assessing formal instruction related to professionalism. The results suggest that most medical schools offer some form of instruction (didactic or experiential) related to professionalism. Tulane University, for example, has developed the Program for Professional Values and Ethics in Medical Education.7 This program encourages students, residents, and faculty to examine the characteristics of professionalism and discuss how modeling professional attributes can impact health care practices.

Educators also need to identify how learning opportunities help further student professionalism, which requires explicit methods of assessment. Methods for assessing professionalism are less developed than those for other constructs, such as medical knowledge.8 The purpose of this paper is to investigate how student comments on peer evaluations may contribute to our understanding and assessment of medical student professionalism. Peer evaluations promise to be a valuable source of information. Arnold9 explained in her review of the literature that students have frequent, close contact with their peers, which affords opportunities to observe behaviors outside the presence of faculty and staff. Student reflections, then, may provide unique insights into peer professionalism.

As educators collect assessment data on student professionalism, questions surface about how to interpret student comments about the professionalism of peers. Student understanding of professionalism can be informed by competency frameworks and scale questions on evaluations, but written comments may indicate which characteristics of professionalism students attend to and focus on. Exploring how student comments are delineated into themes will further the theoretical development of the complex educational phenomenon of student professionalism. We hypothesized that an examination of student comments would reveal several distinct themes of professionalism behaviors. We also hypothesized that student comments would be aligned with scale questions. That is, students who receive negative comments would have lower scores on scale questions than students who only receive positive comments. This investigation aims to help educators interpret the rich and complicated data of student comments, furthering how we incorporate qualitative information into the overall evaluation of student professionalism.

MATERIALS AND METHODS

The Professional Peer Assessment

In the present study, first-year medical student comments about the professionalism of peers were analyzed. The comments were collected from a peer professionalism assessment that was implemented in a problem-based learning course. An important consideration for an assessment instrument is the definition and specificity of the construct.10 Professionalism was defined as student behaviors characterized across nine domains, which form the foundation of our medical school’s code of professionalism. Using existing literature and frameworks (e.g., Swick’s professionalism characteristics, the AAMC Medical School Objectives Project, CanMEDS, the University of Dundee Three-Circle Model, and the ACGME Competencies), our medical school curriculum committee developed the professionalism code. The nine domains include:

Honesty and Integrity

Accountability

Responsibility

Respectful and Nonjudgmental Behavior

Compassion and Empathy

Maturity

Skillful Communication

Confidentiality and Privacy in all patient affairs

Self-directed learning and Appraisal skills

Using these domains, the Associate Dean for Student Services and two faculty members in the Office of Medical Education developed nine broad questions, rated on a 7-point scale, to address each professionalism domain. The peer professionalism assessment also includes a general comment question, which allows the evaluators to reflect on their peers’ professionalism.

Instrument Implementation

Multidisciplinary faculty designed a problem-based learning (PBL) experience to augment an interdisciplinary basic-science course, Human Function. It is a year-long course that combines the disciplines of biochemistry, human genetics, and human physiology. Each of the 14 PBL groups includes 8 students and one facilitator. The course is divided into two fifteen-week semesters. After the first fifteen weeks, students are placed into a new PBL group with a different facilitator and different students. All students, then, have the advantage of working with two facilitators each year and with different peers in each semester.

Each fifteen-week component includes five cases. Each case confronts students with a complex problem. A packet of information explains a patient’s chief complaints, psychosocial history, physical symptoms, and particular lab results. Students are asked to share and explore hypotheses about the patient’s condition. Students also identify key learning issues, or questions about the material. Students research the learning issues and present information to the group members in the following sessions.

Given the expectations of PBL, it was an appropriate course in which to implement the professionalism evaluation. As students cooperatively share information, PBL aims to develop several skills that are characteristic of the nine domains of student behaviors outlined in our code of professionalism. Students, for example, apply interpersonal skills as information is presented and critiqued. Students are also accountable for attending PBL sessions and contributing information to address the learning issues.

During the 2004-2005 academic year, 111 students were given the peer professionalism assessment beginning in the last week of each semester. Students were asked to complete a peer evaluation for each student in their PBL group. Evaluators remained anonymous, and students were not penalized for failing to complete the peer evaluations.

Research Questions

Two research questions guided the analyses of student comments on the peer professionalism evaluation:

1. What are the underlying themes of student comments about the professionalism of peers?

As Harris11 noted, qualitative analyses investigate data in the form of words rather than numbers. A qualitative approach evaluates the raw, descriptive data of student comments and organizes them into themes, which can reveal a conceptual framework. Using the principles of qualitative data analysis, the research process was organized into the following steps: First, student comments were collected from the 2004-2005 peer professionalism evaluations. Comments were also separated according to whether the student being evaluated was male or female.

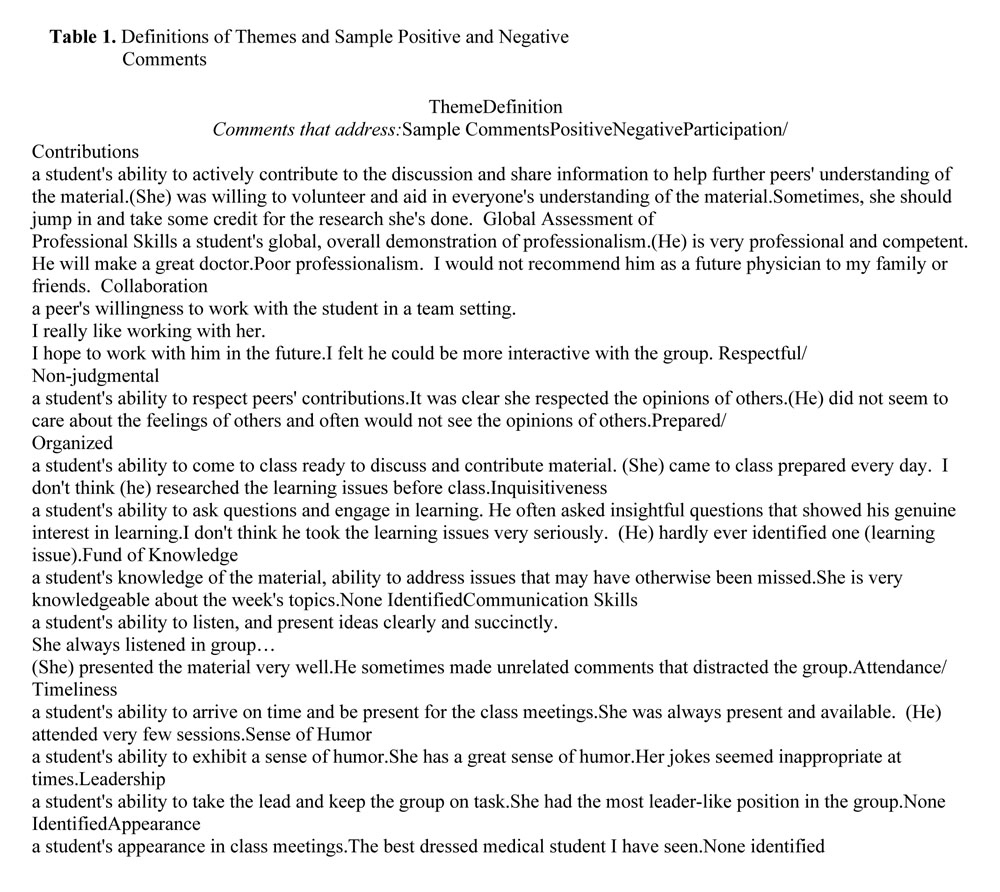

Second, the comments were entered into Nudist 6 software for analysis.12 The principal author conducted the initial interpretation of the data, searching for themes that were then used to code the data. This strategy is an inductive method for identifying codes. Third, all authors reviewed the comments and the identified themes. During three two-hour meetings, the authors audited the initial interpretation of the data set. The authors then made modifications to the themes until consensus was achieved. Finally, the data were coded and organized into tables.
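The final tabulation step can be illustrated with a short sketch. This is only an illustration in Python, not the procedure carried out in the software used for this study. The records below are invented, the function names `tally_themes` and `percent_positive` are hypothetical, and only the theme labels are taken from the results.

```python
from collections import Counter

# Hypothetical coded comments: (gender of student evaluated, theme, valence).
# These records are invented for illustration; they are not study data.
coded_comments = [
    ("male", "participation/contributions", "positive"),
    ("male", "attendance/timeliness", "negative"),
    ("female", "participation/contributions", "negative"),
    ("female", "collaboration", "positive"),
    ("male", "participation/contributions", "positive"),
]


def tally_themes(records):
    """Count comments for each (gender, theme, valence) combination."""
    return Counter(records)


def percent_positive(records, gender):
    """Percentage of the comments about one gender that were coded positive."""
    valences = [valence for g, _, valence in records if g == gender]
    return 100 * valences.count("positive") / len(valences)


counts = tally_themes(coded_comments)
for (gender, theme, valence), n in sorted(counts.items()):
    print(f"{gender:6} {theme:28} {valence:8} {n}")
```

A frequency table by theme and gender can be read directly from `counts`, and `percent_positive` gives the share of positive comments for each group.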

2. Is there a significant difference in the mean professionalism scores between students who did and did not receive a negative comment?

An independent-samples t test was calculated with comment characteristic as the independent variable and the mean scale score as the dependent variable. The dependent variable was the mean score of the nine scale questions included in the professionalism assessment; because each question used a 7-point scale, mean scale scores could range from 1 to 7. Students were divided into two groups by comment characteristic: those who received at least one negative comment (n = 28) and those who did not (n = 83). We hypothesized that students who received a negative comment would have a lower mean professionalism score than students who only received positive comments.
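The comparison described above can be sketched as follows. This is a minimal illustration that assumes the pooled-variance (Student) form of the test, which is consistent with the reported degrees of freedom (83 + 28 - 2 = 109) but is not stated in the text. The scores are invented and the function name `independent_samples_t` is hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def independent_samples_t(group_a, group_b):
    """Return Student's t statistic (pooled variance) and its degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    std_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / std_error, df


# Hypothetical mean scale scores on the 1-7 scale; not study data.
no_negative_comment = [6.4, 6.1, 6.3, 6.0, 6.5, 6.2]
negative_comment = [5.8, 6.0, 5.5, 6.1]

t, df = independent_samples_t(no_negative_comment, negative_comment)
print(f"t = {t:.2f}, df = {df}")
```

A positive t here means the first group has the higher mean; the p value would then be read from the t distribution with the returned degrees of freedom.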

RESULTS

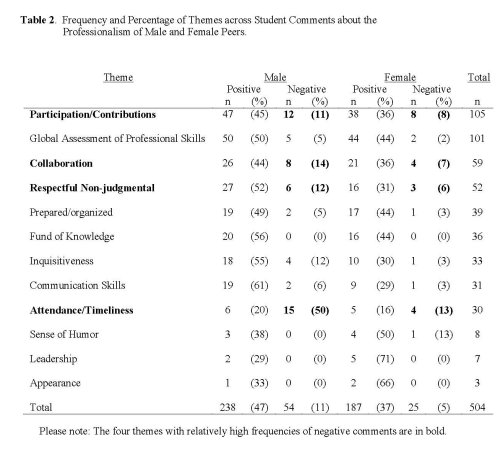

Students were relatively compliant. A total of 111 students (63 males and 48 females) were included in this study. Across the 14 PBL groups in each semester, 1,554 peer assessments were distributed, and approximately 93% (1,445) were completed. Across the 1,445 completed peer evaluations, 504 comments were identified. A total of 12 themes were distinguished (see Table I). Table II identifies the frequencies and percentages of comments for each theme across male and female students. Not all students received comments for each theme. The majority of comments were positive: combining the positive comments about male (n = 238) and female (n = 187) students, 84% of all comments were positive.
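The reported proportions can be checked with simple arithmetic. The sketch below uses only counts stated in this paper. The one assumption is the reading that each of the 111 students was asked to rate seven peers in each of two semesters, which reproduces the 1,554 distributed assessments but is not stated explicitly in the text.

```python
# Counts reported in this paper.
students = 111
distributed = 1554
completed = 1445
male_comments, female_comments = 292, 212
male_positive, female_positive = 238, 187

# Assumption (not stated in the text): 7 peers per student, 2 semesters.
assumed_distributed = students * 7 * 2

total_comments = male_comments + female_comments
completion_rate = 100 * completed / distributed
overall_positive = 100 * (male_positive + female_positive) / total_comments
male_positive_rate = 100 * male_positive / male_comments
female_positive_rate = 100 * female_positive / female_comments

print(assumed_distributed)             # 1554
print(total_comments)                  # 504
print(round(completion_rate, 1))       # 93.0
print(round(overall_positive, 1))      # 84.3
print(round(male_positive_rate, 1))    # 81.5
print(round(female_positive_rate, 1))  # 88.2
```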

The most commonly identified themes for both male and female students were participation/contributions (n = 105), a global assessment of professional skills (n = 101), collaboration (n = 59), and respectful/nonjudgmental (n = 52) (see Table II). Both males and females received several negative comments relating to participation/contributions, collaboration, respectful/nonjudgmental, and attendance/timeliness. The most common negative theme for males was attendance/timeliness, whereas the most common negative theme for females was participation/contributions.

There was a significant difference in mean professionalism scores between students who did and did not receive a negative comment (t = 2.93, df = 109, p = .002). Overall, mean professionalism scores were higher for students who did not receive a negative comment (M = 6.23, SD = .29) than for students who received at least one negative comment (M = 5.95, SD = .56). The effect size for comment characteristic was moderate (d = .67), suggesting that there is a practical difference in the mean professionalism score between students who did and did not receive a negative comment.
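For readers less familiar with the effect size statistic, the sketch below shows one common way to compute Cohen's d, using the pooled standard deviation as the standardizer. The text does not state which form of d was used, so this choice is an assumption. The function names are hypothetical, and the "negligible" label for values below 0.2 is ours rather than one of Cohen's conventional benchmarks (0.2 small, 0.5 medium, 0.8 large).

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_sd = sqrt(
        ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b))
        / (n_a + n_b - 2)
    )
    return (mean(group_a) - mean(group_b)) / pooled_sd


def describe(d):
    """Label |d| using Cohen's conventional benchmarks of 0.2, 0.5, and 0.8."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"  # our label for values under 0.2


print(describe(0.67))  # medium
```

Because d is expressed in standard deviation units, it does not depend on sample size in the way the t statistic does, which is why it is reported alongside the significance test.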

DISCUSSION

The majority of students completed the peer professionalism evaluations. This finding is consistent with previous research, which found that students are willing to complete anonymous, formative evaluations of the professionalism of their peers.13 The majority of comments reveal that students target a variety of appropriate themes. For example, students attended to their peers’ participation, attendance, and ability to respect other opinions, which are important components of professionalism. Overall, the student comments addressed a range of themes, indicating that they can contribute valuable information to the assessment of students’ professionalism.

The themes that drew several negative comments suggest that students are attuned to whether their peers are meeting basic expectations. Students are willing to point out when their peers are not being punctual, contributing to the group, respecting others, or engaging in discussion. Bryan and coworkers14 examined a peer assessment of professionalism in a gross anatomy course included in the Mayo Medical School curriculum. Consistent with the findings of this study, the authors reported that the majority of negative comments addressed the areas of inter-professional respect and accountability.

This study revealed that students who received a negative comment had an overall lower mean professionalism score than students who did not receive a negative comment. This result is consistent with the research by Rudy and coworkers15, which reported that positive comments about professionalism in a peer evaluation were associated with higher scale scores.

There are some limitations of this study. For example, the anonymity of the evaluators limited the analyses. Identifying evaluator gender would show whether male and female evaluators differ in the professionalism themes they address. In addition, this study used nine scale questions and one general comment question. The scale questions may influence students to reflect on particular themes. Several of the themes are consistent with professionalism frameworks. For example, several themes were related to Swick’s professionalism characteristics. Student comments that address participation/contributions, prepared/organized, and attendance/timeliness are all similar to Swick’s characteristics of accountability and responsibility. The communication, collaboration, respectful/nonjudgmental, and self-directed learning/inquisitive themes are similarly aligned. Some unique themes identified in student comments include fund of knowledge, sense of humor, and appearance. Differently phrased scale items and comment items on peer evaluations may reveal comment themes that were not present in this study. Future research in this area could help answer these important questions.

Future research may also explore how student assessment of their peers’ professionalism may change over time. The student comments in this study were collected for two semesters of a PBL course. While no discernible differences in the frequencies and themes of comments were found between semesters, future studies can address whether the comments become more sophisticated as students progress through the curriculum. The exercise of evaluating peers early in the curriculum can be characterized as a training tool, which may be repeated to sharpen student appraisal skills. Implementing multiple opportunities to assess peer professionalism may further student ability to offer more precise information about professional behaviors. Future studies, for example, may help determine whether student comments about their peers’ professionalism improve between the first year and the fourth year of the medical school curriculum.

Further, future studies can examine how student assessment of their peers’ professionalism may differ between clinical and didactic learning situations. Louise Arnold9 noted that professionalism assessments must consider the learning context. This study focused on a problem-based learning course. Future studies may examine whether the themes presented in Table I reflect themes identified in other learning situations, such as clinical clerkships. In addition, educators may have an effect on the learning context. Educators have diverse characteristics and different teaching styles. Future studies may examine how educators affect student ability to assess their peers’ professionalism.

Overall, this study provides an initial framework from which future studies may draw. The themes will allow researchers to deductively examine what professionalism characteristics students may address, particularly for problem-based learning courses. This study also suggests that student comments are valuable sources of information. Students are willing to reflect on the behavior of their peers across a variety of professionalism attributes. Still, the literature recommends that educators implement several assessments to gauge student professional behavior. We agree with other researchers who argue that student observations about their peers’ professionalism are only one piece of the assessment puzzle.16 Educators should exercise caution when using peer comments for evaluation and grading purposes. Students are only beginning to develop their appraisal skills, which may not be as sophisticated as those of more experienced evaluators.17 Additional assessments, coupled with student comments, are required to create a more complete and detailed picture of whether students are acquiring and demonstrating professionalism competencies.

REFERENCES

1. Swick, H.M. Toward a normative definition of medical professionalism. Academic Medicine. 2000; 75(6): 612-616.

2. CanMEDS. Extract from the CanMEDS 2000 Project Societal Needs Working Group Report. Medical Teacher. 2000; 22(6): 549-554.

3. Harden, R.M., Crosby, J.R., Davis, M.H., and Friedman, M. From competency to meta-competency: A model for the specification of learning outcomes. Medical Teacher. 1999; 21(6): 546-552.

4. Accreditation Council for Graduate Medical Education (ACGME). Accreditation Council for Graduate Medical Education Outcomes Project. 2005. Available at http://www.acgme.org/outcome/. Accessed December 15, 2005.

5. Association of American Medical Colleges (AAMC). Report 1: Learning Objectives for Medical Student Education. Guidelines for Medical Schools. Washington, DC: Medical School Objectives Project, AAMC, 1998.

6. Swick, H.M., Szenas, P., Danoff, D., and Whitcomb, M.E. Teaching professionalism in undergraduate medical education. Journal of the American Medical Association. 1999; 282: 830-832.

7. Lazarus, C.J., Chauvin, S.W., Rodenhauser, P., and Whitlock, R. The program for professional values and ethics in medical education. Teaching and Learning in Medicine. 2000; 12(4): 208-211.

8. Dannefer, E.F., Henson, L.C., Bierer, S.B., Grady-Weliky, T.A., Meldrum, S., Nofziger, A.C., Barclay, C., and Epstein, R.M. Peer assessment of professional competence. Medical Education. 2005; 39: 713-722.

9. Arnold, L. Assessing professional behavior: yesterday, today, and tomorrow. Academic Medicine. 2002; 77(6): 502-515.

10. DeVellis, R.F. Scale development: Theory and applications. London: Sage, 2003.

11. Harris, I. Qualitative Methods. In Geoff Norman (ed.) International Handbook of Research in Medical Education, pp. 45-85. Hingham, MA: Kluwer, 2002.

12. Nudist 6 software. QSR: Victoria: Scolari, 2000.

13. Arnold, L., Shue, C.K., Kritt, B., Ginsburg, S., and Stern, D.T. Medical students’ views on peer assessment in professionalism. Journal of General Internal Medicine. 2005; 20(9): 819-824.

14. Bryan, R.E., Krych, A.J., Carmichael, S.W., Viggiano, T.R., and Pawlina, W. Assessing professionalism in early medical education: Experience with peer evaluation and self-evaluation in the gross anatomy course. Annals of the Academy of Medicine. 2005; 34(8): 486-491.

15. Rudy, D.W., Fejfar, M.C., Griffith, C.H., and Wilson, J.F. Self- and peer assessment in a first-year communication and interviewing course. Evaluation and the Health Professions. 2004; 24: 436-445.

16. Lynch, D.C., Surdyk, P.M., and Eiser, A.R. Assessing professionalism: a review of the literature. Medical Teacher. 2004; 26(4): 366-373.

17. Rees, C., and Shepherd, M. Students’ and assessors’ attitudes towards students’ self-assessment of their personal and professional behaviors. Medical Education. 2005; 39: 30-39.