ABSTRACT

An independently-developed, interactive, Web-based examination is used to assess learning in histology. The examination assesses factual information, concepts and whether the examinees recognize the organs of the body and the cells, tissues and structures that are associated with them and the other parts of the body. The method of assessment streamlines the administration and scoring of an examination and overcomes some of the inadequacies of examinations that use a microscope, video, projection slides or projected digital images. The strengths of the examination flow from the delivery of the examination to a computer workstation, the dual display of the questions and the images of the specimens on a computer monitor, and the use of an interactive computer interface. The interface allows the examinees to perform operations that improve on the process of test-taking by conventional means. As a result, the examination is less cumbersome than a synchronous laboratory examination. The examinees advance by opening individual question windows. The examinees tag questions for review, view the images of specimens at more than one level of magnification, add notes to a textbox and strike through the options of multiple-choice questions that are judged to be erroneous. The evaluation of the computer application by the examinees shows the interactive features of the application are useful. The security of the examination during and following an examination is handled by the computer application and the actions taken by the course director. The security measures make the recycling of the images and questions on future examinations feasible.

INTRODUCTION

An instructional program in the microscopic structure of the body is often taught in medical school under the rubric of histology or microscopic anatomy.1-2 The program of instruction, which includes lecture and laboratory sessions, begins with the structure of a cell (cytology), continues with the organization of the tissues (histology) and culminates with the morphology of the organs (microscopic anatomy). And at intervals, as a student progresses from cells to organs, his or her mastery of the laboratory work is measured with a special examination.3-5

The emergence of the computer as a pedagogic tool for histology over the past fifteen years has been dramatic.1-2, 6-7 The application of computer technology to histology instruction, however, is by no means uniform.2 Some instructors report using computer technology to supplement the activities associated with a traditional microscope experience3, 5, 8-16 and others report supplanting traditional microscope exercises with computer technologies.4, 13, 17-20 As far as the authors can ascertain, however, only a few instructors have reported using computer-based testing for the summative assessment of knowledge.22-23

There are programs of instruction in histology at the University of Buffalo that utilize digitized photomicrographs of histological materials embedded in computer-guided learning programs.4 The digital materials are not virtual slides and the programs do not use virtual microscopes, the two terms being reserved for a computer-based system that simulates the traditional microscope experience as much as possible.24 The digitized photomicrographs of the computer-guided programs are images of microscopic fields selected for their superior instructional value. The digital images are static images and, unlike virtual slides, are not moved or magnified by computer software.24-26 They are, however, accompanied by an insightful explanation of how the objects seen in the histological sections of tissues and organs are identified when viewed with a microscope.

The results of a computer-based examination described in this report contribute a percentage to a student’s overall grade in an interdisciplinary medical school module. The content for one module (with 2 examinations) includes tutorials on the light and electron microscopic structure of a typical cell and the morphology of epithelial tissues, blood cells, fibrous connective tissue, muscle tissue, nerve tissue and blood vessels. The examinations contain images never seen by the examinees and images used in the computer-guided tutorials. The computer application that runs the examination has been used continuously since 2001. As of 2006, the database of questions contained 288 image-based questions.

Microscopes, videotapes and print media have been used at various times in a traditional histology course and interdisciplinary modules to assess the students’ ability to recognize the microscopic objects seen in histological specimens. The motivation for turning to the computer to deliver an examination was to bring symmetry to the method of learning and assessment in the introductory foundations module. The impetus for doing so was the students’ apprehension at taking a print- or video-based examination after using only the computer-guided tutorials.4 The asymmetry was disconcerting to the students4 and the state of affairs raised the question: why not expand the uses of computer technology to include the delivery of examinations? At the same time, the thought of utilizing computer technology for assessment was seen as an opportunity to streamline the delivery and scoring of an examination and eliminate some of the factors that make test-taking with microscopes, projected slides, digital images and digital images displayed with videotapes cumbersome. Two of the most restrictive factors are the synchrony with which the questions are presented and the corresponding need to limit the amount of time allotted to answering each of the examination questions.22

Two groups report using commercially available authoring software interfaces for summative assessment in histology.22, 23 The computer-based examinations are described by the students at the Medical University of South Carolina as “‘Efficient’, ‘educational’, ‘better’ and ‘helpful’” compared to paper and pencil examinations22 and the students at the University of Arkansas did not perform differently when tested with paper and pencil or computer-based lecture examinations.23

The current report builds on the previous contributions to the literature on the use of computer-based assessment in histology by: (1) outlining the general structure of a computer application that runs a combined lecture and laboratory examination, (2) describing how the interactive features of the application are used and the way the features improve the process of sitting for a laboratory examination, (3) summarizing the results of the students’ evaluation of the computer interface and (4) recounting the experience of using a computer-based examination specifically designed for use in a laboratory program in histology.

Computer Application for Examinations

The computer application uses three separate technologies: data management, data display, and user interface. Microsoft® SQL Server 2000® (SQL) running on a Microsoft® Windows 2003 Server® handles the management of all of the information passing into and out of a database. The data is accessed and saved using stored procedures (commonly used routines) that run on the SQL Server®. Data display is handled using Active Server Pages (ASP). The ASP utilizes ActiveX Data Objects (ADO) and VBScript. The ADO is the conduit through which the database is accessed.

Data can be requested from or sent to a database using ADO. Once the data is received, it can be manipulated into the necessary format for display using VBScript. For the user interface, the components used are JavaScript, Cascading Style Sheets (CSS), and Hypertext Markup Language (HTML), commonly known as Dynamic HTML (DHTML). JavaScript executes interface interactions with the user, CSS handles formatting and the Web pages are structured with HTML.

In the database, the questions, options, image references and the answer key are stored separately. The separation of the items allows for the reuse of the questions with different options and correct answers. The reuse of the questions with different options and correct answers can often be done with the image-based questions if the question text is brief. Additionally, information is stored in the database relating to general test information: the title and date of the exam and specific user information, such as the users’ names and identification numbers, the time an examinee is assigned to take an examination, and the examinees’ responses and scores for each examination. One of the benefits of using a relational database is that many statistics can be compiled from the exam results. After an examination, the results can be queried to show the response rate for questions as well as for the users.
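The kind of per-question statistic mentioned above can be illustrated with a short sketch. It is written in JavaScript for consistency with the rest of the application rather than as a database query, and it is not the application's actual code: the function name `tallyResponses` and the row fields `question` and `response` are assumptions chosen for illustration.

```javascript
// Hypothetical sketch: count how often each option was chosen per question.
// Each row is assumed to hold one examinee's response to one question.
function tallyResponses(rows) {
  const tally = {};
  for (const row of rows) {
    if (!tally[row.question]) tally[row.question] = {};
    const counts = tally[row.question];
    counts[row.response] = (counts[row.response] || 0) + 1;
  }
  return tally;
}

// Illustrative data only; these are not results from the report.
const demoRows = [
  { question: "q101", response: "a" },
  { question: "q101", response: "b" },
  { question: "q101", response: "a" },
  { question: "q102", response: "d" },
];
const demoTally = tallyResponses(demoRows);
```

Dividing each count by the number of examinees would give the response rate for every option of a question.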

Each original digital image used for Web display is converted to an image file that is smaller than the original file. A smaller file size reduces the loading time for an image on the medical school’s computer network. One digital image is loaded on a Web page with a question; the maximum height of an image is 200 pixels. Larger graphics push some of the contents of the Web page beyond the limits of the monitor screen; limiting the height of a digital image prevents this from happening and spares an examinee the need to scroll the length and/or width of a Web page. When a question requires another image, an alternate image is made available.

How the Computer Application Works

A student logs on to the application using a unique identifier provided by the University. Having done so, an examinee sees any assignments, i.e., the examination scheduled that day, or previously completed examinations associated with the identifier. The assignments are available to the user at log-on for a defined time period. Once an assignment is selected, the ASP calls the SQL stored procedure to retrieve the appropriate test information. It then builds an external JavaScript file on a server with an array of questions and options for an examination. Each user has a distinct JavaScript file created for loading an examination because the users do not load an examination simultaneously. The JavaScript file is removed from the server once an examination is loaded.

An examination is loaded from the JavaScript file. The questions are randomized using a second JavaScript array that is unique for each of the examinees as it is loaded. Even though the examination questions are randomized, form element tag names drawn from the database are consistent with the questions no matter the order in which they are displayed. A similar procedure is used to randomize the order of the options. Each of the options maintains the same name as it is loaded, but they are not loaded in the same order at each workstation. Consequently, the same examination questions are not displayed to all of the examinees simultaneously and the order of the options is not displayed in the same order at every workstation even though everyone receives the same images, questions and options.
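The per-examinee randomization described above can be sketched as follows. This is a minimal illustration, not the application's actual code: the Fisher-Yates shuffle, the function names `shuffle` and `buildExamOrder`, and the shape of the question objects are all assumptions. The point it shows is that each question and option keeps its name while only the display order changes.

```javascript
// Hypothetical sketch: shuffle display order while keeping stable names.
// Fisher-Yates shuffle; returns a new array and leaves the input untouched.
function shuffle(items, random = Math.random) {
  const copy = items.slice();
  for (let i = copy.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    [copy[i], copy[j]] = [copy[j], copy[i]];
  }
  return copy;
}

// Each question keeps its name (assumed here to come from the database);
// only the order of the questions and of their options differs.
function buildExamOrder(questions, random = Math.random) {
  return shuffle(questions, random).map((q) => ({
    name: q.name,
    text: q.text,
    options: shuffle(q.options, random),
  }));
}

// Illustrative questions only.
const exampleQuestions = [
  { name: "q101", text: "Identify the tissue.", options: ["a", "b", "c", "d", "e"] },
  { name: "q102", text: "Identify the cell.", options: ["a", "b", "c", "d", "e"] },
  { name: "q103", text: "Identify the organ.", options: ["a", "b", "c", "d", "e"] },
];
const shuffledExam = buildExamOrder(exampleQuestions);
```

Because responses are keyed by name rather than by position, they can be scored against one answer key regardless of the order shown at a given workstation.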

An examination launches in full screen mode with only one of the examination questions displayed at a time. This display is done by using the CSS property “display: none” for each question after the first question. When an examinee selects a navigation button or a question number from a grid at the top of the screen, a JavaScript changes the display property of the current question to ‘none’ and the selected question to ‘inline’.
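The one-question-at-a-time display can be sketched as below. The function name `showQuestion` and the stand-in element objects are assumptions made so that the example runs outside a browser; in a Web page the array would hold the page elements that wrap each question.

```javascript
// Hypothetical sketch: show exactly one question at a time.
// `questionElements` stands in for the per-question page elements; in a
// browser these would be DOM nodes, here any object with a `style` works.
function showQuestion(questionElements, selectedIndex) {
  questionElements.forEach((element, index) => {
    element.style.display = index === selectedIndex ? "inline" : "none";
  });
  return selectedIndex;
}

// Stand-in elements so the sketch runs without a browser.
const demoElements = [{ style: {} }, { style: {} }, { style: {} }];
showQuestion(demoElements, 0); // initial state: only the first question is visible
showQuestion(demoElements, 2); // the examinee jumps to the third question
```

Since every question is already present in the loaded page, moving between questions in this way requires no further request to the server.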

A JavaScript matches the correct and incorrect responses upon submission of an examination. The responses are submitted to the database through an ASP calling a SQL stored procedure. The grade, with personal information, the date of the examination, the assignment number, and an examinee’s questions and list of answers are saved to a database. All of the questions and options are passed as a single parameter and parsed by the stored procedure. The information is kept in a row-based format. The columns in the response table are unique identifier, assignment number, question name, user response, and user response group number. If necessary, modifications to the correct responses for the questions can be made in the database. Once an option is modified, the database of user responses can be queried and the scores adjusted according to the revised option.
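A rough sketch of the scoring and submission steps is given below. The report does not specify how the responses are packed into the single parameter, so the `name=response;name=response` format, the function names and the sample data are assumptions made for illustration only.

```javascript
// Hypothetical sketch: score responses and pack them into one string.
function scoreExam(answerKey, responses) {
  let correct = 0;
  for (const name of Object.keys(answerKey)) {
    if (responses[name] === answerKey[name]) correct += 1;
  }
  return correct;
}

// Assumed wire format: "name=response" pairs joined by semicolons.
function packResponses(responses) {
  return Object.keys(responses)
    .map((name) => `${name}=${responses[name]}`)
    .join(";");
}

// Mirrors the parsing step that the text assigns to the stored procedure.
function unpackResponses(packed) {
  const result = {};
  if (packed === "") return result;
  for (const pair of packed.split(";")) {
    const [name, response] = pair.split("=");
    result[name] = response;
  }
  return result;
}

// Illustrative data only.
const demoKey = { q101: "b", q102: "d", q103: "a" };
const demoResponses = { q101: "b", q102: "c", q103: "a" };
const demoScore = scoreExam(demoKey, demoResponses);
const demoPacked = packResponses(demoResponses);
```

Each unpacked pair corresponds to one row of the response table, which is what allows the scores to be recomputed later if an answer key entry is revised.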

The computer application is dependent on JavaScript for several reasons. Early in its development, the computer application was designed to run without a database and independent of a computer network. Originally, the application could actually be held on a diskette. The design has advantages. It somewhat mimics a traditional paper examination in that the examination is not accepted until it is completed to the satisfaction of the examinee. It also eliminates the need to change the examinees’ responses in the database as they proceed through the exam and revise the responses. Finally, it limits the network traffic, thus making the response time for navigation faster.

The application saves user responses to the local computer as cookies that can be retrieved later and deleted after submission to the database. The computer application is also designed to give immediate feedback to the users, displaying a score and a corrected examination upon submission of an examination; however, this feature can be turned off.

Screen Display and Navigation

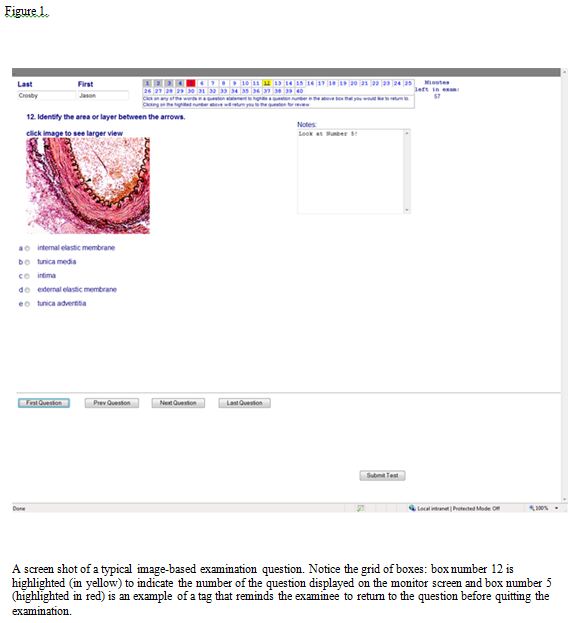

Figure 1 illustrates the layout of an examination window. Five buttons run along the bottom of the window from the left-hand side to the right-hand side. The first four buttons, which allow an examinee to navigate to different questions, are named for the actions that they are designed to accomplish: selecting the “First Question”, “Previous Question”, “Next Question” and “Last Question” buttons displays the first question, previous question and so on. Selecting the “Submit Test” button on the far right-hand side of a window submits the answers selected and closes the application.

An examinee can scan the entire examination viewing the examination windows in numerical order by using the “Next Question” button, then return to the beginning of an examination with the “First Question” button before attempting to answer any of the examination questions. Alternately, an examinee can peruse the windows responding to each question as it appears when the “Next Question” button is selected. Or, an examinee can jump to any question using the navigation buttons and the grid of boxes at the top of the question window.

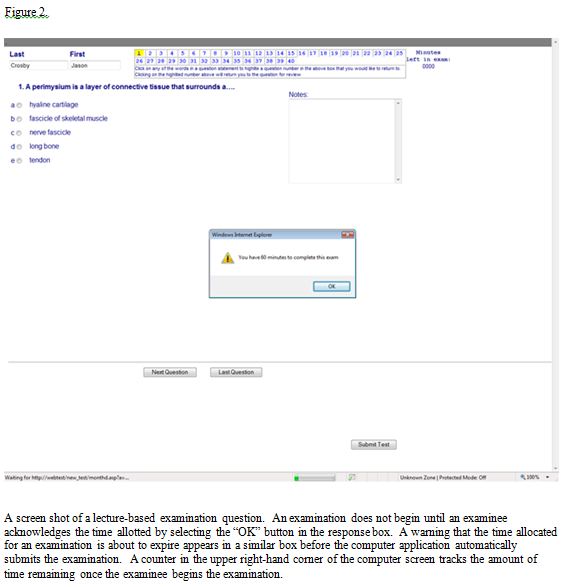

During an examination, an examinee’s responses are saved locally as a cookie on the computer. To avoid accidental or premature submissions, a prompt asks an examinee to confirm whether he or she intends to submit an examination before it is written to the database. A timer in the upper right-hand corner of the window counts down the number of minutes remaining before the examination closes. Upon the expiration of the time limit for an examination, a JavaScript automatically submits the examination. An examinee is notified of the time limit for an examination when the examination loads (Figure 2) and of the amount of time remaining at five minutes and at one minute before the program automatically terminates and submits the examination.
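The timer behavior can be sketched as a pair of small functions. The names `timerAction` and `minutesDisplayed`, the one-second tick and the choice to round the displayed minutes upward are assumptions; the report states only that warnings appear at five minutes and at one minute and that the examination is submitted automatically when time expires.

```javascript
// Hypothetical sketch: decide what the timer should do at each tick.
// Warnings at five minutes and one minute, automatic submission at zero.
function timerAction(secondsRemaining) {
  if (secondsRemaining <= 0) return "submit";
  if (secondsRemaining === 300) return "warn-5-minutes";
  if (secondsRemaining === 60) return "warn-1-minute";
  return "none";
}

// Minutes shown by the countdown display, rounded up (an assumption) so
// that the final partial minute reads as 1 rather than 0.
function minutesDisplayed(secondsRemaining) {
  return Math.max(0, Math.ceil(secondsRemaining / 60));
}
```

In a browser, a function such as `timerAction` would typically be called once per second from a repeating timer, with the page showing a warning or submitting the form according to the value returned.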

The name of an examinee is posted in the top left-hand corner of the question windows. Immediately to the right, a grid of boxes lists the numbers assigned to the questions. The color of a box changes from white to yellow when an examination window corresponding to the question number in the grid of boxes is displayed on a monitor screen. In Figure 1, a user advanced to question 12; therefore, the background of box number 12 is highlighted in yellow. The boxes for which an answer is selected are highlighted in grey. In this way, an examinee can keep track of his or her progress toward completing the examination. The change in the color of a box is done using the CSS ‘background-color’ property and JavaScript.

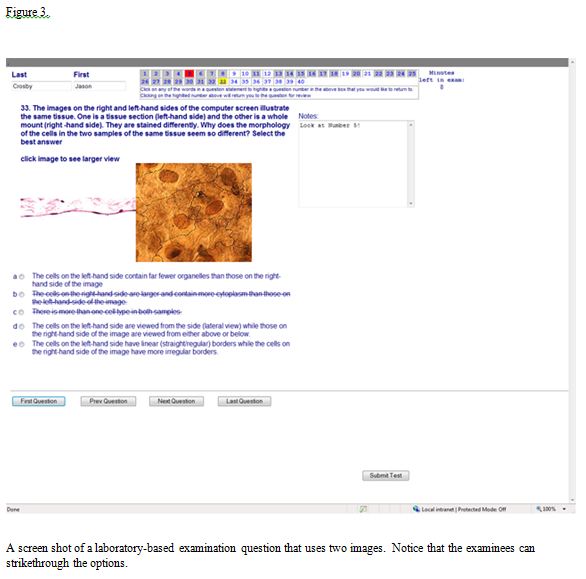

Placing the cursor over the text of a question and left mouse clicking once changes the color of a box from yellow to red (Figures 1 and 3). If the examinee proceeds to another question, the box remains highlighted in red. The boxes tagged in red comprise a subset of the questions an examinee wishes to postpone answering or reevaluate before submitting the examination. Clicking on a red color-coded box in the grid of boxes returns an examinee to the specific question window. Upon returning to the question, the color of the box corresponding to the question selected changes from red to yellow, indicating the number of the question displayed on the monitor screen. When the examinee moves on to another question, the color reverts to the prior color. However, if the examinee answers the tagged question by making a selection from the list of options, the background color for the appropriate question box in the grid of boxes is highlighted in grey to indicate the question has been answered. The question can be marked again for review by clicking on the text of the question. The red tag is only turned off and the box highlighted in grey when the current selection is verified by selecting it again or choosing an alternate answer from the list of options.
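The tagging rules can be summarized in a small state sketch. It is a simplification under stated assumptions: the function names and the state fields `answered`, `tagged` and `response` are hypothetical, and the yellow highlight of the question currently on screen is left out, so `restingColor` gives only the color a box shows once the examinee has moved on to another question.

```javascript
// Hypothetical sketch of the grid box colors for a question that is not
// currently displayed: red if tagged, grey if answered, white otherwise.
function restingColor(state) {
  if (state.tagged) return "red";
  if (state.answered) return "grey";
  return "white";
}

// Clicking the question text tags the question for review.
function tagForReview(state) {
  return { ...state, tagged: true };
}

// Selecting an option (the same one again or a different one) records
// the answer and clears any review tag.
function selectOption(state, option) {
  return { answered: true, tagged: false, response: option };
}
```

Treating each action as a function from one state to the next keeps the color of a box a pure consequence of whether the question is tagged or answered.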

An examination question occupies the left hand side of an examination window (Figures 1-4). The question is located beneath an examinee’s name and the grid of color coded boxes. The examination questions are one-best-answer questions.27 They consist of (1) a question and a list of options consisting of one correct answer and four distracters (Figure 2); (2) a statement or instruction for handling a question, an image and options (Figure 3); or (3) an examination question, an image and options (Figure 1). Up to five possible options with radio buttons are listed beneath an image.

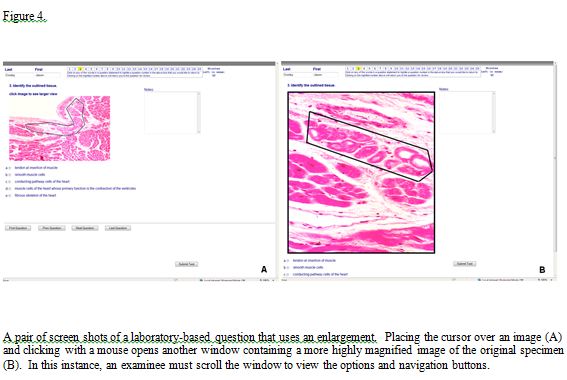

A structure or the structures that are related to a question are marked by an arrow or arrows (Figure 1) or outlined (Figure 4). The color of the graphic is chosen for its ability to draw the gaze of an examinee to the location of the structures. The use of color improves the chances of this happening in an image of a specimen that is stained with a dye of contrasting color.

Two images may be needed to adequately illustrate the histology of a specimen. The first image usually illustrates the morphological features that cannot be seen in a more limited field of view (Figure 4A). A second, more highly magnified image then focuses on a more limited field in order to reveal the morphological details of the objects in a specimen that are not easily discerned or cannot be discerned in the wider field of view (Figure 4B).

The statement “click image to see larger view” (Figures 1, 3-4) indicates a larger image or an enlargement of a critical area of the original specimen is available. Choosing a larger image may push the options and the control buttons to the bottom and the textbox of notes farther to the right-hand side of the Web page (Figure 4B). Changing the image is done by using a JavaScript to swap the CSS display property between ‘inline’ and ‘none’ for the initial and enlarged images. The initial image reappears and the second image is removed when the user mouse-clicks on the enlarged image.

An examinee can keep track of the options that he or she believes do not answer a question correctly by placing a line through any of the five options (Figure 3). The strikethrough of an option is accomplished by moving the cursor over an option and left clicking with a mouse. If desired, the line can be removed by repeating the procedure. Setting the CSS text-decoration property to ‘line-through’ places a line through the text of any of the options.
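The strikethrough toggle can be sketched as below. The CSS value that draws a line through text is `line-through`; the function name `toggleStrikethrough` and the stand-in option object are assumptions made so that the example runs outside a browser.

```javascript
// Hypothetical sketch: toggle a line through the text of an option.
// `optionElement` stands in for the page element holding the option text.
function toggleStrikethrough(optionElement) {
  const struck = optionElement.style.textDecoration === "line-through";
  optionElement.style.textDecoration = struck ? "none" : "line-through";
  return !struck; // true when the option is now struck through
}

// Stand-in element so the sketch runs without a browser.
const demoOption = { style: { textDecoration: "none" } };
```

Calling the function once strikes the option and calling it again restores the text, which matches the click-to-remove behavior described above.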

A textbox (Figures 1-4) was added to the right-hand side of an examination window in response to the students’ request for note paper. In Figures 1 and 3, the reminder “Look at Number 5!” typed into the textbox serves to illustrate an entry. In practice, the examinees enter the facts they can recall and believe are relevant to answering a question. The information is referred to while trying to reach a decision regarding the best possible option if the option is not recognized or immediately known. During an examination, the entries to the textbox are saved while the program is running and are available should an examinee need to return to a question to reexamine the logic or information used in choosing an answer.

While an examination is open, the options selected by an examinee are saved in a cookie on the system. If an examinee needs to reload the examination, the radio buttons chosen by an examinee are filled in. However, neither the notes nor the question numbers tagged for review in the grid of boxes at the top of the monitor screen can be restored. The cookie has a unique name based on the user who is logged-in to the application. It is deleted from the computer system once an examination is submitted or when the computer system is rebooted.
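Saving and restoring the selected options through a per-user cookie can be sketched as follows. The report does not describe the cookie's name or encoding, so the `exam_` prefix, the JSON encoding and the function names are assumptions; the original application predates built-in JSON support in browsers and would have used some other encoding.

```javascript
// Hypothetical sketch: store selected options in a per-user cookie.
function cookieNameFor(userId) {
  return `exam_${userId}`; // assumed naming scheme
}

// Builds the "name=value" string that would be assigned to document.cookie.
function buildCookie(userId, responses) {
  const value = encodeURIComponent(JSON.stringify(responses));
  return `${cookieNameFor(userId)}=${value}`;
}

// `cookieString` has the "name=value; name=value" shape of document.cookie.
// Returns the saved responses, or an empty object if none are found.
function readResponses(userId, cookieString) {
  const prefix = `${cookieNameFor(userId)}=`;
  for (const part of cookieString.split("; ")) {
    if (part.startsWith(prefix)) {
      return JSON.parse(decodeURIComponent(part.slice(prefix.length)));
    }
  }
  return {};
}
```

On reload, the restored object would be used to fill in the radio buttons. Notes and review tags are not part of the saved value, which is consistent with their not being restored.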

Laboratory-Based Questions

The ability to recognize the microscopic structures is assessed by having the examinees identify objects that are seen in the digital images of histological specimens (Figures 1 and 4). There are also questions for which the recognition of an object is the first step in selecting an answer to a question. Thus, a structure serves as the basis for a question and an examinee must select a function or relevant point regarding the structure from the list of would-be answers. The breadth of the questions can be expanded by grouping two or more images together (Figure 3). An examinee must then recognize the content of each of the images and answer a question that relates one of the images to the other one.

Before the use of computer-based examinations, multiple-choice questions had replaced fill-in-the-blank questions, even for the examinations that were microscope-based. After several years of computer-based testing, the authors were reminded that fill-in-the-blank questions are handled differently.5 The propensity of the students to respond to a question with a correct but different answer, inadvertently confuse terms and misspell words complicates the scoring of an examination. The situation can be resolved with a database that takes all of the possible answers into account. Or, an examination can be rescored after the unanticipated answers become known. It is also possible to have the examinees select an answer from an extensive list of possible options.28 In the end, the authors were more comfortable with multiple-choice questions and continued to use them.

Limitations Imposed by the Number of Computer Workstations and Class Size

An examination is not administered to all of the students in one sitting because the number of students in the class outnumbers the computer workstations by approximately 2 to 1. The disparity is handled by dividing the class into two groups: one-half of the class sits for an examination immediately after the other half finishes an identical examination. While one group is finishing the first session of the examination, the other group is sequestered in a nearby room shortly before the end of the first examination. To ensure the content of the examination is not revealed to anyone in the second group, all of the students in the first group remain in the computer laboratory until all students in the second group are assembled and accounted for. Therefore, all of the students sitting in the first session, even those who submit the examination early, cannot leave the examination room before the second group is ready to take the place of the first group. At the close of the first examination, the first group is released and the second group is moved into the room. The system is not popular with the students because the examinees are not permitted to leave or use the computers after submitting the examination. The procedure ensures the students from the two groups do not come into contact with one another to discuss the contents of the examination in the interval between the examinations.

Starting, Ending and Reviewing the Results of an Examination

Once seated, the examinees are briefly introduced to the graphic display and features of the computer interface. They are shown how to move through the examination using the navigation buttons and how to select the enlargements, strike through the options, make textbox entries and submit the examination. The examinees are told that the examination will be submitted automatically once the time allotted expires, and they are told about the countdown timer and the time warnings that appear toward the end of the examination. The color code for the grid of question numbers and the method of tagging questions for review are explained and demonstrated using several of the examination questions. The security features for randomizing the order of the questions and the response options are made clear to them.

The computer application can display the results of an examination anytime after the examination has been submitted. To view the results of an examination, a student logs on to the same application Web interface using his or her unique identifier. Once the student is logged on, the score and a composite of the individual’s examination (the questions, digital images, options and answers, and the answers chosen by the examinee) are displayed.

The summary of the examination can be displayed at the workstation upon submission of an examination. However, the speed with which the scoring and the results can be returned to the students is not used for the following reasons: all of the students do not finish at the same time and immediate feedback could be distracting to those who have not finished the examination; the faculty, who are busy proctoring, answering questions or preparing for the second group of examinees, are unable to speak to students who may need to discuss the results of the examination; and when the students are asked about immediate feedback, some have stated they would prefer to review the results of an examination in a less public setting or at least when there are fewer people in the computer laboratory.

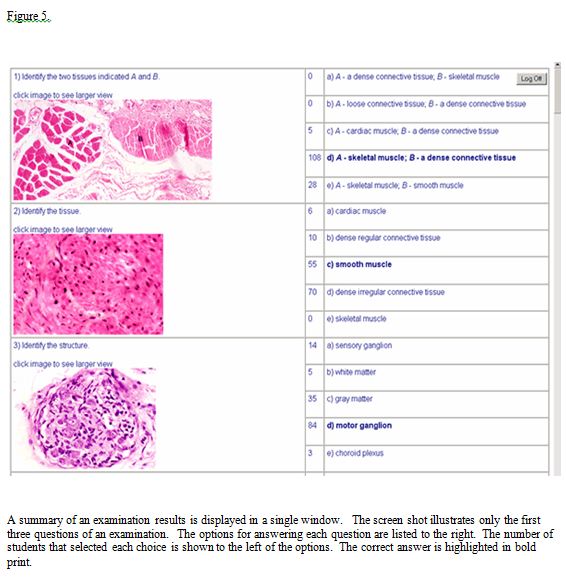

For the purposes of security (see below), the course policy is to deny direct access to an electronic copy of the examination. Also, a hard copy and answer key are not posted. A student who wishes to see his or her “examination paper” and the way it was scored, and to discuss his or her performance, must meet privately with the course director. The student is shown the computer-generated summary of the examination. The computer application also generates a Web page that differs from the student examination paper only in that it summarizes the distribution of the options chosen by the entire class for each of the questions (Figure 5).

Examination Security

Several steps are taken to secure the examination during and after the administration of an examination. The sequestering of the two examination groups and the scrambling of the questions and options have been mentioned. During an examination, the examination is proctored by the faculty and each examinee’s line of sight is restricted to the area immediately in front of a computer monitor by a 3M™ Privacy Filter fitted to the size of the monitor screens. The input from the keyboard is limited to the typing of notes in the textbox and input from the mouse is limited to the left mouse button. At computer log-in, a standard user profile limits the usability of the computer system and restricts the user to the testing venue.

A computer program (Deep Freeze / Faronics Corporation) installed on all of the computers prohibits changes to the computer system. Following an examination, rebooting the computers removes any cookies, images, or residual files left in the cache or history of the computers and prevents the inadvertent release and unauthorized copying of the images. As an alternative, a secure browser may also be used to prevent the copying of an examination.

Backup System

A redundant system backs up the primary system in the event of a system failure. The alternate system duplicates the testing application, along with the database and graphics. Any of the primary systems (testing application and database) can be utilized with the corresponding backup system giving four possibilities for successfully presenting an examination. As a result, the primary test application can be used with either the primary or secondary database. Likewise, the secondary test application can be used with either the primary or secondary database. The change in databases is made by directing the application to the appropriate database. Both testing applications are reached through a host file on a local computer.

Collaborations

The computer application and computer-based examination described in this report are the result of collaboration between the faculty in the module and a computer programmer (JSC). The latter is responsible for the computer application and input of the database and initially the former along with his colleagues in histology (Drs. Cynthia Dlugos, Chester Glomski, Roberta Pentney and Herbert Schuel) were responsible for the content of an examination. With the loss of faculty due to retirement or reassignment, two members of the faculty are now responsible for all of the content. The Office of Medical Computing (OMC) oversees the use of the central computer facility and an ancillary site which together house 82 computers. The OMC also provides the technical assistance needed at start-up and during the conduct of the examinations.

Normally the two faculty members supervise and proctor the examination when it is given in the larger of the two computer laboratories (70 workstations). Three to four computer specialists from OMC help with the set up of the workstations before an examination. During an examination, two aides are present in the larger room to accompany students who must leave the room during an examination. Between the examinations, two more aides assist in organizing the second group of examinees and moving the first and second groups into and out of the computer laboratory. If there are more than 140 examinees, a smaller computer laboratory with 12 workstations is staffed by an aide and proctored by a member of the faculty. The OMC also helps with the set up of the computers in the smaller room.

Student Evaluations

Immediately following an examination, the medical school classes of 2007 and 2010 were asked about their experience with computer-based examinations; their comfort level with the examination and the questions used on the examination; and about the computer interface used for the examination. Out of 140 students, 131 or 93.5% of the class of 2007 and 136 students or 97.1% of the class of 2010 responded to the questions contained in the evaluation instrument.

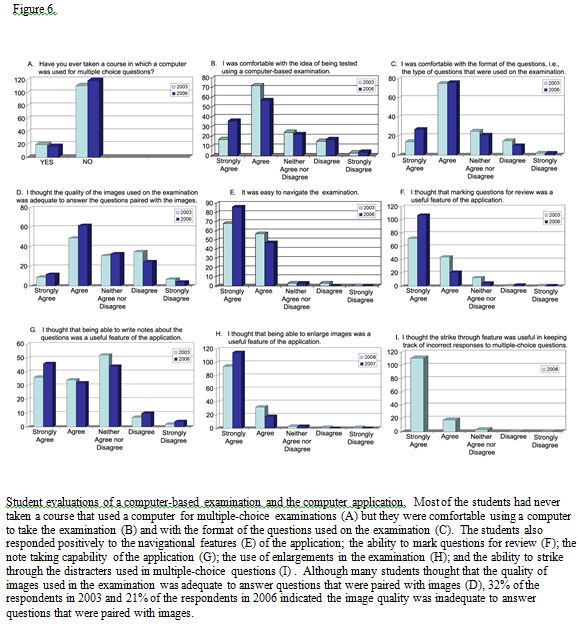

Most of the students (84.7% and 87.5%) had never taken a course that used computer-based testing (Figure 6A). (Note: in this and the examples that follow, the first percentage enclosed in parentheses corresponds to the students responding in 2003 and the second percentage corresponds to the students responding in 2006.) The majority of the students (67.9% and 68.4%) strongly agreed or agreed they were comfortable with the method of testing (Figure 6B) and the format of the questions (67.9% and 76.7%) used on the examination (Figure 6C). Well before an examination, the students were given examples that illustrated the form of the questions used in previous years. The question about the format of the questions evaluated whether the examinees were prepared for the formats actually used.

Forty-four percent of the students in 2003 and 55% of the students in 2006 strongly agreed or agreed that “the quality of the images…was adequate to answer questions that were paired with images” (Figure 6D). Despite using images that were judged by the faculty to be of superior quality and to have faithfully replicated the original microscopic materials, approximately a quarter of each class (23% and 24%) neither agreed nor disagreed with the statement and 32% and 21% disagreed or strongly disagreed. In both years, the students were asked to comment on the strengths and weaknesses of the application and the students often referred to the quality of the images. Some students described the images as being “unclear”, “fuzzy”, “blurry” and “of poor quality” while others characterized the images as “clear”, “good”, “great” and “of the highest quality”. The polarity of opinion was a concern given the importance of the images to the validity of a laboratory examination, and it was during the dialogue with students after an examination that we were made aware of the specific images that were objectionable to them. Normally, this amounted to 1-2 images out of approximately 12-15 of the images used. In speaking to students about the polarity of opinion, it was clear that the judgment of the students who considered the images to be of inadequate quality was colored by their inability to recognize structures contained in an image or to interpret what was demonstrated by an image, and not by the sharpness or quality of the images.

Nearly all of the students (95.4% and 97.8%) felt the computer application was easy to navigate (Figure 6E) and nearly all (96.2% and 97.8%) supported the use of enlargements (Figure 6H). A somewhat smaller percentage of students (88.6% and 94.1%) “thought marking questions for review was a useful feature of the application” (Figure 6F).

At the suggestion of the students, several features were added to the computer application. A textbox was intended to eliminate the need to distribute and collect pieces of note paper. However, only approximately one-half of either class (53.4% and 57.4%) strongly agreed or agreed that being able to write notes in the textbox was a useful feature of the application (Figure 6G). The half-hearted support for the textbox was reinforced by written comments that requested scrap paper the students could write or draw on.

The ability to strike through the options of multiple-choice questions was also added in response to student suggestions for improving the application (Figure 6I). The addition, which was evaluated in 2006, was popular. Ninety-five percent of the students strongly agreed this was a useful feature of the computer application.

DISCUSSION

The computer-based laboratory examination described in this report assesses the facts and concepts contained in the lecture and laboratory sections of a histology course, overcomes some of the inadequacies of conventional histology examinations and matches the method of assessment to the method of learning in a program of laboratory instruction carried out with computer-guided tutorials. The computer application, which was developed internally, generates and delivers the examinations from databases once the contents for the examination are in place. Once an examination begins, the faculty is needed only to proctor and to answer questions, if questions are permitted. Because the examination is paperless, paper is conserved and there are no examination papers to handle or score after an examination.

The scoring of the examination is more convenient than Scantron scoring (bubble-in answer sheets) or grading by hand: a server rather than the faculty scores an examination, the scoring is accomplished the moment the examination is submitted and an individual’s score can be displayed, if desired, on the monitor screen upon submission of the examination. The students prefer not to see a score immediately but are aware that the scores are readily available and therefore expect the results to be posted within a few days. If an item is scored improperly, the error is easily rectified and the examination for the entire cohort of examinees is automatically rescored. The server also stores the results in the memory of the computer along with student and examination information.
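The rescoring step can be illustrated with a minimal sketch. It is not the application described in this report: the data layout, the function names and the sample answers are all hypothetical. The sketch assumes only that each examinee's submitted answers are stored, so that correcting one entry in the answer key and rescoring updates every score in the cohort.

```python
from typing import Dict

# Hypothetical data layout (an assumption, not taken from the application):
# the key maps a question id to its correct option, and each stored
# submission maps a question id to the option the examinee chose.
AnswerKey = Dict[str, str]
Submission = Dict[str, str]


def score_examinee(key: AnswerKey, answers: Submission) -> int:
    """Count the questions whose chosen option matches the key."""
    return sum(1 for qid, correct in key.items() if answers.get(qid) == correct)


def score_cohort(key: AnswerKey, submissions: Dict[str, Submission]) -> Dict[str, int]:
    """Score every stored submission against the current key."""
    return {examinee: score_examinee(key, answers)
            for examinee, answers in submissions.items()}


# Illustrative values only.
key = {"q1": "A", "q2": "C", "q3": "B"}
submissions = {
    "student_1": {"q1": "A", "q2": "D", "q3": "B"},
    "student_2": {"q1": "A", "q2": "C", "q3": "D"},
}

scores_before = score_cohort(key, submissions)

# Suppose q2 was keyed improperly and the correct option is D.
# Correcting the key and rescoring updates the whole cohort at once.
key["q2"] = "D"
scores_after = score_cohort(key, submissions)
```

Because the stored answers are kept separate from the key, a correction needs no examination papers to be handled again, which is the convenience the paragraph above describes.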

As pointed out by Dr. Daniel Emmer, instructional software developer with the School of Dental Medicine, and Dr. Frank Schimpfhauser of the Office of Medical Education, the digital images and the questions can be recycled or a new examination made by mixing old images and questions with a selection of new ones. However, recycling is viable only if the examination questions and images are not posted or released to the students. Withholding the images from the students is viewed as a practical necessity for two reasons: first, the preparation of a laboratory examination de novo involves, in large measure, the time-consuming and challenging task of acquiring and customizing the digital images; and second, and more importantly, withholding the images protects the uniqueness of the examination. Unlike microscope, video and projection slide examinations, the digital images and questions of computer-based examinations may be copied and distributed if an electronic form of the examination is released to the students. If this were to occur repeatedly, at some point it would be impossible to create a genuine laboratory examination, and eventually the examinations would assess only the ability to associate a structure with an image, not the ability to apply knowledge.

Matching the method of assessment to the method of learning was expected to lessen the uneasiness of the students in the time leading up to an examination. Unfortunately, it did not. “Like many other students … students become apprehensive at exam time. In part this is due a loss of perspective, but a major contributing factor is that they do not know what to expect on the exams.”5 When two University at Buffalo students were asked what not knowing what to expect might mean, one explained that students do not know how they are going to perform and the other explained that the students have never been tested with a computer. The student insights are supported by student surveys done in 2003 and 2004. The surveys showed that only 7% of the students entered the school with training in histology and that only 14.3% and 12.5% of the students in 2003 and 2006, respectively, had taken a course in which a computer was used for multiple-choice examinations (Figure 6A).

Over a period of four years, a practice examination introduced the students to the mechanics of taking a computer-based examination, the interactive features of the computer application and the types of questions used on an examination. The exercise did not mitigate the uneasiness of the students and only raised the expectation that the questions used on the practice examination were the same as, or of the same difficulty as, those used on the real examination. It appears that the uneasiness observed in individuals and in the class as a whole can be quelled only by having them sit for a meaningful (actual) examination. To help prepare the students, they are now given sample questions that resemble the type and difficulty of the questions used on the examinations and are directed to Web sites where they can practice identifying structures.

The student-screen interactions that a graphical computer interface makes possible enable an examinee to perform operations that improve the process of sitting for a laboratory-based test. One of the most important improvements is the flexibility with which an examinee can navigate the examination windows. An examinee can scrutinize a window, even one previously opened, and do so as many times as necessary during an examination. Thus, within the restriction imposed by the overall time limit for an examination, the amount of time and effort expended on a particular question is determined by the examinee. The ability to pace oneself is thought to explain the student enthusiasm for computer-based testing in histology at the Medical University of South Carolina.22

Unlike a microscope examination, in which the magnification and the field of view are usually fixed, the computer-based examination offers another question window that allows an examinee to view more than one field of view or level of magnification. This is in keeping with the practice of using a range of magnifications when studying specimens with a microscope4,29-30 and is comparable to the way histology is presented in the computer-guided tutorials. The process of answering questions is also facilitated by being able to tag questions for review, add notes to a textbox and strike through the options. The interactions lead to or replicate many of the strategies used by students in testing situations.28 The performance of students tested with computer-based examinations and other kinds of examinations is being assessed.

In academia, the development of computer-based examinations may be “hindered or abandoned due to time and funding restrictions, or reliance on an individual academic.”31 In this instance, computer-based testing would not have been possible had it not been for the cooperation of the Office of Medical Education (OMC) in scheduling the school’s central computer facility for histology instruction and testing during class time, the technical expertise of the OMC staff and the construction of a common medical computing laboratory with enough computer workstations for half of the medical school class.

REFERENCES

1. Hightower, J.A., Boockfor, F.R., Blake, C.A., and Millette, C.F. The standard medical microscopic anatomy course: histology circa 1998. The Anatomical Record (The New Anatomist). 1999; 257 (3): 96-101.

2. Bloodgood, R.A., and Ogilvie, R.W. Trends in histology laboratory teaching in United States medical schools. The Anatomical Record (The New Anatomist). 2006; 289B (5): 169-175.

3. Lehmann, H.P., Freedman, J.A., Massad, J., and Dintzis, R.E. An ethnographic, controlled study of the use of a computer-based histology atlas during a laboratory course. Journal of the American Medical Informatics Association. 1999; 6 (1): 38-52.

4. Cotter, J.R. Laboratory instruction in histology at the University at Buffalo: recent replacement of microscope exercises with computer applications. The Anatomical Record (The New Anatomist). 2001; 265 (5): 212-221.

5. Heidger, Jr., P.M., Dee, F., Consoer, D., Leaven, T., Duncan, J., and Kreiter, C. Integrated approach to teaching and testing in histology with real and virtual imaging. The Anatomical Record (The New Anatomist). 2002; 269 (2): 107-112.

6. Painter, S.D. Microanatomy courses in U.S. and Canadian medical schools, 1991-92. Academic Medicine. 1994; 69 (2): 143-147.

7. Drake, R.L., Lowrie, D.J., and Prewitt, C.M. Survey of gross anatomy, microscopic anatomy, neuroscience, and embryology courses in medical school curricula in the United States. The Anatomical Record (The New Anatomist). 2002; 269 (2): 118-122.

8. Blystone, R.V., and Blystone, D.M. Digital video microscopy for the undergraduate histology laboratory. Journal of Visual Communication in Medicine. 1994; 17 (3): 125-131.

9. McLean, M. Introducing computer-aided instruction into a traditional histology course: student evaluation of the educational value. Journal of Audiovisual Media in Medicine. 2000; 23 (4): 153-160.

10. Harris, T., Leaven, T., Heidger, P., Kreiter, C., Duncan, J., and Dick, F. Comparison of a virtual microscope laboratory to a regular microscope laboratory for teaching histology. The Anatomical Record (The New Anatomist). 2001; 265 (1): 10-14.

11. Brueckner, J. A digital approach to cellular ultrastructure in medical histology: creation and implementation of an interactive atlas of electron microscopy. Journal of the International Association of Medical Science Educators. 2003; 13 (1): 30-35.

12. Black, V.H., and Smith, P.R. Increasing active student participation in histology. The Anatomical Record (The New Anatomist). 2004; 278B (1): 14-17.

13. Lei, L-W., Winn, W., Scott, C., and Farr, A. Evaluation of computer-assisted instruction in histology: effect of interaction on learning outcome. The Anatomical Record (The New Anatomist). 2005; 284B (1): 28-34.

14. Michaels, J.E., Allred, K., Bruns, C., Lim, W., Lowrie, Jr., D.J., and Hedgren, W. Virtual laboratory manual for microscopic anatomy. The Anatomical Record (The New Anatomist). 2005; 284B (1): 17-21.

15. Rosenbaum, B.P., Patel, S.G., and Lambert, H.W. Atlas for medical students: implementation of database technology. Journal of the International Association of Medical Science Educators. 2007; 17 (1): 58-66.

16. Rosenbaum, B.P., Patel, S.G., and Lambert, H.W. Development of a database to streamline access and manipulation of images in a web-based histology atlas. Journal of the International Association of Medical Science Educators. 2007; 17 (1S): 3-4.

17. Downing, S.W. A multimedia-based histology laboratory course: elimination of the traditional microscope laboratory. MedInfo. 1995; 8 (2): 1995.

18. Brisbourne, M.A.S., Chin, S.S.-L., Melnyk, E., and Begg, D.A. Using web-based animations to teach histology. The Anatomical Record (The New Anatomist). 2002; 269 (1): 11-19.

19. Blake, C.A., Lavoie, H.A., and Millette, C.F. Teaching medical histology at the University of South Carolina School of Medicine: transition to virtual slides and virtual microscopes. The Anatomical Record (The New Anatomist). 2003; 275B (1): 196-206.

20. Krippendorf, B.B., and Lough, J. Complete and rapid switch from light microscopy to virtual microscopy for teaching medical histology. The Anatomical Record (The New Anatomist). 2005; 285B (1): 19-25.

21. Kumar, R.K., Freeman, B., Velan, G.M., and de Permentier, P.J. Integrating histology and histopathology teaching in practical classes using virtual slides. The Anatomical Record (The New Anatomist). 2006; 289B (4): 128-133.

22. Ogilvie, R.W., Trusk, T.C., and Blue, A.V. Students’ attitudes towards computer testing in a basic science course. Medical Education. 1999; 33 (11): 828-831.

23. Kane, C.J.M., Burns, E.R., O’Sullivan, P.S., Hart, T.J., Thomas, J.R., and Pearsall, I.A. Design, implementation, and evaluation of the transition from paper and pencil to computer assessment in the medical microscopic anatomy curriculum. Journal of the International Association of Medical Science Educators. 2007; 17 (1): 38-44.

24. Bloodgood, R.A. The use of microscopic images in medical education. In: Gu, J., and Ogilvie, R.W. (Eds). Virtual Microscopy and Virtual Slides in Teaching, Diagnosis, and Research. Chapter 8 (pages 111-140). Boca Raton, Florida: CRC Press. 2005.

25. Dee, F.R., and Heidger, P. Virtual slides for teaching histology and pathology. In: Gu, J., and Ogilvie, R.W. (Eds). Virtual Microscopy and Virtual Slides in Teaching, Diagnosis, and Research. Chapter 9 (pages 141-149). Boca Raton, Florida: CRC Press. 2005.

26. Ogilvie, R.W. Implementing virtual microscopy in medical education. International Association of Medical Science Educators. 2006. http://www.iamse.org/development/2006/was_2006_spring.htm Accessed November 1, 2007.

27. Case, S.M., and Swanson, D.B. Constructing Written Test Questions for the Basic and Clinical Sciences. National Board of Medical Examiners. 2002.

28. Peterson, M.W., Gordon, J., Elliott, S., and Kreiter, C. Computer-based testing: initial report of extensive use in a medical school curriculum. Teaching and Learning in Medicine. 2004; 16 (1): 51-59.

29. Stiles, K.A. Handbook of histology. New York, New York: McGraw-Hill Book Company, Inc. 1950.

30. Krause, W.J. The art of examining and interpreting histological preparations. Pearl River, New York: The Parthenon Publishing Group. 2001.

31. Bull, J. Computer-Assisted Assessment: Impact on Higher Education Institutions. Educational Technology & Society. 1999; 2 (3): 123-126. http://www.ifets.info/journals/2_3/joanna_bull.html. Accessed November 21, 2006.